Experimenting With Machine Intelligence

By John Blankenship, Samuel Mishal

Machine-based intelligence is an exciting field often assumed to be out of the reach of typical hobbyists. Explore some unconventional ways of creating robots that can learn how to solve problems on their own, while adapting to a changing environment.

If you examine dictionaries and encyclopedias, you can find many definitions of artificial or machine-based intelligence. Depending on who you ask, here are a few of the definitions you might find:

- Solving problems through the utilization of sensory capabilities.

- The ability to make decisions based on past experiences.

- Reacting appropriately in the face of insufficient or conflicting information.

- The ability to adapt to a changing environment.

- Behavior demonstrating deduction, inference, and creativity.

The first of these definitions would attribute at least some level of intelligence to many of the robots you might see at a robotics club meeting. The last definition implies that intelligence must demonstrate human-like qualities — which allows many hard-liners to argue that machines can never attain true intelligence.

The goal of this article is to explore an option in between these two extremes.

We should start by saying that our methodology does not represent typical AI research, but we think you will find it both entertaining and thought-provoking. More importantly, it works.

Our objective is to build a robot that can learn on its own and constantly adapt to changes in its environment. The robot must have a well-defined goal so it can evaluate the effectiveness of its actions, thus providing a basis for modifying its own behavior.

To keep this project manageable, we need a relatively simple goal, yet one that is easily observable. Our robot will learn to follow a line — a common behavior for hobby robots.

Our control program, however, will not contain any line-following algorithms. Instead, the robot’s internal nature will be to randomly try various actions and evaluate their effectiveness for achieving the desired goal. The ability to perform this evaluation is crucial to the robot’s ability to learn on its own.

Evaluating the Robot’s Actions

Appropriate evaluation cannot be conducted without associating the action being evaluated with a particular environmental state. For example, it makes no sense to say that turning left helps the robot follow a line. We could say, though, that if a set of line-detecting sensors indicates a specific pattern, then turning left can help achieve the goal. This simple principle will be the basis for our robot’s ability to learn.

The robot will constantly observe its environment (by examining sensors) and then randomly perform some movement. If the robot determines that its movement helped achieve the desired goal, then the action taken (and its associated environmental state) will be remembered by storing it in memory.

If the robot encounters the same environmental conditions in the future, then it can retry the action associated with it. If performing the action still helps the robot achieve the goal, then the memory of it should be strengthened. If the action does not produce desirable results, then the memory should be weakened and eventually discarded. This evaluation process not only allows the robot to determine what works, it also allows it to change its mind about what works when the environment changes.

Obviously, the robot must be able to determine if a particular action is helping it achieve its goal. Our robot will have a group of five line sensors for collecting information about its environment. Actions that cause the middle sensor of the group to detect a line will be deemed beneficial because the goal is actually being met. If this were the only criterion, though, the system would be simplistic at best. What is needed is a way to evaluate whether a particular action helps achieve the goal, even if that action does not immediately cause the robot to see the line with the center sensor. We will address this problem shortly.

All this sounds complicated, but it is easily implemented in code. Furthermore, the programming itself should help clarify the concepts. A real robot could be programmed to confirm our assertions, but RobotBASIC’s integrated simulated robot allows us to experiment in an environment that is easier to control and implement.

Implementing the Principles

Figure 1 shows a simplified version of the RobotBASIC program we used.

    main:
        gosub Init
        while 1
            gosub RoamAndObserve
        wend
    end

    RoamAndObserve:
        // genetically turn away from walls
        if rFeel() then rTurn (140+Random(80))
        if rSense()
            // something got our attention
            gosub ReactAndAnalyze
        else
            if !rFeel() then rForward 1
        endif
    return

Figure 1.

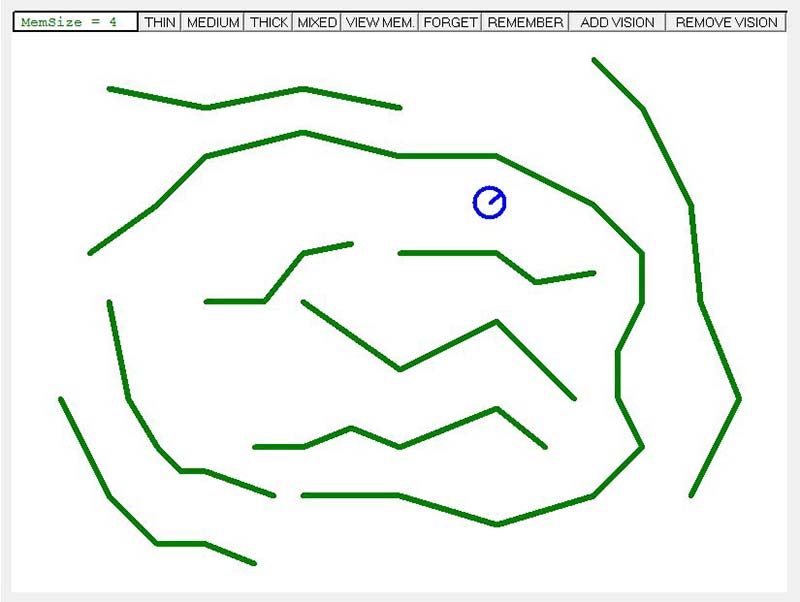

After some initialization (which creates the environment full of line segments shown in Figure 2), an endless loop causes the robot to roam around and observe its environment. The subroutine that performs these actions uses the robot’s perimeter sensors, which are read with the function rFeel(), to avoid walls as it moves around. Think of this as a built-in reflex reaction to a hot or sharp object.

Figure 2.

When a line is detected using the rSense() function, another subroutine is called to react in some way to the presence of the line and analyze the outcome of the actions taken.

This new subroutine ReactAndAnalyze implements the basic algorithm of our program. It is shown in Figure 3.

    ReactAndAnalyze:
        CurState = rSense()
        // see if CurState is in memory
        InMemory=false
        if NumStates>0
            for p=0 to NumStates-1
                if GoodState[p]=CurState
                    InMemory = True
                    break
                endif
            next
        endif
        if InMemory
            // react as expected based on past experience
            // perform the action associated with the current state
            gosub "Action"+ActionToTake[p]
            // decide if the action still produces good outcome
            NewState = rSense()
            Reward=false
            if NewState&2 then Reward=True
            // Also reward if this NewState is in memory
            if !Reward and NumStates>0
                for i=0 to NumStates-1
                    if NewState=GoodState[i]
                        Reward=True
                        break
                    endif
                next
            endif
            if !Reward
                // old memory does not seem to be valid
                ConfidenceLevel[p]--
                if ConfidenceLevel[p]=0
                    for i=p to NumStates-1 // delete memory
                        GoodState[i]=GoodState[i+1]
                        ActionToTake[i]=ActionToTake[i+1]
                        ConfidenceLevel[i]=ConfidenceLevel[i+1]
                    next
                    NumStates--
                endif
            else
                // increase confidence of memory (up to 3)
                if ConfidenceLevel[p]<3 then ConfidenceLevel[p]++
            endif
        else
            // try something new
            NewAction = Random(6)+1
            gosub "Action"+NewAction
            // now see if the New Action produced good results
            NewState = rSense()
            Reward=false
            if NewState&2 then Reward=True // gave direct reward
            // Also reward if this NewState is in memory
            if !Reward and NumStates>0
                for i=0 to NumStates-1
                    if NewState=GoodState[i]
                        Reward=True
                        break
                    endif
                next
            endif
            if Reward
                // good result so add to memory if not there already
                // CurState is what initiated this action so add to memory
                // NewAction is what we did to get rewarding results
                GoodState[NumStates]=CurState
                ActionToTake[NumStates]=NewAction
                ConfidenceLevel[NumStates]=1
                NumStates++
            endif
        endif
    return

Figure 3.

The code in Figure 3 begins by reading the line sensors and storing them in the variable CurState. It then searches the robot’s memory to see if that state has been memorized some time in the past. The robot’s memory is composed of three arrays: GoodState[], ActionToTake[], and ConfidenceLevel[].

The GoodState[] array holds the robot’s views of the world (the line sensor data) that it has determined to be important. The corresponding element in the array ActionToTake[] identifies what action the robot should take when this line sensor configuration is detected.

The final array ConfidenceLevel[] keeps track of how well this memory is working. The use of these arrays will become clearer as we proceed.
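For readers working outside RobotBASIC, the three arrays can be pictured as parallel lists that share an index. The following is a minimal Python sketch of that layout, not the authors' code; the helper names find_memory and remember are our own, and the stored pattern and action are made-up values.

```python
# Parallel lists mirroring GoodState[], ActionToTake[], and ConfidenceLevel[].
good_state = []        # sensor patterns worth remembering
action_to_take = []    # action number paired with each pattern
confidence_level = []  # how well each memory has been working


def find_memory(cur_state):
    """Return the index of cur_state in memory, or -1 if it is not stored."""
    for p, state in enumerate(good_state):
        if state == cur_state:
            return p
    return -1


def remember(cur_state, action):
    """Store a new memory with a starting confidence of 1, as Figure 3 does."""
    good_state.append(cur_state)
    action_to_take.append(action)
    confidence_level.append(1)


remember(0b100, 3)           # hypothetical: pattern 4 was seen and action 3 worked
print(find_memory(0b100))    # 0
print(find_memory(0b001))    # -1
```

Because entry p of each list describes the same memory, a single index found by the search is enough to retrieve the action and its confidence.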

Referring back to Figure 3, a for-loop is next used to search GoodState[] to see if it contains the current state of the line sensors. If it does, the variable InMemory is set to TRUE, and the value of the corresponding element of ActionToTake[] is used to compute a subroutine name which is then called to cause the desired action to take place.

Most computer languages do not have the ability to gosub to a variable name, so if you are not using RobotBASIC, this area of the code could be implemented using if statements or a switch-case construct.
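As one possible alternative to a computed gosub, a lookup table keyed by action number does the same job. The sketch below is in Python and is only an illustration: LogRobot is a hypothetical stand-in that records commands rather than moving anything, and the turn amounts are the ones listed later in Figure 4.

```python
class LogRobot:
    """Hypothetical stand-in for a robot; it only records the commands it gets."""

    def __init__(self):
        self.log = []

    def forward(self, steps):
        self.log.append(("forward", steps))

    def turn(self, degrees):
        self.log.append(("turn", degrees))


def make_action(turn_degrees):
    """Build an action that moves forward one step, then turns."""
    def action(robot):
        robot.forward(1)
        robot.turn(turn_degrees)
    return action


# Action numbers 1-6 with the turn amounts from Figure 4.
ACTIONS = {1: make_action(-1), 2: make_action(1),
           3: make_action(-3), 4: make_action(3),
           5: make_action(-6), 6: make_action(6)}


def perform(robot, action_number):
    """Look the action up by number instead of computing a subroutine name."""
    ACTIONS[action_number](robot)


robot = LogRobot()
perform(robot, 3)
print(robot.log)   # [('forward', 1), ('turn', -3)]
```

Adding or replacing a behavior then only means changing one entry in the table.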

After the action is performed, the program must decide if a rewarding result occurred. As discussed earlier, there are two criteria for this evaluation. If the line sensors indicate that the center sensor detects the line, then the variable Reward is set to TRUE. The second condition tests to see if the new state of the sensors (the state resulting from the action) is in the robot’s memory.

Basically, these two conditions mean the memorized movement will be deemed to produce a rewarding result when either a line is detected by the center sensor, or any situation is detected that is associated with seeing the line.
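The center-sensor test in Figure 3, NewState&2, is a bitwise AND that isolates one bit of the sensor reading. A small Python sketch of that idea follows. The function names are ours, and the sample readings are arbitrary values chosen only to exercise the test.

```python
CENTER_MASK = 2  # the bit Figure 3 tests with NewState&2


def center_sees_line(state):
    """True when the center-sensor bit is set in the reading."""
    return (state & CENTER_MASK) != 0


def set_bits(state):
    """List the individual sensor bits that are set in a reading."""
    return [1 << i for i in range(state.bit_length()) if state & (1 << i)]


print(center_sees_line(6))   # True  (binary 110 includes the center bit)
print(center_sees_line(5))   # False (binary 101 does not)
print(set_bits(6))           # [2, 4]
```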

Every time a memorized action causes a rewarding result, the confidence level associated with this action is increased, but not beyond some preset maximum. If the action does not produce a rewarding result, the confidence level is decreased. If it decreases to zero, then that memory condition is removed from the arrays. This forces the robot to discard situations that do not consistently work, which effectively allows the robot to forget old habits and learn new ones when its environment changes.
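The strengthen-or-forget rule can be sketched in a few lines of Python. This is an illustrative translation rather than the authors' code: the function name update_memory is our own, the cap of 3 comes from the `<3` test in Figure 3, and the sample memories are invented.

```python
MAX_CONFIDENCE = 3  # cap taken from the "<3" test in Figure 3


def update_memory(good_state, action_to_take, confidence_level, p, reward):
    """Strengthen memory p after a reward; otherwise weaken it.

    A memory whose confidence falls to zero is deleted from all three lists.
    Returns True if the memory was deleted.
    """
    if reward:
        if confidence_level[p] < MAX_CONFIDENCE:
            confidence_level[p] += 1
        return False
    confidence_level[p] -= 1
    if confidence_level[p] == 0:
        del good_state[p], action_to_take[p], confidence_level[p]
        return True
    return False


states, actions, conf = [4, 1], [3, 2], [1, 3]
update_memory(states, actions, conf, 1, True)    # already at the cap, unchanged
update_memory(states, actions, conf, 0, False)   # drops to 0 and is removed
print(states, actions, conf)   # [1] [2] [3]
```

Raising or lowering MAX_CONFIDENCE changes how many consecutive failures a well-established memory can survive before it is forgotten.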

Back to Figure 3. If the current state of the line sensors is not found in the memory, then the robot performs a random action. When the action is complete, the robot must re-examine the line sensors to determine if the action produced a result that should be rewarded (again using the two criteria previously mentioned).

If either of these criteria is met, then the most recent action is stored in memory along with the environmental conditions that initiated the action. Allowing both the actual detection of the line by the center sensor as well as conditions associated with that detection to be considered good is a powerful concept that effectively allows the robot to memorize a sequence of actions to accomplish a goal — even though no programming was specifically created in this regard. This principle is very important, so let’s summarize the situation.

If the robot performs an action that allows it to detect the line with the center sensor, then that action and its associated line sensor state are stored in memory. This allows the robot to perform the same action in the future if that state is seen again. Furthermore, if the robot ever performs a random action that causes it to experience a state already stored in memory (a state associated with finding the line), then that action and its initiating state are also saved so the action can be performed in the future.
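To see how this chaining builds a sequence, it can help to walk through the rule with the random choices replaced by fixed ones. The Python sketch below does that. It is a simplified illustration, not the authors' program: memory is a dictionary rather than three arrays, the function name try_new_action is ours, and the sensor readings and action numbers are invented.

```python
CENTER_MASK = 2  # same bit tested by NewState&2 in Figure 3


def try_new_action(cur_state, action, new_state, memory):
    """Mimic the "try something new" branch of Figure 3.

    memory maps a sensor state to [action, confidence]. The pairing is
    stored only when the outcome is rewarding. Returns the reward flag.
    """
    reward = bool(new_state & CENTER_MASK) or new_state in memory
    if reward and cur_state not in memory:
        memory[cur_state] = [action, 1]
    return reward


memory = {}
# From reading 4, action 3 happened to put the center sensor on the line.
try_new_action(cur_state=4, action=3, new_state=2, memory=memory)
# From reading 1, action 6 only led to reading 4, but 4 is now a known good state.
try_new_action(cur_state=1, action=6, new_state=4, memory=memory)
# From reading 5, action 1 lost the line entirely, so nothing is stored.
try_new_action(cur_state=5, action=1, new_state=0, memory=memory)
print(memory)   # {4: [3, 1], 1: [6, 1]}
```

After these three steps, a robot that sees reading 1 would perform action 6, arrive at reading 4, and then perform action 3 to reach the line: a two-step sequence that was never programmed explicitly.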

The next step is to define the actions that the robot can take. These can be very simple or more complex. The more complex the choices are, the faster the robot can learn.

We can effectively determine the genetics of our robot by deciding what actions we provide for it. The six actions we initially used are shown in Figure 4.

    Action1: // easy left
        rForward 1
        rTurn -1
    return

    Action2: // easy right
        rForward 1
        rTurn 1
    return

    Action3: // medium left
        rForward 1
        rTurn -3
    return

    Action4: // medium right
        rForward 1
        rTurn 3
    return

    Action5: // Hard left
        rForward 1
        rTurn -6
    return

    Action6: // Hard right
        rForward 1
        rTurn 6
    return

Figure 4.

In each case, the robot moves forward slightly then turns either left or right by a fixed amount. Some actions cause very small turns, while others turn a significant amount. Notice that none of these actions have anything specifically to do with following a line. They could just as easily be used to hug a wall or find a ball. No matter what actions we allow, the robot will use its experiences with its environment to decide which of these actions are important, and store those in its memory.

Using the Program

Due to space limitations, we have omitted parts of the code, such as the initialization routines that draw the lines and the code associated with using buttons to alter the robot’s environment and behavior in real time. You can download the entire source code from www.robotbasic.org (see the In The News tab), as well as a free copy of RobotBASIC.

If you run the program, you will see the screen shown back in Figure 2. It has many line segments, which allow the robot to learn faster because it encounters lines more often.

At startup, the lines are all of medium width, but there are buttons at the top of the screen that allow you to change them to thin lines or thick lines, or even to a mixture of all three types. The top left corner of the screen also displays the number of memories currently in use. As the robot contends with its environment, the number of memories will increase and decrease as things are learned and forgotten.

Each time the program is started, the robot will have no ability to follow a line, but as it roams its environment it quickly learns what works and what doesn’t. Often the robot becomes reasonably adept at line following in 30 seconds or so, and it gets better (especially at acquiring the line) as time progresses.

If you run the program multiple times and look carefully at each robot’s behavior, you will see that they seem to have different personalities. Some of the robots will follow the line by staying centered as you might expect. Some though, will stay on the left side of the line while others will stay on the right. It all depends on what the robot encountered during its learning cycle and what actions it randomly took.

Once the robot has learned to follow a particular line width, press one of the buttons at the top of the screen to change the width of the lines. Sometimes the robot will be able to handle the new lines right away, but often, the actions stored in the robot’s memory will not work well with a different line width — especially on sharp turns. In such cases, the robot will quickly forget the actions that do not work and learn new ones.

Modifying the Code

Experimenting with various alternatives can be intriguing. In addition to changing the width of the lines, you could change how quickly the robot forgets (just change the maximum allowable confidence level) in order to see how that affects its ability to adapt. With a little modification, the program could even allow the robot to change its own propensity to forget based on a long-term self-evaluation of its efforts.

You could also change the nature or number of the built-in behaviors. One of the most interesting things we tried was substituting the behaviors in Figure 5 for the last two behaviors shown in Figure 4. The actions in Figure 5 give the robot an advantage over the original version.

    Action5: // hard left
        for i=1 to 10
            rForward 1
            if rFeel() then break
        next
        for i=1 to 70
            rTurn -1
            if rSense() then break
        next
    return

    Action6: // hard right
        for i=1 to 10
            rForward 1
            if rFeel() then break
        next
        for i=1 to 70
            rTurn 1
            if rSense() then break
        next
    return

Figure 5.

The robot moves forward a bit, then turns to the left or right by up to 70°, stopping the turn early if it sees the line with any of the sensors. To some extent, these new actions give the robot a very limited form of vision because the robot can look for the line rather than just performing an action and hoping it will find the line.
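The turning half of these actions amounts to a bounded search with an early exit. A rough Python sketch of that pattern is shown here. It covers only the turn, not the forward movement, and FakeRobot is a hypothetical test double that "sees" the line at a single chosen heading.

```python
class FakeRobot:
    """Hypothetical stand-in that detects the line only at line_heading."""

    def __init__(self, line_heading):
        self.heading = 0
        self.line_heading = line_heading

    def turn(self, degrees):
        self.heading += degrees

    def sense(self):
        return 1 if self.heading == self.line_heading else 0


def scan_turn(robot, direction, max_degrees=70):
    """Turn one degree at a time and stop as soon as any line sensor fires.

    direction is -1 for left or 1 for right. Returns the degrees turned.
    """
    for turned in range(1, max_degrees + 1):
        robot.turn(direction)
        if robot.sense():
            return turned
    return max_degrees


print(scan_turn(FakeRobot(-25), -1))   # 25 (line found partway through the scan)
print(scan_turn(FakeRobot(90), 1))     # 70 (line out of reach, full scan used)
```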

This ability to look around for the line is much more efficient than just turning a fixed number of degrees, and robots capable of these actions learned much more quickly. They also learned ways of following the line far different from anything we might have imagined. They often follow the line by just staying close to it (instead of being centered on it). Oddly too, these robots often shift from one side of the line to the other as they move along it, an unexpected and very unusual behavior. To make it easy for you to observe these options, the program has buttons that make the robot forget more easily or remember longer, as well as buttons that allow and disallow the vision-based actions shown in Figure 5.

Most of these buttons have no effect on the robot’s memory. When vision is added or removed, however, the memory is cleared so that the new criteria can be learned. There is also a button that displays the contents of the robot’s memory so you can scrutinize what has been learned.

Practical Considerations

Real robots could certainly employ these principles, but generally they would move much slower than the simulation and take far longer to initially learn. You could easily save the array data from the simulator though, and use it with a real robot allowing it to take advantage of everything learned by the simulator. The real robot could still adapt by continuing to learn and forget — just as the simulator does — but without having to go through the initial learning phase.
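If the learner were reimplemented in a general-purpose language, carrying its memory from one program to another could be as simple as writing the three arrays to a file. The Python sketch below shows one way this might look. The JSON layout and the function names are our assumptions, the memory contents are made up, and RobotBASIC's own file commands are not shown.

```python
import json
import os
import tempfile


def save_memory(path, good_state, action_to_take, confidence_level):
    """Write the three parallel lists to a JSON file."""
    data = {"GoodState": good_state,
            "ActionToTake": action_to_take,
            "ConfidenceLevel": confidence_level}
    with open(path, "w") as f:
        json.dump(data, f)


def load_memory(path):
    """Read the lists back in the same order they were saved."""
    with open(path) as f:
        data = json.load(f)
    return data["GoodState"], data["ActionToTake"], data["ConfidenceLevel"]


# Round trip through a temporary file, using made-up memory contents.
path = os.path.join(tempfile.mkdtemp(), "memory.json")
save_memory(path, [4, 1], [3, 6], [3, 2])
restored = load_memory(path)
print(restored)   # ([4, 1], [3, 6], [3, 2])
```

A robot started from a restored memory would still raise and lower the confidence values as it runs, so it keeps adapting after the transfer.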

The program could easily be adapted to allow the robot to learn different things, such as how to hug a wall or find a ball, by giving the robot new criteria for determining when the goal has been satisfied. Of course, changing the nature of the goal might mean you need to add or change the sensors your robot has. A more advanced program might have multiple goals, allowing the robot to deal with a much more complex environment.

Even though these techniques are unconventional, we have shown them to work exceedingly well, and we encourage others to experiment with them to see how they might be used with real robots.
