Thumb-Shaped Sensor Offers a Novel Way to Develop Touch Sensing in Robotics

By Sonali Roy

Researchers at the Max Planck Institute for Intelligent Systems in Germany have engineered a robust, thumb-shaped soft haptic sensor that uses computer vision and a deep neural network to improve touch sensing in robotics. When the finger is touched, the system constructs a 3D force map from the visible deformations of its flexible outer shell. The scientists named the sensor ‘Insight.’

Conventional haptic sensors typically integrate many small sensing units in a grid across a flat or curved surface. This approach requires many sensing elements and a large amount of wiring.

Such systems are hard to make robust because both the sensing elements and the wires sit close to the contact surface, according to Huanbo Sun, a final-year Ph.D. student at the Max Planck Institute for Intelligent Systems in Germany who worked on the project.

Huanbo Sun. (Photo courtesy of Mr. Wolfram Scheible.)

A “Skin Game”

The human body is covered with highly sensitive skin that provides feedback about its interactions with the environment. This capability motivated the researchers to develop the sensor.

They wanted to give robots a similar sense of touch. As Sun stated, “The human body is covered with skin that is very sensitive and provides feedback about interaction with the environment. We want to give robots also this ability.”

Dexterous robotic manipulation requires high-fidelity touch sensing over three-dimensional sensing surfaces. Most tactile sensors in the field have a flat, two-dimensional geometry, whereas a 3D sensing surface lets a robot feel contact on all sides of a body part, just as human skin does.

As Sun points out, “A 3D sensing surface can enable robots to feel contact on all sides of their body parts [like human skin] which will let them work better in messy real world environments.”

Sensors available commercially and in research labs have not been widely adopted in robotics, in part because of their low spatial accuracy and their inability to sense shear forces.

Sun says, “Beyond the shape of their sensing region, sensors that are currently available both commercially and in research labs have other limitations that prevent their widespread application in the field of robotics, including low spatial accuracy, an inability to sense shear forces, complex fabrication processes, and/or low durability for long-term use.” He continues, “Commercially available sensors (e.g., SynTouch BioTac) tend to be expensive, delicate, have a limited sensing surface, and output readings that are difficult to interpret.” The researchers therefore set out to overcome the limitations of existing sensors.

Sun states, “So we were motivated to propose a solution to this challenge: a new sensor design that offers accurate normal and shear force sensation across a 3D surface while being cheap, robust, and easy to fabricate. Our article in Nature Machine Intelligence is our first paper on this approach to tactile sensing.”

Inspiration for the Researchers

Vision-based touch sensing offers a way around several of these problems. Sun describes, “Among different sensor design options, vision-based haptic sensors are a new family of solutions, typically using an internal camera that views the soft contact surface from within.”

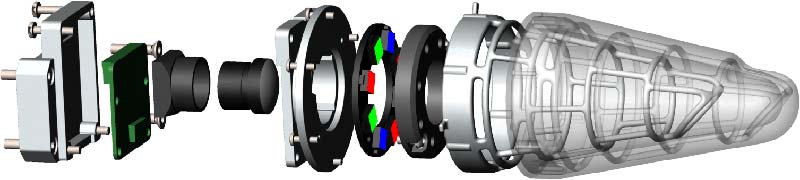

Insight uses a high-resolution camera with a colorful LED ring to observe the internal surface of a silver-colored (transparent in figure) elastomer supported by a hollow metal skeleton.

Modern cameras make this approach practical, as Sun confirms: “The cameras available nowadays have high resolution, good quality, high robustness, wide availability, and a low price. They can capture simultaneous data across a large area, which is very useful for creating a tactile sensor for robots.”

He continues, “But you have to figure out an accurate way to transform the image data into tactile contact measurements.”

Why a Thumb-shaped Sensor?

Taking inspiration from the human hand, the researchers believe that robots need good touch sensing to interact with surrounding objects, especially for fingertip manipulation. Sun shares, “We think robots need a good sense of touch to interact with objects around them.”

He further adds, “We are particularly interested in fingertip manipulation tasks like picking up small objects, inserting a key into a lock, or handing a cup of coffee to a human. These kinds of tasks require high resolution haptic sensing similar to the human fingertip.”

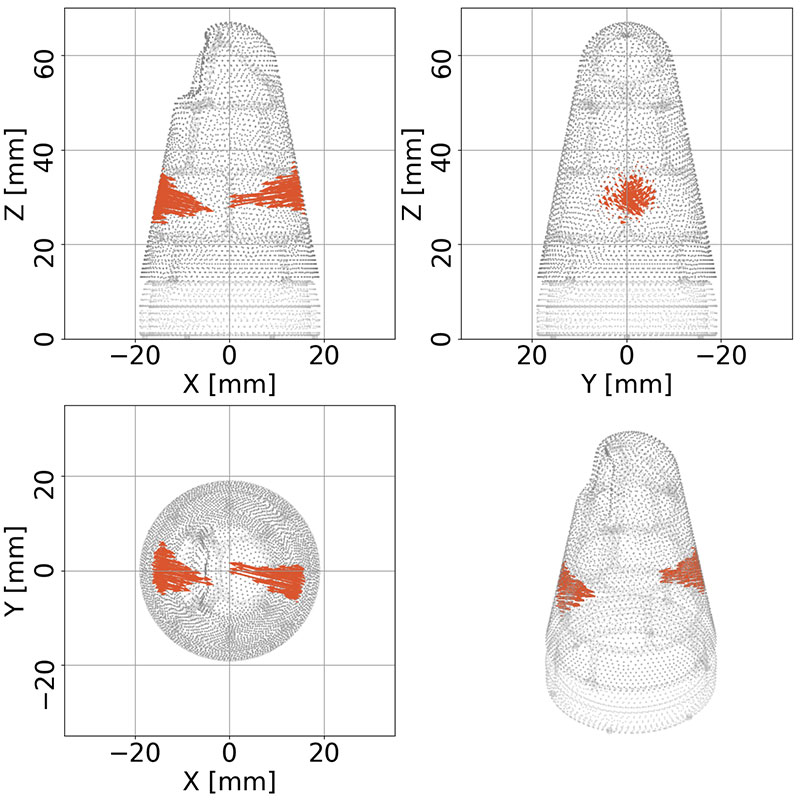

Researchers (Huanbo Sun, Katherine J. Kuchenbecker, and Georg Martius) from the Max Planck Institute for Intelligent Systems invented a soft thumb-sized vision-based haptic sensor called Insight to deliver 3D high-resolution force sensing information. The figure shows what Insight’s camera sees when it’s touched by a human hand.

Many robots also have curved, cylindrical fingers and other body segments that resemble those of humans, and the thumb shape was chosen to show that the approach works on such surfaces. Sun explains, “It’s also important to know that many robots have curved cylindrically shaped fingers and other body segments similar to humans. Thus, we decided to create a thumb-shaped sensor to illustrate that our approach works well at creating a curved 3D sensing surface, which few other sensors can achieve.”

In size and shape, Insight takes after a human thumb, but it doesn’t have any joints.

Materials Used to Design & Develop the Sensor

To keep costs low, the team built Insight from widely available commercial components. “The camera and the LED ring are commercial products with a low cost and broad availability. The sensing surface is constructed with a thin aluminum skeleton over-molded by a soft elastomer layer; together they are similar to tent poles (the skeleton) covered by a fabric tent (the elastomer). The soft elastomer (EcoFlex 00-30) is used to sense light contact with high sensitivity in all directions,” Sun said.

The aluminum skeleton is 3D printed so that it is light yet strong enough to hold the soft elastomer’s overall shape and withstand high forces. According to Sun, the elastomer is mixed with aluminum powder and aluminum flakes to give it the right optical properties for the imaging system: neither too dark nor too reflective, and shielded from external lighting interference.

Structure of the Sensor Including the Hardware & Software Design

In designing the sensor, the researchers had to balance structural integrity against sensitivity: the finger needed to withstand strong forces and still feel a light touch. They achieved this by fusing the soft elastomer and the metal skeleton into a single outer shell. The imaging and lighting design mattered as well. Sun explained that the camera’s field of view (FOV) was chosen so that it sees as much of the sensing surface as possible from the inside, and the team designed the light sources and the reflective properties of the sensing surface so that the camera image stays ‘neither too dark nor too bright.’

The interior of the soft sensing surface has fingerprint-like ridges that make small deformations more visible to the camera.

How the System Operates

The sensor comprises a miniature camera, an LED ring, and a soft-stiff hybrid sensing surface. Sun adds, “The hybrid structure of Insight’s soft elastomer shell with a stiff metal skeleton inside ensures high sensitivity and robustness. Colored LED light is projected on the inner side of the sensing surface, and the camera looks through the ring of lights to see the hybrid surface.”

When an object contacts the outside of the sensor, the camera sees the changes caused by the deformation. A trained machine-learning model interprets each camera image as a force map, which describes the distribution of force vectors (magnitude and direction) over the entire 3D sensing surface.
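To make the idea of a force map concrete, the sketch below assumes a force map is a list of surface points, each paired with a 3D force vector (units assumed to be millimeters and newtons). It shows two simple reductions a robot controller might apply: summing the vectors gives the net contact force, and a magnitude-weighted average of the points gives an approximate contact location. The data layout and the function names are hypothetical, not Insight’s actual output format.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical layout: one (surface point, force vector) pair per surface sample.
ForceMap = List[Tuple[Vec3, Vec3]]


def net_force(force_map: ForceMap) -> Vec3:
    """Sum the per-point force vectors into a single resultant force."""
    return tuple(sum(f[k] for _, f in force_map) for k in range(3))


def contact_location(force_map: ForceMap) -> Optional[Vec3]:
    """Average the surface points, weighted by local force magnitude.

    Returns None when no force is present (no contact).
    """
    weights = [math.sqrt(sum(c * c for c in f)) for _, f in force_map]
    total = sum(weights)
    if total == 0.0:
        return None
    return tuple(
        sum(w * p[k] for w, (p, _) in zip(weights, force_map)) / total
        for k in range(3)
    )


# Example: two loaded points on the surface, one pressed three times harder.
example: ForceMap = [
    ((0.0, 0.0, 10.0), (0.0, 0.0, -3.0)),
    ((0.0, 2.0, 10.0), (0.0, 0.0, -1.0)),
    ((5.0, 5.0, 10.0), (0.0, 0.0, 0.0)),  # unloaded point
]
print(net_force(example))         # (0.0, 0.0, -4.0)
print(contact_location(example))  # (0.0, 0.5, 10.0)
```

A real force map from a sensor like this would contain many more surface points, but the same reductions apply unchanged.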

Camera Position

Sun says that the camera is the essential sensing unit that detects the deformation of the sensing surface. Because the camera is hidden inside the finger, outside light does not affect it, so the entire system is mechanically robust and works independently of external conditions. Sun further explained that haptic sensors must interact with other objects, often under strong forces applied from the outside. He continued, “Sooner or later, the contact surface will probably become damaged due to material aging, high pressure cutting the material.”

The design therefore keeps the camera and lighting system away from contact entirely: only the sensing surface faces external forces. Sun says, “Our design separates the camera and lighting system from the vulnerable sensing surface. Contact can only happen on the sensing surface, so the camera and lighting system are always safe.”

If contact damages the sensing surface, only that part needs to be replaced. “We only need to replace the sensing surface at a low cost if it becomes broken from contact, and that can be done very quickly,” explains Sun.

Constructing the 3D Force Map

The finger constructs the 3D force map from the visible deformation of its flexible outer shell using a machine-learning pipeline built around a deep neural network.

First, the researchers built a test bed with five degrees of freedom that presses a small, hard, 4 mm spherical indenter into the sensor at locations across its outer sensing surface. A force/torque sensor mounted between the test bed and the indenter measures the contact force in all directions, both normal and shear.
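The force/torque sensor reports a single 3D force vector; separating it into normal and shear parts requires the surface normal at the contact point. The sketch below shows that standard vector projection, assuming the outward surface normal at the contact is known (for example from the sensor’s geometry; this is our assumption, not a detail from the article). With an outward normal, a force pressing into the surface has a negative normal component.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def split_normal_shear(force: Vec3, outward_normal: Vec3) -> Tuple[float, Vec3]:
    """Project a contact force onto the surface normal.

    Returns (signed normal component, shear vector tangent to the surface).
    """
    length = math.sqrt(dot(outward_normal, outward_normal))
    n = tuple(c / length for c in outward_normal)  # unit normal
    f_n = dot(force, n)
    shear = tuple(f - f_n * c for f, c in zip(force, n))  # remove normal part
    return f_n, shear


# Example: the indenter pushes 2 N into a surface whose normal points along +z
# while dragging 1 N sideways along +x.
f_n, shear = split_normal_shear((1.0, 0.0, -2.0), (0.0, 0.0, 2.0))
print(f_n, shear)  # -2.0 (1.0, 0.0, 0.0)
```

The shear vector is by construction perpendicular to the normal, so it lies in the tangent plane of the curved sensing surface at the contact point.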

Next, the team approximated the force distribution on the surface from the recorded contact position, the size of the indenter, and the measured force vector. According to Sun, “Using this setting, we can create a dataset that comprises visible deformation images of the surface under contact from the camera, the position of contact on the surface from the test bed coordinate, the force strength of the contact from the force/torque sensor, and the approximated force distribution map of the contact.”
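The article does not spell out how the force distribution was approximated, so what follows is only a plausible sketch under our own assumptions: the measured force vector is spread over nearby surface points with Gaussian weights whose width equals the indenter radius (2 mm, assuming the 4 mm figure is a diameter), and the weights are normalized so the distributed vectors add back up to the measured force. The `Sample` record mirrors the four items Sun lists; its field names and the `approximate_force_map` helper are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Any, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    """One training example (hypothetical field names)."""
    image: Any                # camera frame showing the deformed surface
    contact_position: Vec3    # from the test bed's coordinates
    force: Vec3               # from the force/torque sensor
    force_map: List[Vec3]     # approximated force vector at each surface point


def approximate_force_map(
    points: Sequence[Vec3],
    contact: Vec3,
    force: Vec3,
    indenter_radius: float = 2.0,  # mm, assuming a 4 mm diameter indenter
    cutoff: float = 3.0,           # ignore points beyond 3 radii
) -> List[Vec3]:
    """Spread one measured force vector over nearby surface points.

    Gaussian weights fall off with distance from the contact; normalizing them
    makes the per-point vectors sum back to the measured force.
    """
    weights = []
    for p in points:
        d = math.dist(p, contact)
        if d > cutoff * indenter_radius:
            weights.append(0.0)
        else:
            weights.append(math.exp(-0.5 * (d / indenter_radius) ** 2))
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no surface points near the contact position")
    return [tuple(w / total * f for f in force) for w in weights]


# Usage: a straight row of surface points 1 mm apart, touched at x = 10 mm.
points = [(float(x), 0.0, 0.0) for x in range(21)]
fmap = approximate_force_map(points, (10.0, 0.0, 0.0), (0.5, 0.0, -2.0))
sample = Sample(None, (10.0, 0.0, 0.0), (0.5, 0.0, -2.0), fmap)
print(round(sum(v[2] for v in sample.force_map), 6))  # -2.0
```

Normalizing the weights is the key design choice here: whatever spreading function is used, the label stays consistent with what the force/torque sensor actually measured.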

The researchers then trained a machine-learning model on this dataset, with visible deformations as input and the 3D force map as output. Sun goes on to say, “We then train a machine-learning model using the dataset (input: visible deformations; output: 3D force map) end-to-end. The trained machine-learning model takes camera views and outputs a force map at run time.”
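Insight’s model is a deep convolutional network trained on camera images, well beyond the scope of a short example. The skeleton of supervised end-to-end training is the same at any scale, though: predict, measure the error against the recorded target, and adjust the parameters along the negative gradient. The sketch below reduces that loop to a linear model trained by full-batch gradient descent on mean squared error; the two-number “deformation features,” the scalar “force” targets, and the learning rate are invented for illustration.

```python
from typing import List, Sequence


def train_linear(
    xs: Sequence[Sequence[float]],
    ys: Sequence[float],
    lr: float = 0.1,
    steps: int = 500,
) -> List[float]:
    """Fit y ≈ w·x by full-batch gradient descent on mean squared error."""
    n = len(xs)
    w = [0.0] * len(xs[0])
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            error = sum(wi * xi for wi, xi in zip(w, x)) - y  # prediction - target
            for k, xk in enumerate(x):
                grad[k] += 2.0 * error * xk / n  # accumulate d(MSE)/dw_k
        w = [wi - lr * g for wi, g in zip(w, grad)]  # step downhill
    return w


# Hypothetical training pairs: two "deformation features" -> one "force" value.
# The targets were generated from force = 2 * f1 - 1 * f2.
features = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
forces = [2.0, -1.0, 1.0, 3.0]
weights = train_linear(features, forces)
print([round(w, 6) for w in weights])  # [2.0, -1.0]
```

A deep network replaces the linear model with many convolutional layers and the scalar target with a full force map, and the gradients come from automatic differentiation rather than a hand-written formula, but the predict, compare, update cycle is unchanged.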

When Insight is touched by two fingers, the hidden camera captures the deformation of the soft sensing surface, which is lit internally by a ring of colored LEDs. The sensor is powered by artificial intelligence: a machine-learning model is trained to transform the camera image into the distribution of contact force vectors over the entire surface.

How Does the Sensor Learn?

The first step in teaching a sensor like Insight is to collect reference data with an automated test bed. According to Sun, this is similar to a CNC mill, but with a force/torque sensor and an indenter in place of the milling tool. The test bed touches the sensor at many points on its surface, recording the corresponding force vector and camera image for each contact. The researchers use this data to train a deep neural network that takes a camera image as input and outputs the corresponding force map. Once trained, the model performs this translation with high accuracy.

Sun adds, “One important design choice is the type of neural network. Our purely convolutional network is able to infer multiple contacts in different zones even though the training data contains only single contact events.”
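One intuition for Sun’s point: a convolutional layer applies the same small kernel at every position, so its response to a contact depends only on the local neighborhood, not on where the contact sits or on what happens far away. The toy 1D example below (a single convolution plus ReLU with an arbitrary kernel, not the authors’ architecture) shows both properties: shifting a contact shifts the output, and two well-separated contacts produce the sum of their individual responses. The helper names are hypothetical, and because ReLU is nonlinear, the sum property holds only when the responses do not overlap.

```python
from typing import List, Sequence


def conv1d_same(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Zero-padded 'same'-size cross-correlation, as in a CNN layer (odd kernel)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def layer(signal: Sequence[float]) -> List[float]:
    """One convolution followed by ReLU; the kernel values are arbitrary."""
    kernel = [0.25, 0.5, 0.25]
    return [max(0.0, v) for v in conv1d_same(signal, kernel)]


def contact(position: int, size: int = 16) -> List[float]:
    """A single 'contact' as a spike in an otherwise flat 1D signal."""
    s = [0.0] * size
    s[position] = 1.0
    return s


a, b = contact(3), contact(11)
both = [x + y for x, y in zip(a, b)]

# Translation equivariance: moving the contact by 8 moves the response by 8.
assert layer(b)[8:] == layer(a)[:8]
# Separated contacts: the two-contact response equals the sum of single responses.
assert layer(both) == [x + y for x, y in zip(layer(a), layer(b))]
print("both properties hold")
```

A real network stacks many such layers over 2D images, which widens each output’s receptive field, but the same weight sharing is what lets single-contact training data carry over to multi-contact inputs.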

The Significance of the Study

Compared to other haptic sensors available on the market, Insight’s current design offers highly accurate force sensation across a 3D surface while being cheap, robust, and easy to fabricate. “Directly outputting forces (rather than deformations, impedances, or voltages, like many other sensors) means that a robotic system can directly use the measurements to physically interact with and understand objects around it, like measuring the coefficient of friction of a surface or the hardness of a fruit. These properties can facilitate our sensor’s widespread application and advancement in the field,” Sun noted.
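As an example of using force outputs directly, a robot could estimate a surface’s coefficient of friction by sliding the fingertip across it and comparing shear force to normal force. The sketch below assumes the simple Coulomb friction model (shear magnitude ≈ μ × normal force while sliding) and that the normal and shear components have already been separated; it is an illustration, not a procedure described by the researchers, and the function name is hypothetical.

```python
import math
from statistics import median
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def friction_coefficient(samples: Sequence[Tuple[float, Vec3]]) -> float:
    """Estimate Coulomb friction μ from (normal force, shear vector) samples.

    The normal force is the positive pressing magnitude. Samples should be
    taken while the finger is sliding; the median of |shear| / normal makes
    the estimate less sensitive to outliers.
    """
    ratios = [
        math.sqrt(sum(c * c for c in shear)) / normal
        for normal, shear in samples
        if normal > 0.0
    ]
    if not ratios:
        raise ValueError("need at least one sample with positive normal force")
    return median(ratios)


sliding = [
    (10.0, (3.0, 4.0, 0.0)),   # |shear| = 5 N at 10 N normal
    (20.0, (6.0, 8.0, 0.0)),   # |shear| = 10 N at 20 N normal
    (15.0, (7.5, 0.0, 0.0)),   # |shear| = 7.5 N at 15 N normal
]
print(friction_coefficient(sliding))  # 0.5
```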

Applications for Insight

Insight can be integrated into a robotic hand, and a hand equipped with such sensors should be able to manipulate objects in ways similar to a human hand. Insight could be applied chiefly in three areas (industry, agriculture, and care services) and in other fields of human-robot interaction, with tasks such as assembling products, harvesting fruit, and serving food and drinks.

There are also opportunities to cover other robot body parts, such as limbs, the chest, and feet, though the researchers have not yet attempted this. Sun explains that Insight could also be used in healthcare, for example in devices that distinguish the hardness of different tissues such as blood vessels, muscles, and tumors. The sensor could also be integrated into endoscopy systems to enhance what physicians can perceive about a patient’s tissue remotely. It could also be applied in prosthetics.

Because Insight is built from inexpensive, off-the-shelf components, its essential parts are easy to replace or adapt as each application requires. According to Sun, “Since our sensor design is made from low-cost commercial components, it is easy to adapt the essential parts to fit other application scenarios.”

What is the Future of the Study?

The design approach behind Insight can be adapted to sensors of many shapes and specifications for a wide range of robotic applications. The machine-learning architecture, training procedure, and inference process are general and could be reused in other sensor designs.

The researchers see several design parameters that could be tailored for different applications, such as the camera’s field of view, the arrangement of the light sources, and the composition of the elastomer. Beyond force maps, the team is interested in building a smaller version of the sensor and enabling it to sense other tactile signals such as temperature and vibration.

Sun is optimistic that the team will make sensors like this in other shapes and sizes. He adds, “We are working on miniaturizing the design and increasing the update frequency.” SV

All figures courtesy of Huanbo Sun@MPI-IS.

Resources

1. Study Reference: Sun, H., Kuchenbecker, K.J. & Martius, G. A soft thumb-sized vision-based sensor with accurate all-round force perception. Nat Mach Intell 4, 135–145 (2022); https://doi.org/10.1038/s42256-021-00439-3.
