My Cloud Native Journey

While I am not new to robotics, I still have a lot to learn.

It all began when I bought my first Raspberry Pi 3 for my birthday in 2016. I learned the basics of Linux and used it as a desktop for a whole week. Then I learned how to blink an LED, and then multiple LEDs. Things changed in 2018, when I bought my first robotics kit, the CamJam EduKit 3, from The Pi Hut website.

First, I used the kit's cardboard box as the robot's chassis and ran initial tests with the Pi 3. Then, to reduce power consumption and improve stability, I switched to my Pi Zero W and a dedicated chassis. I ran basic motor tests and then added keyboard control.

I later moved to a bigger platform, the Devastator Tank Mobile Platform by DFRobot. I ran the same tests and gave my robot some LEDs for eyes. I named my robot Torvalds. In 2020, I built another robot and called it Linus.

My two robots: Linus (left) and Torvalds (right).

At first, I used Linus with the Google AIY Voice Kit V2. Later, I added a face and an arm. In 2021, I used guizero to control two robots at once through a GUI, and then I learned the basics of Flask. What I didn't realize was that things were about to change for the better.

The Journey Begins

My journey into Cloud Native computing started when I was accepted into the Udacity SUSE Scholarship Challenge. During the course, I learned the basics of Cloud Native computing. I had already learned how to use the Flask web framework to control an LED, then three LEDs, a servo, a motor, and finally a robot. Things changed when it dawned on me that the scholarship course was using Flask. Since I already knew the basics of Flask, this was the perfect opportunity to expand my knowledge and apply what I learned to my maker projects.

Trial and Error

First, I learned the basics of Docker (an open platform for developing, shipping, and running applications). I learned how it differs from virtual machines: a container packages an application and its dependencies while sharing the host's operating system kernel, so multiple containers can run on one host without each one needing a full virtual machine. So, I took my LED control app and built my first Docker image. I ran the image and realized I was getting somewhere. However, I also ran into several issues.

First, I had to make the application available to other hosts, which I had not enabled. Next, I had to make sure remote GPIO access was enabled on my Pi before I built my Docker image. After that, I tested running multiple LEDs, a servo, a motor, a robot, and then multiple robots.
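Both fixes can be sketched as a single `docker run` command. This is a minimal sketch, not the author's exact setup: it assumes gpiozero's pigpio pin factory is used for remote GPIO (configured through the `GPIOZERO_PIN_FACTORY` and `PIGPIO_ADDR` environment variables), Flask's default port 5000, and a hypothetical image name `led-control:latest` and Pi address `192.168.1.3`. The Python below only builds and prints the command.

```python
import shlex


def docker_run_args(image: str, pi_host: str, port: int = 5000) -> list:
    """Build a `docker run` command for a Flask GPIO app (image name is hypothetical)."""
    return [
        "docker", "run", "--rm", "-d",
        # Publish the Flask port so other hosts on the network can reach it.
        # Inside the container, Flask must also listen on 0.0.0.0
        # (e.g. app.run(host="0.0.0.0")), not only on 127.0.0.1.
        "-p", f"{port}:{port}",
        # Assumption: gpiozero drives the Pi's pins over the network via pigpio.
        "-e", "GPIOZERO_PIN_FACTORY=pigpio",
        "-e", f"PIGPIO_ADDR={pi_host}",
        image,
    ]


if __name__ == "__main__":
    args = docker_run_args("led-control:latest", "192.168.1.3")
    print(shlex.join(args))
    # To actually launch the container: subprocess.run(args, check=True)
```

On the Pi itself, the pigpio daemon has to be running with remote access enabled (for example through the Remote GPIO option in raspi-config), which matches the order of steps described above: enable remote GPIO first, then build and run the image.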

Of course, I ran into some code syntax errors, which I quickly resolved, and after that my tests were a success. I then learned how to push my images to Docker Hub. It was at that moment I realized that my journey into Cloud Native computing had started.

Next, I learned about Kubernetes: how to install it and how to use its various commands.

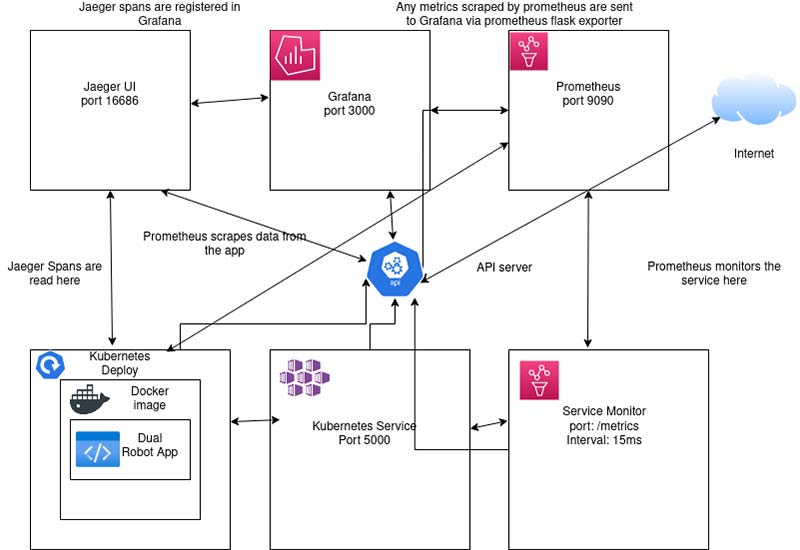

Kubernetes diagram for the app.

For those not familiar with Kubernetes, it’s a container orchestration platform that deploys and manages containerized applications across a cluster of machines. Kubernetes comes in many flavors, such as Rancher, OpenShift, and minikube. However, I used K3s and Kind for my tests, as they are easier for beginners to get started with.

First, I set up a virtual machine to learn how to use Kubernetes, in this case K3s. Later, once I was comfortable, I switched to Kind on my PC. I worked through the exercises and felt confident enough to build my very first cluster. I used the test images I had created with Docker and deployed them to my first clusters.

I had some issues, specifically with command syntax, and I also had to learn how to debug errors. I also had to learn concepts like pods, ConfigMaps, Helm charts, manifests, declarative manifests, and imperative commands. After more trial and error, I successfully deployed my application to my first cluster and learned how to run it from inside the cluster. I knew at this point I was making progress, but I didn't realize where it would take me.
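To illustrate the difference between an imperative command and a declarative manifest, here is a minimal sketch that builds a Kubernetes Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts on standard input. The app name `led-app` and image `example/led-app:latest` are hypothetical; the author's actual manifests are not shown in the article.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1, port: int = 5000) -> dict:
    """Return a Kubernetes Deployment manifest as a plain dictionary.

    Rough imperative equivalent:
        kubectl create deployment led-app --image=example/led-app:latest
    """
    labels = {"app": name}  # the selector must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Usage: python deployment.py | kubectl apply -f -
    print(json.dumps(deployment_manifest("led-app", "example/led-app:latest"), indent=2))
```

The declarative form keeps the desired state in a file that can be versioned and re-applied, while an imperative command leaves no record beyond shell history. That property is what GitOps tools such as Argo CD build on.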

I also learned how to use GitHub Actions to build a new image and push it to Docker Hub whenever I pushed changes to my repository. I was quite apprehensive, as I had trouble getting it to work the first time. Eventually, I got it working properly.

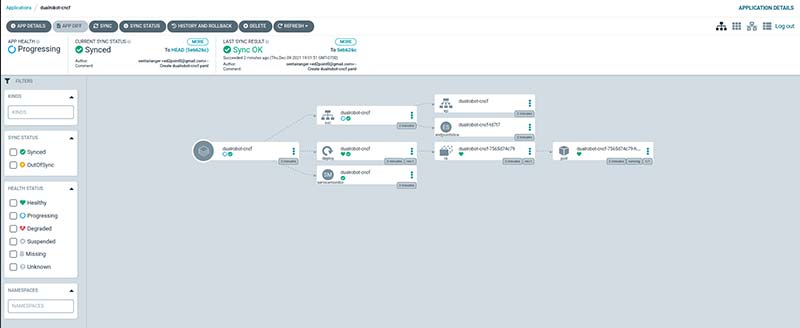

Next, I learned to use Argo CD to deploy the changes produced by GitHub Actions.

Argo CD deployment sample.
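Argo CD is typically pointed at a Git repository through an `Application` resource, and it keeps the cluster in sync with the manifests stored there. The sketch below builds one as a Python dictionary and prints it as JSON. The repository URL, path, and target namespace are hypothetical placeholders, and enabling automated sync is an assumption rather than something the article specifies.

```python
import json


def argocd_application(name: str, repo_url: str, path: str, namespace: str = "default") -> dict:
    """Return an Argo CD Application that syncs manifests from a Git repository."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Application resources live in the namespace Argo CD runs in (conventionally "argocd").
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,       # hypothetical repository holding the manifests
                "targetRevision": "HEAD",  # track the default branch
                "path": path,              # directory of manifests inside the repo
            },
            "destination": {
                "server": "https://kubernetes.default.svc",  # the cluster Argo CD runs in
                "namespace": namespace,
            },
            # Assumption: sync automatically, prune deleted resources, and undo manual drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argocd_application("dual-robot", "https://github.com/example/dual-robot.git", "k8s")
    print(json.dumps(app, indent=2))
```

One design point worth noting: Argo CD reacts to changes in Git. If a workflow only pushes a new `:latest` image without changing any manifest, Argo CD sees nothing to sync, so pipelines often have the workflow commit an updated image tag instead.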

After I successfully deployed my application with Argo CD, I had no idea how to run it within the cluster, until I realized I could use the same command I had used with Kubernetes.

I was then able to run my application. I made some changes to my code as a test, and thankfully the application still ran. Just as I had with Docker, I tested a servo, a motor, and two robots with Argo CD. It was then and there that I was inspired to create my very first Cloud Native project.

First Success

My first success came when I built a dual robot Flask application. The workflow was as follows: first, I packaged the application with Docker; then I deployed it with Kubernetes; next, I used GitHub Actions to push code changes to Docker Hub; and finally, I used Argo CD to deploy the application to the cluster. After a few more tests, the application was a success.

Detours

After my first successful Cloud Native project, I knew I had broken new ground. Things changed again when I was accepted into the Cloud Native Application Architecture Nanodegree program. After completing the first project, I learned about new tools such as Kafka, gRPC, and FastAPI. I attempted to use them and had some success with FastAPI and Kafka. However, gRPC was a dead end, and I realized I didn't need it. So, I moved on to learning Prometheus, Grafana, and Jaeger.

My First Dashboard

Learning Prometheus, Grafana, and Jaeger was quite the headache.

Sample span in Jaeger UI.

Getting these services running on my cluster was cumbersome and exhausting. Only with the help of others (looking at you, Audrey!) was I able to get past several hurdles.

After that, it was smooth sailing. For those not up to speed: Prometheus scrapes and stores metrics from applications; Jaeger collects traces that show how requests flow through an application; and Grafana takes the metrics gathered by Prometheus and displays them on a dashboard.

Grafana dashboard sample.

After learning how to use these services in my classroom project, I decided to try them out on my own with the applications I had already built. However, I shelved those projects until I finished my Nanodegree in November 2021. After that, I ran more tests and eventually built my first robot monitoring dashboard.

Unlike in my previous project, I used declarative manifests to deploy my application, and I learned all about YAML indentation and how to allow Prometheus to scrape my application. To accomplish this, I had to add Service, Deployment, and ServiceMonitor manifests. The ServiceMonitor is required for Prometheus to scrape the application, and the Deployment manifest also required annotations so that Prometheus could detect the application.
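How these pieces connect can be sketched as follows. This minimal example builds a Service and a ServiceMonitor as Python dictionaries and prints them as a JSON `List` for `kubectl apply -f -`. It assumes the Prometheus Operator is installed (the ServiceMonitor kind comes from it) and, hypothetically, that Prometheus was installed with the kube-prometheus-stack Helm chart under the release name `prometheus`, whose default selector only picks up ServiceMonitors carrying a matching `release` label. The `prometheus.io/*` pod annotations are a common convention that only takes effect if the Prometheus scrape configuration honors them, and the app name `robot-dashboard` is hypothetical.

```python
import json

# Hypothetical annotations for annotation-based discovery. They go under the
# Deployment's spec.template.metadata.annotations and only matter if the
# Prometheus scrape configuration is set up to honor them.
POD_ANNOTATIONS = {
    "prometheus.io/scrape": "true",
    "prometheus.io/port": "5000",
    "prometheus.io/path": "/metrics",
}


def monitoring_manifests(name: str, port: int = 5000, release: str = "prometheus") -> dict:
    """Return a Service plus a ServiceMonitor that points Prometheus at it."""
    labels = {"app": name}  # assumed to match the Deployment's pod labels
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "selector": labels,
            # The port is named so the ServiceMonitor can refer to it by name.
            "ports": [{"name": "web", "port": port, "targetPort": port}],
        },
    }
    monitor = {
        "apiVersion": "monitoring.coreos.com/v1",
        "kind": "ServiceMonitor",
        # Assumed "release" label so a kube-prometheus-stack install selects it.
        "metadata": {"name": name, "labels": {**labels, "release": release}},
        "spec": {
            "selector": {"matchLabels": labels},  # find the Service by its labels
            "endpoints": [{"port": "web", "path": "/metrics", "interval": "15s"}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service, monitor]}


if __name__ == "__main__":
    # Usage: python monitoring.py | kubectl apply -f -
    print(json.dumps(monitoring_manifests("robot-dashboard"), indent=2))
```

The chain is label-driven: the ServiceMonitor finds the Service through its labels, the Service finds the pods through its selector, and the named port ties the scrape endpoint to a specific container port.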

After some trial and error, I was able to deploy my robot monitoring dashboard. This was my most ambitious project at the time.

Exploring the Unknown

After completing this project, I decided to apply the same approach to other Python web frameworks, including Django and Pyramid. Thankfully, I had success with those frameworks, so I moved on to frameworks outside of Python, including Express (Node.js) and Spring Boot (Java).

After more tests, I realized that it’s possible to build a robot monitoring dashboard with any web framework, provided it supports remote GPIO access. During this time (2022, to be exact), I submitted my main project to Grafana for GrafanaCON. While I wasn't accepted, I was allowed to post my project on their official blog. I did just that, and my project is now prominently featured there.

Quest for Automation

This year, I wanted to expand my robot monitoring system, so I decided to investigate the automation platform Ansible. I got Jeff Geerling's book, Ansible for DevOps, and decided to run the test playbooks. With that, I was inspired to use Ansible on my own projects.

I realized I could use Ansible to automate both my Docker builds and my Kubernetes deployments. I took my existing project and used Ansible so that users could deploy it on their own remote hosts. However, things changed when I was introduced to Terraform.

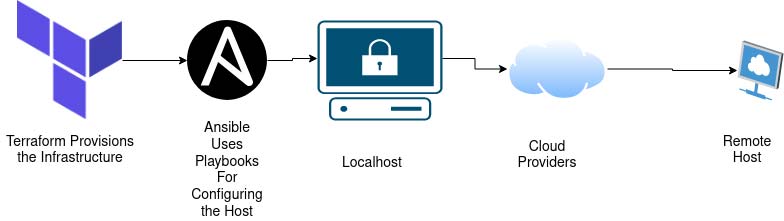

Terraform diagram.

Into the Cloud

Recently, I learned about the infrastructure provisioning platform Terraform (open source software for defining and provisioning data center infrastructure using configuration files). I decided to give it a chance and worked through the official tutorials. After succeeding with the tutorials, I decided to extend my robot monitoring system one last time with Terraform.

I used Terraform to provision my Docker builds and my Kubernetes deployments. Compared with Ansible, Terraform required only a few commands to deploy the applications.
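The "few commands" are Terraform's core workflow: `terraform init` downloads the required providers, `terraform plan` previews the changes, and `terraform apply` makes them. The sketch below wraps that sequence in Python. The working directory `./robot-monitoring` is hypothetical, saving the plan to a file named `tfplan` is a design choice rather than something the article describes, and the script only prints the commands unless `dry_run` is turned off.

```python
import shlex
import subprocess


def terraform_steps(workdir: str) -> list:
    """Return the init/plan/apply command sequence for one Terraform directory."""
    base = ["terraform", f"-chdir={workdir}"]
    return [
        base + ["init"],
        # Save the plan so that apply executes exactly what was reviewed.
        base + ["plan", "-out=tfplan"],
        # Applying a saved plan file does not prompt for confirmation.
        base + ["apply", "tfplan"],
    ]


def deploy(workdir: str, dry_run: bool = True) -> None:
    for cmd in terraform_steps(workdir):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    deploy("./robot-monitoring")
```

Tearing the same resources down again is a single `terraform destroy` in that directory.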

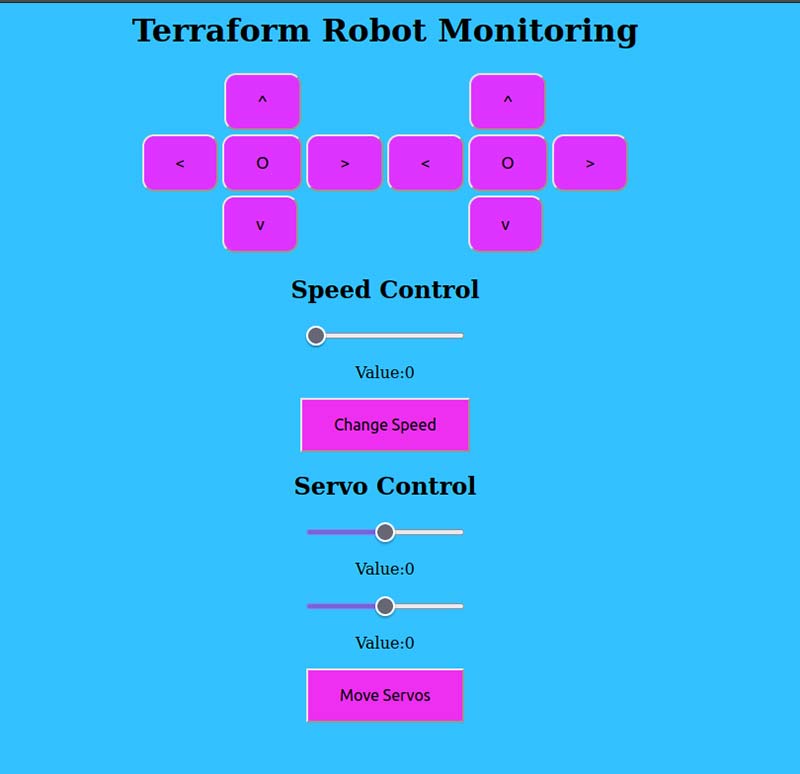

Main application.

The tests were a success, but I kept Ansible for configuration automation: I used it to install the required platforms on the individual hosts.

I also tested Terraform with AWS, Azure, and Google Cloud. At that point, I realized that everything I had learned and all the tools I had used had led to this. I finally built the project I didn't think was possible.

The Journey Ends

And with that, my Cloud Native journey has come to an end. While I will continue to learn about these platforms in depth, I have completed what I set out to do. I took my love of robotics and applied it to the Cloud Native ecosystem. I will forever be grateful to Udacity and SUSE for the opportunity. And now, a new era begins...

Downloads

202308-Peregrino.zip

What’s In The Zip?

Ansible Robot Monitoring

Dual Robot Monitoring

Flask Dual Robot

Terraform Monitoring