Dreaming of Rosie

By Alan Federman

When will we get a personal household robot?

As a baby boomer growing up in the sixties, one of my favorite Saturday morning cartoons was “The Jetsons.” Often, Mr. George Jetson would be served by his robot, Rosie, as he headed off to work in his flying car. Right now, it looks like I’ll ride in a flying car a lot sooner than I’ll be able to own a personal home robot. I was wondering why that is, and when we can expect a ‘Rosie’ to cook and serve us our morning breakfast.

Let’s consider what it would take for a robot to do a simple household maintenance task: Pick up clothes tossed around the house and put them in the washer. This is something we could delegate to five-year-old children, but for our purposes here, they would be overqualified.

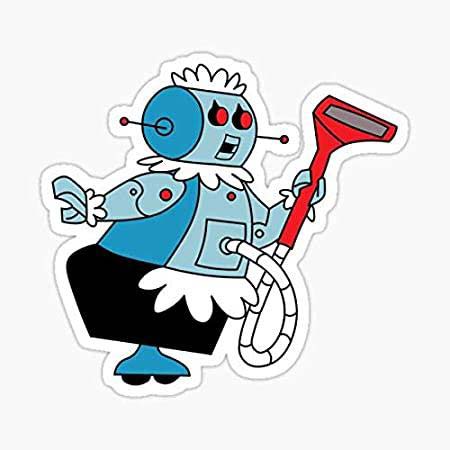

From the figure of Rosie, we can see she is fairly complicated. Her mobility resembles a unicycle’s, so stairs are out. She has two arms, but the grippers look unlikely to manipulate small items or pushbuttons.

There’s an accessory port for tools like the vacuum cleaner attachment. There is vision, speech, and ‘radio’ communication. It looks like Rosie has social awareness and can communicate both verbally and with visual cues. All in all, a very impressive list of features.

We have had several single-task ‘robots’ for quite a while. We’re all familiar with robot vacuum cleaners, and in some respects, appliances like your washer, dishwasher, and microwave are somewhat autonomous. None of these come close to taking the place of a household caretaker.

The concept is to develop a “General Purpose Helper Robot” or GoPHR that can do assorted household tasks. So, what would be needed for a more basic household robot?

- Mobility — The ability to move around the house (single floor, no steps).

- Navigation — Know the location of the rooms in the house to search for out-of-place items.

- Smart Vision — Identify clothes, distinguish between clothes and items that stay put like rugs and shoes; also deal with obstacles like coffee tables and any movable items like people and pets. (You’d want to avoid putting the kitten, shoes, or throw pillows in the washer.)

- Manipulation — Picking up and placing items.
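To make the Smart Vision requirement concrete, here is a minimal sketch of the "is this laundry?" decision, assuming some object detector has already produced labeled detections. The label sets, the `Detection` class, and the confidence threshold are all hypothetical and chosen only for illustration; a real detector would define its own class names.

```python
from dataclasses import dataclass

# Hypothetical label sets (assumptions, not from any particular detector).
LAUNDRY_LABELS = {"sock", "shirt", "towel", "pants"}
NEVER_PICK_UP = {"cat", "dog", "person", "shoe", "pillow", "rug"}


@dataclass
class Detection:
    label: str         # class name reported by the (assumed) detector
    confidence: float  # detector score between 0.0 and 1.0
    room: str          # room where the item was seen


def items_to_collect(detections, min_confidence=0.8):
    """Return only the detections that are confidently laundry."""
    selected = []
    for det in detections:
        # Anything on the "stays put" list is skipped outright.
        if det.label in NEVER_PICK_UP:
            continue
        # Unknown labels and low-confidence laundry are left alone.
        if det.label in LAUNDRY_LABELS and det.confidence >= min_confidence:
            selected.append(det)
    return selected
```

The design choice here is to err on the side of leaving things on the floor: an item is collected only when it is both on the laundry list and detected with high confidence, so the kitten stays out of the washer even if the detector is unsure.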

Also remember this is all in a unit that would retail for about twice the cost of a major appliance (about $2000-$4000) and cost a fraction of that to build in large quantities. (Remember the first ‘personal’ computers cost about $2000 in 1980 dollars.) Otherwise, there’s no incentive to manufacture and sell it.

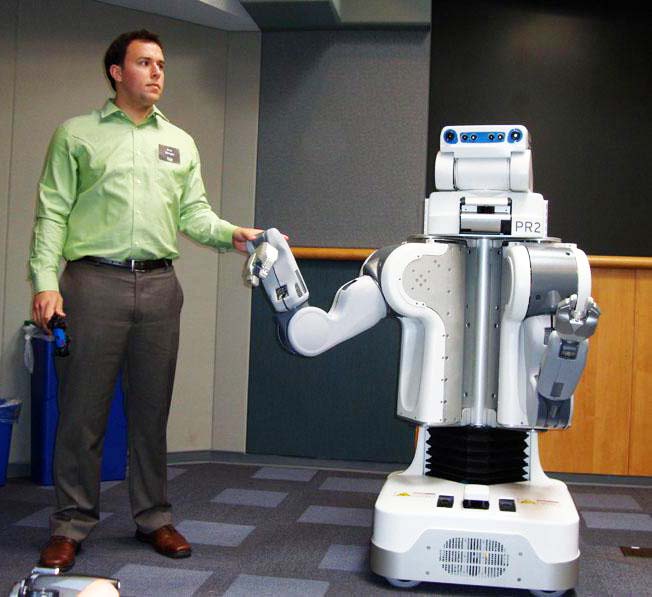

It is currently possible to build a robot that could accomplish this. Examples include the Willow Garage PR2 and the Toyota Research Institute HSR. However, these platforms are for research only and cost upwards of $200K.

Willow Garage PR2: General-purpose ‘humanoid’ research platform costing more than $200,000.

Toyota Research Institute HSR research platform.

So, we need about two orders of magnitude cost reduction to be profitable. Let’s look at the current state of the art and consider some of the reasons Rosie won’t arrive for several years.
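The "two orders of magnitude" figure can be checked with a few lines of arithmetic. This sketch uses only the numbers quoted above (a research platform at roughly $200,000 and a retail target of $2,000 to $4,000); the function name is my own.

```python
import math


def orders_of_magnitude(current_cost, target_cost):
    """Return how many powers of ten separate the two costs."""
    return math.log10(current_cost / target_cost)


research_cost = 200_000  # approximate PR2-class price quoted in the article

for retail_target in (2_000, 4_000):
    gap = orders_of_magnitude(research_cost, retail_target)
    print(f"${retail_target} target: {gap:.1f} orders of magnitude")
```

Because the build cost has to be only a fraction of the retail price, the real gap for a manufacturer is somewhat larger than this retail comparison suggests.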

Mobility

There have been major advances in motor, battery, and sensor control that have improved robot mobility. Brushless Hub Motors (BLM) weigh less and are more compact, and because of the way they are controlled, they also report wheel position, which can be used for odometry. Likewise, lithium-ion batteries are lighter and perform better than lead-acid or NiCad batteries.

Most household robot tasks would require a relatively inexpensive differential drive mobility platform, consisting of two BLM motors (similar to hoverboards). Currently, it costs about $500 to build such a platform. Here’s one area where the current state of the art is adequate and will continue to improve.
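As a rough illustration of how wheel position becomes odometry on a differential drive platform, here is a minimal dead-reckoning sketch. It assumes the motor controller reports encoder ticks per wheel; the parameter names (`ticks_per_rev`, `wheel_radius`, `wheel_base`) are hypothetical, and a real robot would also need to deal with wheel slip and sensor noise.

```python
import math


def ticks_to_distance(ticks, ticks_per_rev, wheel_radius):
    """Convert encoder ticks to distance rolled by one wheel (meters)."""
    return (ticks / ticks_per_rev) * 2.0 * math.pi * wheel_radius


def update_pose(x, y, theta, d_left, d_right, wheel_base):
    """Advance the robot pose given the distance each wheel traveled.

    x, y are in meters, theta is the heading in radians, and
    wheel_base is the distance between the two drive wheels.
    """
    d_center = (d_left + d_right) / 2.0       # distance moved by the midpoint
    d_theta = (d_right - d_left) / wheel_base  # change in heading

    # Use the heading halfway through the step as an approximation.
    mid_heading = theta + d_theta / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)

    # Wrap the new heading into the range -pi to pi.
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta
```

Calling `update_pose` once per control cycle with the latest wheel distances keeps a running estimate of where the robot is. The estimate drifts over time, which is one reason a household robot would still need vision or laser ranging for navigation.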

Vision

Likewise, there have been advances in robot vision. Depth cameras like the Asus Xtion and Intel RealSense, and open-source software like OpenCV and YOLO, combined with faster processing based on GPU acceleration, allow the identification of people and objects. However, in some ways, the technology is not fully mature.

As an example, a robot will ‘see’ a portrait of a person or an image of a person on TV and ‘assume’ it’s a live person who is stationary. Likewise, a robot may see a stuffed toy dog and not ‘know’ whether it’s more like a teddy bear or a real dog. The cost of cameras is coming down though, and the OAK-D Lite type cameras are inexpensive and sophisticated.

Manipulation

Designing a robot manipulator and gripper that can both pick up a sock tossed on the floor and open a closed door is pretty challenging. There are industrial robot arms capable of both coarse and fine manipulation, but these aren’t affordable at present. While it might be possible to build a door opener or a gripper for picking things up from the floor, combining both capabilities in one device would be problematic.

A potential work-around would be something like the Snap-on tools concept, where the robot could attach a different accessory from its charging station. Just the capability to open the various doors and cabinets in a typical kitchen is remarkable. I once counted over 20 different kinds of knobs and dials to manipulate in just my kitchen alone. This is the one area in which I’m uncertain how long the development will take. Currently, robot arms and grippers alone cost more than $2,000.

Navigation and General AI

A lot of progress is being made here in the cost of both sensors and CPUs. The sensors include laser rangefinders, sonars, and IMUs. ARM-based processors, including ARM64-based offerings like the Raspberry Pi 4 and Nvidia Jetson Nano, offer low cost, low power consumption, and, in some cases, GPU acceleration. This makes many tasks that previously required desk-sized computers workable on a consumer-sized robot.

As the speed and connectivity of computing continue to increase, both onboard and cloud-based services for speech recognition and generation, visual perception, and general AI will become more capable. These platforms and the software that runs on them are the area that will continue to see the most progress, and since a lot of development is open source, they are relatively inexpensive to implement.

So, in conclusion, Rosie probably won’t be able to sarcastically serve us breakfast in the next five years. A robot able to pick the clothes up and put them in the laundry room basket may be available by 2030. I’m hedging my bets and hoping to see the first Rosie prototypes arriving in the next 5-6 years … hopefully. SV

https://robotsguide.com/robots/pr2

https://robotsguide.com/robots/hsr

https://www.youtube.com/watch?v=0OferXS4jIw; OAK-D lite camera
