The Turing Test: From Inception to Passing

By Tim Hood

June 7, 2014 was the 60th anniversary of the death of a personal hero of mine: the logician, mathematician, cryptanalyst, computer scientist, and philosopher Alan Turing (1912-1954).


Turing is often hailed as the father of modern computing. He played a pivotal role in breaking Germany's "Enigma" code — an effort that some historians say brought an early end to World War II.

In 1952, Turing was prosecuted for homosexuality, which was still a criminal offense in the UK at that time. As an alternative to a prison sentence, he chose to have oestrogen injections (chemical castration) to reduce his libido. It has been suggested that this treatment, along with his sense of unfair persecution and the fact that he lost his job due to his conviction, led to his suicide from cyanide poisoning. A half-eaten apple was found by the side of his bed, and it is believed he injected it with the poison before taking a bite. Urban legend has it that this is why the Apple logo came to be an apple with a bite taken out. However, Steve Jobs's biographer, Walter Isaacson, points out that the Apple logo had a bite taken out of it simply because, without this, it looked more like a cherry than an apple.

In 2012, many peers and scientists — including acclaimed academics such as Professor Stephen Hawking and Professor Noel Sharkey — pushed for an official pardon for Alan Turing during a high profile Internet campaign. An official pardon was granted by the Queen on December 24, 2013.

During the Second World War, Turing worked in Hut 8 at Bletchley Park, Britain's code-breaking center. He led a team that developed a number of techniques for breaking the German cipher. He and Gordon Welchman devised an early electro-mechanical computer known as the “bombe” to automate the process of cracking the German Enigma code. It has been estimated that the work carried out at Bletchley Park shortened the war by two to four years, and that without this valuable ability to penetrate the communications of the Axis powers, the outcome of the war would have been uncertain.

Bletchley Park has been preserved as a museum and memorial to all those who worked there during the war. It is well worth a visit if you ever happen to be in England.

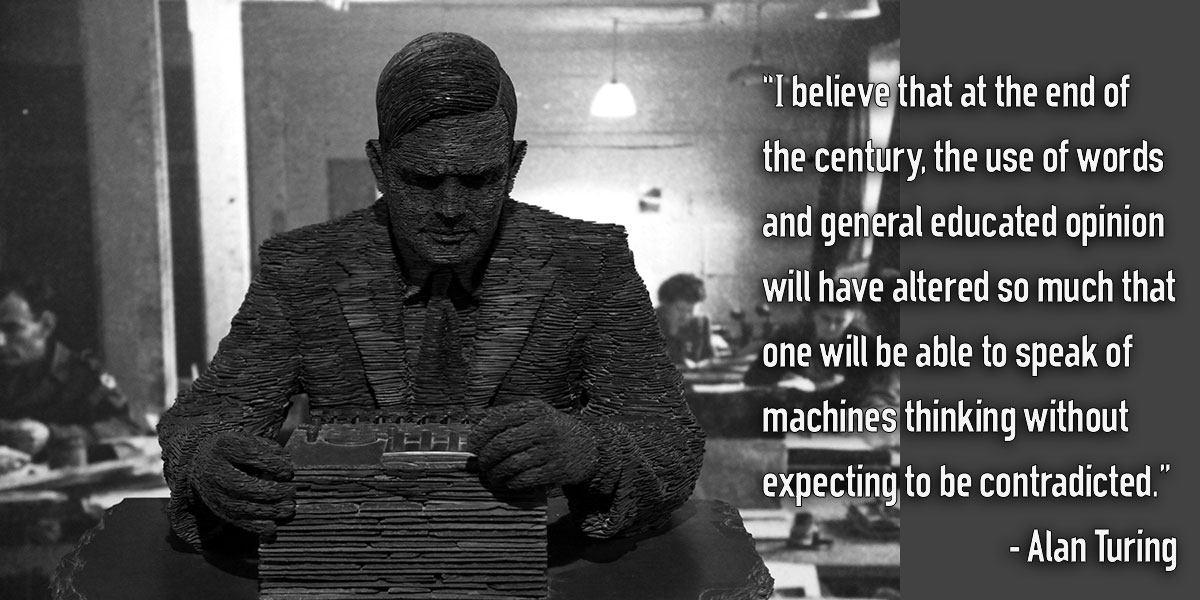

This is a photo of the Alan Turing Memorial in Sackville Park in Manchester, England. Turing is sitting on a bench in a central position in the park. On Turing's left is the University of Manchester; on his right is Canal Street.

The statue was unveiled on Turing's birthday, June 23, 2001. It was conceived by Richard Humphry, a barrister from Stockport, who set up the Alan Turing Memorial Fund in order to raise the necessary funds.

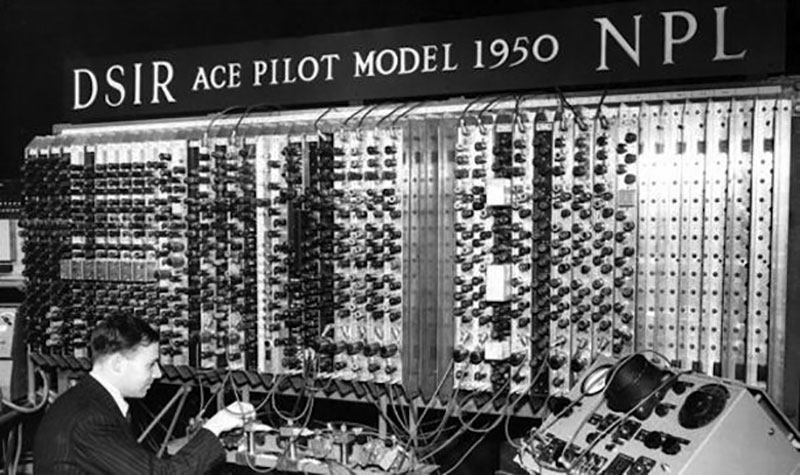

In 1936, prior to the outbreak of the war, Turing had written a paper proposing a Turing machine: a hypothetical device that manipulates symbols on a strip of tape according to a set of predefined rules. While not intended to be a practical device, it allowed computer scientists to understand the limitations of mechanical computation. Turing went on to propose a universal machine: an apparatus that would be able to simulate any other Turing machine.
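To make the idea of "symbols on a tape plus a table of rules" concrete, here is a minimal sketch of a Turing machine simulator in Python. It is my own illustration rather than anything from Turing's paper: the function name, the rule format, the `_` blank symbol, and the binary-increment example are all assumptions chosen for brevity.

```python
from collections import defaultdict

BLANK = "_"  # assumed blank symbol for unwritten tape cells


def run_turing_machine(rules, tape, state="start", halt_state="halt", max_steps=10_000):
    """Run a one-tape machine and return the non-blank part of the tape.

    rules maps (state, symbol) -> (symbol_to_write, head_move, next_state),
    where head_move is -1 (left) or +1 (right).
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # tape grows in both directions
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol_to_write, head_move, state = rules[(state, cells[head])]
        cells[head] = symbol_to_write
        head += head_move
        steps += 1
    used = [i for i, s in cells.items() if s != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),    # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),    # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", -1, "halt"),     # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),   # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT_RULES, "1011"))
```

The point of the sketch is that the simulator itself never changes; only the rule table does. Feeding one fixed program a description of another machine is, loosely speaking, the idea behind the universal machine.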

Alongside Alonzo Church, Turing provided a more mathematical definition which stated that his machines capture the informal notion of effective methods in logic and mathematics, and provide a precise definition of an algorithm or mechanical procedure. The study of these complex properties is still extremely relevant to computer science today. What was novel about Turing's approach was that it demonstrated that a single machine could perform the computational tasks of any other machine; in other words, it was capable of calculating anything that was calculable.

Many consider this model to be the origin of the stored program computer, as used by John von Neumann in 1946 for the electronic computing machine architecture that now bears his name. Von Neumann later acknowledged this himself. Because of his work in this area, Turing is considered by many to be the father of the modern computer.

Turing's Automatic Computing Engine

Turing is probably best remembered by roboticists for his paper titled Computing Machinery and Intelligence, which was published in October 1950 and proposed an experiment that became known as the Turing Test. In an attempt to define a standard at which we could classify a computer or artificial intelligence (AI) as being truly intelligent, Turing proposed that if a human participant communicating with a computer was unable to tell whether they were communicating with a real human or a computer, then that computer would pass the Turing Test and be considered truly “intelligent.” You have probably taken a reverse Turing Test many times yourself without realizing it, whenever you have been asked to complete a CAPTCHA on a website to show that you are indeed a human and not a robot.

A CAPTCHA (an acronym for "Completely Automated Public Turing test to tell Computers and Humans Apart") is a type of challenge-response test used in computing to determine whether or not the user is human. The term was coined in 2000 by Luis von Ahn, Manuel Blum, Nicholas J. Hopper of Carnegie Mellon University, and John Langford of IBM.

In practice, this means a human judge converses in turn with an artificial intelligence and with a human through a text interface (keyboard, mouse, and screen), and then has to decide which was the human and which was the computer. It is worth noting that the test is not concerned with whether or not the answers given to the questions are correct; it is only interested in whether or not the artificial intelligence's behavior is indistinguishable from that of a human.
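One way to picture how such a test might be scored is the short Python sketch below. It is only an illustration: the function names are my own, and the 30 percent pass threshold is an assumption included as a parameter, not a figure this article or any test organizer is being quoted on. Notice that each verdict records only whether a judge took the machine for a human; whether the machine's answers were correct never enters into it.

```python
def fraction_fooled(verdicts):
    """verdicts: list of booleans, True where a judge labelled the machine as human."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    return sum(verdicts) / len(verdicts)


def passes(verdicts, threshold=0.30):
    """Return True if the share of fooled judges meets the (assumed) threshold."""
    return fraction_fooled(verdicts) >= threshold


# Hypothetical panel: 30 judges, 10 of whom took the machine for a human.
panel = [True] * 10 + [False] * 20
print(round(fraction_fooled(panel), 3), passes(panel))
```

Changing the threshold, the number of judges, or the length of each conversation changes how hard the test is, which is why the details of the protocol matter so much when a "pass" is announced.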

Turing believed that no computer would be able to pass the test without human-level intelligence because all human intelligence is embodied within language. Many critics argue that it isn't a true test of human intelligence since it doesn't incorporate visual and auditory information. However, in my opinion, this view fails to understand how the human brain actually works.

It was the late Dr. James Gouge (a.k.a., Dr. Jim) who first pointed out to me that all information is represented in the same manner in the brain. Our sense organs merely convert stimuli in our environment (the information to be processed), whether electro-magnetic waves in the visible part of the light spectrum or sound waves, into neural signals; this was the basis of his machine intelligence. Whether the information is visual or auditory is immaterial, as long as it is converted into the same data type. Therefore, adding a visual and auditory element to the Turing Test wouldn't actually make it any more difficult. This is a view also shared by Ray Kurzweil (American author, computer scientist, inventor, futurist, and a director of engineering at Google).

The University of Reading held Turing Test 2014 at the Royal Society, London on June 7, 2014. Reports in the media soon followed that an AI developed in Russia and known as Eugene Goostman had passed the test for the first time in history by fooling 33 percent of the judges into believing it was human. However, some have argued that Eugene Goostman was effectively cheating by pretending to be a 13-year-old Ukrainian boy.

Anil Seth, Professor of Cognitive and Computational Neuroscience at the University of Sussex, commented on a Radio 4 broadcast that "Eugene is gaming the system by pretending to be a non-native speaker of English and a 13 year old child." He went on to argue that a clumsy reply, or not knowing an answer, could seem plausible given the character the AI was playing.

Other critics have highlighted the fact that Turing didn't specify a time limit on the test, whereas those judging Eugene Goostman only had five minutes. That was certainly not a sufficient amount of time to decide whether it was human or AI. A larger number of judges, a longer period of time, and more probing questions would have made it much more difficult for the AI to fool the judges.

Some critics have gone so far as to say these sorts of Turing Tests do a grave disservice to AI by assuming that intelligence is just about the disembodied abstract exchange of thoughts that can be reduced to a text interface. During the radio broadcast, it was necessary to have a person read out some of Eugene Goostman's comments. Having spoken to Eugene myself through a text interface (which you can do as well by following the link included with this article), I noticed that actually hearing him speak made him appear more 'alive' to me.

I have already argued that the use of auditory or visual information wouldn't actually make the Turing Test any harder; in the case of making Eugene speak, this would simply mean employing text-to-speech synthesis. It is possible, though, that an AI using text-to-speech synthesis in the future would influence a judge’s perception, making it more likely to be judged human than one which merely employs a text interface.

Artificial intelligence is far more than just being able to respond to questions. It also involves: behaving in the world; constructing complex perceptual scenes; and deciding to do the right thing at the right time. These are all areas where there is currently lots of work being undertaken in AI — which has nothing to do with chat bots.

The value of the 2014 event at the Royal Society and the following publicity was that it increased public awareness and attention to AI, though the degree of overstatement involved probably was not that helpful. While a benchmark is important in assessing the development of AI, some argue that the Turing Test is not the gold standard others see it as because — in practice — its application varies a lot.

Although there is scope for AI passing the Turing Test, we are not there yet, and in reality there will most likely be no clear point at which we will suddenly have AI. It will more likely be a process of our technology becoming more and more automated, gradually taking certain tasks away from the humans involved.

One of our most advanced AIs is IBM's supercomputer, Watson: a question answering computing system built to apply advanced natural language processing, information retrieval, knowledge representation, automated reasoning, and machine learning technologies to the field of open-domain question answering. It is probably best known for beating two former winners on the American quiz show, Jeopardy!

Watson contains hundreds of sub-systems, each of which considers millions of competing hypotheses. A similar analysis using a biological brain would take centuries, compared to the three seconds it takes Watson. Because of this level of complexity, no single person fully understands how Watson works in its entirety.

Although Watson has natural language processing capability, he/she/it(?) doesn't engage in conversation, which would require the additional ability to track all of the earlier statements made by each participant in the conversation. This ability would be needed for Watson to pass the Turing Test but, in fact, it isn't dissimilar to what Watson is doing already, as it has read millions of pages of material on the Internet, including stories which are sequential in a manner similar to a conversation. This is how it acquires its extensive bank of knowledge and information.

In his book, The Age of Spiritual Machines, Kurzweil predicts that an AI will pass the Turing Test by 2029. However, to achieve this, AIs attempting to fool humans into believing they are human will have to dumb themselves down. Watson — although currently limited in its language capacity — has far more knowledge than the majority of humans (which would quickly give the game away). While Watson has more knowledge, humans currently still have more conceptual levels. This explains our abilities to perform cognitive tasks that Watson can't.

As critics of the Turing Test have highlighted, artificial intelligence is about far more than merely being able to converse with a human and fooling that person into believing the AI is an individual.

The human brain is physically limited in its processing power by the size of the skull. To increase our processing power, we need more hardware (a larger brain). However, we can't increase the size of this until either evolution endows us with a bigger cranium or we find an artificial means to replicate this. AIs, on the other hand, do not have such limitations. Most of you will be well aware of Moore's Law, which states that the number of transistors in a processor doubles every two years, meaning that processing capability increases at a roughly exponential rate.
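To get a feel for what "doubles every two years" means in practice, the doubling rule can be written as a growth factor of 2^(years / 2). The Python sketch below simply evaluates that expression; the function name is my own, and the two-year doubling period is the idealized figure quoted above rather than a measured one.

```python
def transistor_growth_factor(years, doubling_period_years=2.0):
    """How many times larger the transistor count is after `years`,
    assuming a clean doubling every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


for years in (2, 10, 20):
    print(years, "years ->", transistor_growth_factor(years), "x")
```

Under this idealized rule, a decade gives a 32-fold increase and two decades give a 1,024-fold increase, which is the kind of compounding no biological cranium can match.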

I believe it is clear that once we do achieve human-like intelligence in an artificial form, the level of intelligence won't remain human. It will quickly “artificially” evolve beyond this level. We are already starting to see AI being used in this manner.

Watson has been reprogrammed and is being trialled as a clinical decision support system; in other words, helping doctors to make more accurate diagnoses. The advantage here is clear: Diagnosticians can only retain a limited amount of information in their brain, and although they may try their best to stay abreast of current medical research, they are not going to be able to match the knowledge base that a system like Watson can acquire.

The Imitation Game is a 2014 British-American historical thriller film about British mathematician, logician, cryptanalyst, and pioneering computer scientist, Alan Turing, who was a key figure in cracking Nazi Germany's Enigma code, an achievement that helped the Allies win World War II. It stars Benedict Cumberbatch as Turing, and is directed by Morten Tyldum with a screenplay by Graham Moore. The movie is based on the biography, Alan Turing: The Enigma, by Andrew Hodges.

Another example of artificial intelligence that is being used to accomplish tasks that humans can't or that take humans an excessive amount of time is Wolfram Alpha. Whereas Google is a search engine, Alpha is an answer engine; that is to say it doesn't just search for the answer, it will actually calculate it for you. It holds around 10 trillion bytes of data, which includes the vast majority of known scientific methods.

Whenever you ask Apple's Siri a question that requires a calculation, it defers to Wolfram Alpha. Currently, Alpha is working with a 90% success rate, and its failure rate is decreasing exponentially, with a half-life of around 18 months.
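If the 18-month figure is read as a half-life for the failure rate (that reading is my assumption), the implied trend is easy to project. The sketch below starts from the 10% failure rate implied by a 90% success rate and halves it every 18 months; the function name and the time points are purely illustrative, and nothing here is a measured result.

```python
def failure_rate(months_from_now, initial_failure=0.10, half_life_months=18.0):
    """Projected failure rate, assuming it halves every `half_life_months`."""
    return initial_failure * 0.5 ** (months_from_now / half_life_months)


for months in (0, 18, 36, 54):
    print(f"{months:>2} months: success rate about {1 - failure_rate(months):.2%}")
```

On these assumptions, the success rate would climb from 90% to about 95% after 18 months and to about 97.5% after three years, with each further step closing half of the remaining gap.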

As all of the disciplines of science — no longer just those traditionally considered "hard" — come to rely more and more upon big data, the need for these systems to do the number crunching for us will only increase. While humans are relatively successful at discovering and compiling scientific and mathematical methods, computers are far better at implementing them than humans.

However, there is one important scientific model that still eludes us: the algorithm that replicates the processes within the human brain.

The question still remains whether AIs will ever be able to fully replicate the human thought processes or whether they will form a different sort of intelligence, one that we will employ to undertake tasks that the human brain isn't suited to.
