Bots in Brief

Table This Discussion

A new home robot from Labrador Systems is essentially a semi-autonomous mobile table, which is poised to have a huge impact on people who could really use exactly that.

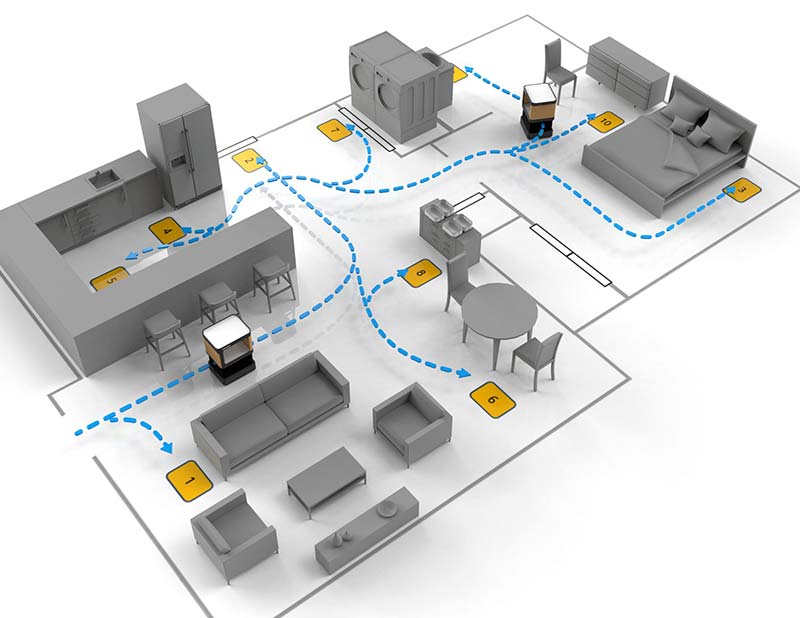

The Labrador Retriever takes the form of a small rolling table with a shelf that’s adjustable in height. It’s able to navigate through doorways and maneuver throughout a house, bringing goods to people who have mobility difficulties. This could be laundry, a meal tray, medication, or even a package delivered to the door by a courier service.

For many older adults as well as adults with disabilities, reliance on mobility aids (like canes or walkers) means that moving around while carrying things in hands or arms is difficult and dangerous, and for some, moving at all can be painful or exhausting. This can necessitate getting in-home help, or even having to leave your home completely. This is the problem that Labrador wants to solve (or at least mitigate) with its mobile home robot.

For people with limited mobility, the Labrador robot offers a place to store and transport heavy items that might be impossible for those people to carry or move on their own. Items that are used regularly can live semi-permanently in the storage area in the middle deck of the robot. The robot can handle up to 25 pounds (11 kilograms) in total of whatever you can fit on it. One version of the robot is at a fixed height, while a slightly more expensive version can raise and lower the height by about one-third of a meter and do some other clever stuff.

Autonomy is fairly straightforward. Labrador uses 3D visual simultaneous localization and mapping, or SLAM, combined with depth sensors and bumpers on all sides to navigate through home environments, managing tight spaces, ADA-compliant floor transitions, and low lighting conditions, although you’ll have to keep clutter and cords away from the robot’s path and possibly tape down troublesome carpet edges.

When you first get a robot, a Labrador representative will (remotely) drive it around to build a map and to set up the “bus stops” where the robot can be sent. This greatly simplifies control, since the end user can then just speak a destination into a smartphone app or a voice assistant and the robot will make its way there. So, zero training time is required.

The robot can also be scheduled to be at specific spots at specific times. All-day battery life is achievable since the robot spends most of its time being a table and not moving. It will even charge the user’s small electronics on its lower deck.
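The "bus stop" idea can be sketched in a few lines of code. This is a minimal illustration only, not Labrador's software: the stop names, coordinates, schedule, and function names below are all hypothetical. It shows why named stops make control simple, since a spoken phrase or a clock time only has to resolve to one of a handful of known places.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BusStop:
    """A named place on the home map (coordinates in meters, hypothetical)."""
    name: str
    x_m: float
    y_m: float


# Hypothetical stops that would be set up during the initial mapping session.
STOPS = {
    stop.name: stop
    for stop in [
        BusStop("kitchen", 1.0, 4.5),
        BusStop("sofa", 6.2, 2.0),
        BusStop("front door", 0.5, 0.5),
    ]
}

# Hypothetical daily schedule, sorted by zero-padded 24-hour time.
SCHEDULE = [("08:00", "kitchen"), ("12:30", "sofa"), ("18:00", "kitchen")]


def resolve_destination(spoken: str) -> Optional[BusStop]:
    """Match a spoken or typed phrase to a known stop, ignoring case and extra spaces."""
    key = " ".join(spoken.lower().split())
    return STOPS.get(key)


def stop_due_at(hhmm: str) -> Optional[BusStop]:
    """Return the most recent scheduled stop at or before the given HH:MM time."""
    due = [name for time, name in SCHEDULE if time <= hhmm]
    return STOPS[due[-1]] if due else None
```

Anything that does not match a known stop simply returns `None`, so the robot never has to interpret an arbitrary location.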

Labrador doesn’t expect to be in full production until the second half of 2023. The company is taking fully refundable $250 reservations now, though. If you’re lucky (and live in the right place), you might be able to get access to a beta unit earlier than that. Of course, 2023 seems like a long time to wait, but it’ll be absolutely worth it if Labrador can get this right.

ANYmal Goes ANYwhere

ANYmal is a quadruped created by the Robotic Systems Lab at ETH Zurich. It has apparently found the perfect testing ground in Mount Etzel in Switzerland. Between slippery ground, high steps, and trails full of roots, the 3,602-foot mountain is rife with challenges for a legged robot.

Difficult terrains are particularly challenging for robots. They struggle to combine the visual perception of their environment with the proprioception of their legs. For humans, this is a task that we don’t even think about.

The information that robots gather about their environment with sensors can often be incomplete or ambiguous. The robot might see tall grass, a shallow puddle, or snow, and determine it’s an insurmountable obstacle. Alternatively, the robot might not pick up on those obstacles at all if the sensors are obscured by weather conditions or poor lighting.

ANYmal, ETH Zurich’s quadruped robot on Mount Etzel. Photo courtesy of ETH Zurich.

“That’s why robots like ANYmal have to be able to decide for themselves when to trust the visual perception of their environment and move forward briskly, and when it is better to proceed cautiously and with small steps,” Takahiro Miki, a doctoral student and lead author on a study, said. “And that’s the big challenge.”

To help ANYmal navigate environments quickly, the team used a new neural-network-based control technology developed by ETH spin-off ANYbotics. Thanks to this controller, ANYmal learned how to combine its visual perception with its proprioception. The team tested ANYmal’s ability in a virtual training camp before bringing it out into the world. On Mount Etzel, ANYmal scaled almost 400 feet (120 m) in just 31 minutes. “With this training, the robot is able to master the most difficult natural terrain without having seen it before,” ETH Zurich Professor Marco Hutter explained.

ANYmal learned how to overcome numerous obstacles, as well as when to rely more on its proprioception instead of visual perception. This way, ANYmal can tell when poor lighting or weather is distorting its perception, and navigate with its sense of touch instead.
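The trade-off described above can be illustrated with a toy example. ANYmal’s actual controller is a learned neural network, so the hand-written blend below is not the team’s method; it is only a sketch of the underlying idea, and the function names, the confidence value, and the step lengths are all assumptions made for illustration. When confidence in vision is high, the estimate follows the camera and the steps are long; when it is low, the estimate falls back on what the legs feel and the steps shorten.

```python
def _clamp01(value: float) -> float:
    """Limit a value to the range 0.0 to 1.0."""
    return min(max(value, 0.0), 1.0)


def fuse_height(visual_m: float, proprio_m: float, visual_confidence: float) -> float:
    """Blend two terrain-height estimates, weighting vision by its confidence."""
    weight = _clamp01(visual_confidence)
    return weight * visual_m + (1.0 - weight) * proprio_m


def step_length(visual_confidence: float,
                max_step_m: float = 0.30,
                min_step_m: float = 0.10) -> float:
    """Take brisk steps when vision is trusted, small cautious ones when it is not.

    The default step lengths are hypothetical values chosen for the example.
    """
    weight = _clamp01(visual_confidence)
    return min_step_m + weight * (max_step_m - min_step_m)
```

For instance, if the camera reports a 0.2-meter obstacle where the legs feel flat ground, and vision is trusted at only 25 percent (as might happen in tall grass), the blended estimate is 0.05 meters and the robot shortens its stride rather than stopping.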

Stark Reality

Meet iRonCub: a robotic preschooler equipped with four jet engines that give it the ability to fly like Iron Man. Tony Stark makes it look easy, but this is a hard, occasionally flaming, and slightly explosive problem, especially for a humanoid robot that was never designed for this sort of thing.

A team of researchers at the Italian Institute of Technology (IIT) is working toward granting humanoid robots the power of flight for work in search and rescue missions.

One of the key aims for this project is making robotic aerial manipulation more robust and energy-efficient than ever before. As explained by Daniele Pucci, head of the Artificial and Mechanical Intelligence lab at the Institute, aerial humanoid robots can be technologically, socially, and scientifically beneficial. Aerial humanoid robots like iRonCub can help improve flying robots, which are mostly quadrotors equipped with a robotic arm.

Mirror, Mirror

NASA’s James Webb Space Telescope is preparing for a three-month-long process of aligning the 18 hexagonal mirror segments that make up the telescope’s mirror. The process begins by moving each segment upwards by just 12.5 mm.

The James Webb Space Telescope is the largest telescope to ever be launched into space. With all of its mirror segments unfolded, it’s around the size of a tennis court.

NASA’s engineers had to place each hexagonal piece with extreme precision. To do so, they used a robotic arm that can move in six directions. The arm was controlled by a custom hexapod created by PI USA. The hexapod was placed at the end of the robotic arm to ensure that each piece was placed in the correct spot. “In order for the combination of mirror segments to function as a single mirror they must be placed within a few millimeters of one another, to fraction-of-a-millimeter accuracy. A human operator cannot place the mirrors that accurately, so we developed a robotic system to do the assembly,” according to NASA’s James Webb Space Telescope Program Director Eric Smith.

A custom hexapod from PI was used to place the segments of the telescope’s mirror. Photo courtesy of NASA.

Placing the pieces required two teams of engineers to work simultaneously. The first was dedicated to controlling the robotic arm and maneuvering it around the structure of the telescope. The other team worked to take measurements with lasers as the segments were placed, bolted, and glued.

“Instead of using a measuring tape, a laser is used to measure distance very precisely,” Garry Matthews, Harris Corporation’s James Webb Space Telescope’s assembly integration and test director, said. “Based off of those measurements a coordinate system is used to place each of the primary mirror segments. The engineers can move the mirror into its precise location on the telescope structure to within the thickness of a piece of paper.”

The James Webb Space Telescope was launched in December 2021. It’s designed to last in space for at least five and a half years. Ideally, however, NASA is hoping the telescope will continue operating for 10 years.

The telescope will build and expand on the work of the Hubble Space Telescope. It will orbit about one million miles from Earth to see some of the first galaxies formed in the universe.

Make Mine a Double

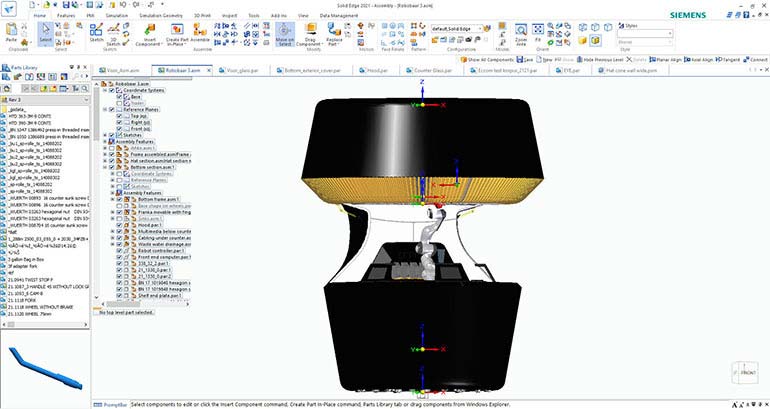

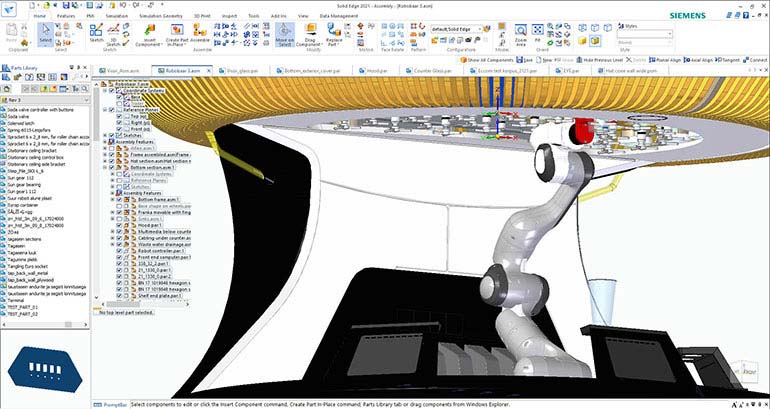

Yanu, a bartending robot, is the brainchild of Alan Adojaan, CEO and founder of YANU, an Estonia-based robotics company. It looks like something out of a science fiction movie. Standing at nearly 10 feet tall, the assembly is large, cylindrical, and sleek. On its countertop are several touchscreens that display drink choices, ranging from martinis to old fashioneds. As soon as you input your order on a screen, Yanu’s single robotic arm springs to life.

It grabs a glass and swivels 180 degrees to the ice dispenser. Next, the arm raises the glass, navigating it along a series of ingredient dispensers that hang from the ceiling. After moving through the correct combination, the arm lowers itself and hands you a finished drink.

Though this robotic feat is impressive enough, mixology is just the beginning. In addition to making drinks, Yanu (which is powered by artificial intelligence) handles credit card and mobile payments, verifies a client’s drinking age, and communicates with patrons, whether suggesting a drink or cracking a joke. Capable of making up to 100 drinks in one hour and up to 1,500 cocktails in a single load, Yanu is poised to revolutionize the food and beverage industry.
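Those two capacity figures imply a pace and a refill interval, worked out below. Both inputs are the "up to" numbers quoted above, so the results are best-case values rather than measured performance, and the variable names are simply labels chosen for this example.

```python
# Peak figures quoted in the text ("up to" values, so these are best-case inputs).
DRINKS_PER_HOUR = 100
DRINKS_PER_LOAD = 1500

# Average time available per drink at peak rate.
seconds_per_drink = 3600 / DRINKS_PER_HOUR

# How long a single load of ingredients lasts at peak rate.
hours_per_load = DRINKS_PER_LOAD / DRINKS_PER_HOUR

print(f"{seconds_per_drink:.0f} seconds per drink")
print(f"{hours_per_load:.0f} hours of peak service per load")
```

At the peak rate, that is one drink every 36 seconds and about 15 hours of continuous service before a refill.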

Despite the fact that the company’s first product is a highly sophisticated service robot, Adojaan’s background is in hospitality, not robotics. As anyone in food service or bartending can tell you, the industry has its fair share of challenges, especially now. Examples include a high staff turnover rate, labor shortages, high recurring expenses, and an emerging need for contactless food and beverage service.

“It was a constant struggle to keep the business going,” Adojaan reflects. “On an especially busy night, I realized something had to change. Soon after, I had the idea to develop a robotic solution.”

Within four years, Adojaan and his team of designers and engineers transformed Yanu from a single idea to multiple prototypes. Unlike other robotic bartenders on the market, Adojaan’s solution is compact and mobile and can be collapsed into a 20-foot shipping container for transport. As noted, Yanu can also communicate with patrons and is fully autonomous; other bartending bots are non-communicative, only semi-autonomous, and require weeks to set up. In addition to its plug-and-play nature, Yanu is cloud-based, easy to operate, clean, and refill, and comes with apps for administration, monitoring, and drink ordering.

Making such an advanced robot a reality — especially when “no one took me seriously at first,” Adojaan says — required the latest in robotics design technology and computer-aided design (CAD) software. For these reasons, Adojaan and his team designed and developed Yanu using Solid Edge®: Siemens’ ecosystem of sophisticated software tools that address all aspects of the product design and development process, including mechanical and electrical design, simulation, data management, and more.

Alan Adojaan, founder and CEO of YANU.

Whereas designing complex, intelligent robotic systems can slow the product development process, Solid Edge enabled the YANU team to get its revolutionary bartending bot to market quickly — all while overcoming many of the typical pitfalls that can affect the design process for new products.

Inching Closer

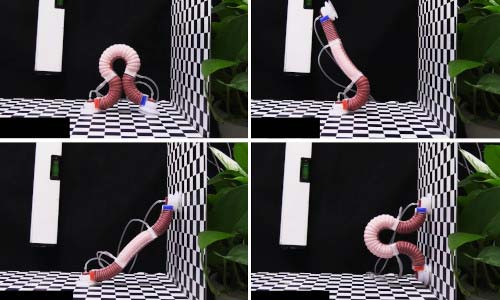

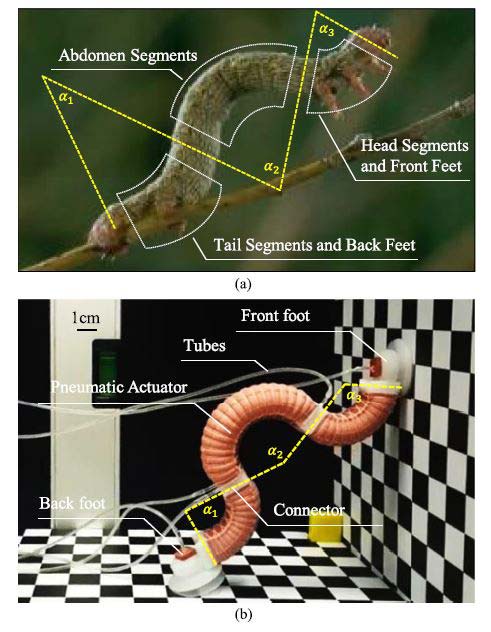

Researchers at China’s Shanghai Jiao Tong University have developed a robot that moves like an inchworm and can make its way between horizontal and vertical planes.

The robot is equipped with three fiber-reinforced pneumatic actuators to allow precise control of its tail, head, and body. It also features two pressure suckers with a double layer of silicone which work with the actuators to propel the robot forward, according to a published study. The robot can carry 500 grams on horizontal planes and 20 grams on vertical walls, and reach top speeds of 21 millimeters per second on horizontal planes and 15 millimeters per second on vertical walls.
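To put those speeds and payloads in perspective, here is a rough travel-time estimate. It is a sketch under simplifying assumptions: the robot is taken to move at its quoted top speed the whole way, and the time needed to transition from floor to wall is ignored because the text gives no figure for it. The function names and the example route are made up for illustration.

```python
# Figures quoted in the text: top speed (mm/s) and payload limit (g) per plane.
SPEED_MM_S = {"horizontal": 21.0, "vertical": 15.0}
PAYLOAD_G = {"horizontal": 500.0, "vertical": 20.0}


def travel_time_s(distance_mm: float, plane: str) -> float:
    """Best-case time to cover a distance on one plane at the quoted top speed."""
    return distance_mm / SPEED_MM_S[plane]


def can_carry(mass_g: float, plane: str) -> bool:
    """Check a payload against the quoted limit for the given plane."""
    return mass_g <= PAYLOAD_G[plane]


def route_time_s(legs: list[tuple[float, str]]) -> float:
    """Sum best-case times over (distance_mm, plane) legs; transitions are ignored."""
    return sum(travel_time_s(distance, plane) for distance, plane in legs)


# Hypothetical route: 1 meter across a floor, then 0.5 meters up a wall.
example_route = [(1000.0, "horizontal"), (500.0, "vertical")]
print(f"{route_time_s(example_route):.1f} s")
```

Even this short route takes well over a minute, and a payload that is fine on the floor (say, 100 grams) would exceed the 20-gram limit once the robot starts climbing.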

Comparison between the biological inchworm and the robot inchworm: (a) Simple division of inchworm’s body parts. Thirteen body segments are divided into three groups (head, abdomen, and tail) according to a “Ω” deformation of the inchworm body; (b) SCCR transitions between crawling and climbing locomotion.

“It is the first time to achieve transition locomotion of a soft mobile robot between horizontal and vertical planes, which may expand the workspace of the soft robot,” says Shanghai Jiao Tong University Professor Guoying Gu.

ABii has Class

Built from decades of university research in child-robot interaction and educational intervention, ABii is just like a classroom assistant. She provides her students with step-by-step math and reading instructions, and can adapt to their individual learning habits. She knows when a student isn’t paying attention and enjoys trading high fives for right answers.

ABii is the brainchild of startup Van Robotics. This multidisciplinary team — made up of computer scientists, roboticists, and certified educators — is breaking new ground in the fields of social robotics and Artificial Intelligence (AI). Since its founding in 2016, the company has focused on developing smart robot tutors to improve the learning process for K-5 children with cognitive, learning, and developmental difficulties. Its flagship ABii robot personalizes math and reading lessons with fun social interactions, from fist bumps to dance parties. Able to work with any Wi-Fi-enabled device, ABii also adapts her interactions with each student based on sophisticated Machine Learning (ML) techniques.

In addition to ABii, Van Robotics has a second robot tutor in development, MARii: a musical assistant robot that teaches older adults with mild cognitive impairment how to play the piano. Equipped with sensors to detect stress and frustration in its users, MARii uses music to improve the adult’s cognitive and emotional health. Clinical trials funded by the National Institutes of Health (NIH) are set to begin for MARii this summer.

Today, ABii is used by thousands of children around the world, including in more than 30 U.S. states as well as England, Germany, the United Arab Emirates, and Qatar. Results from a 2018 efficacy study among Kansas, Alabama, New York, and Texas schools show that 67 percent of students who tested below proficient in mathematics improved by an average of 34 percent after just two to three sessions with ABii.

“Children naturally want to engage with robots,” says Laura Boccanfuso, PhD, Chief Executive Officer, Van Robotics. “With ABii, we wanted to make sure we were designing and engineering a robot that would work well with them.”

This engagement stems from Boccanfuso’s philosophy that robots are the physical embodiment of software, bringing more value to the learning process than traditional screen-based tools. For this reason, after first sketching her ideas for ABii on a napkin, she spent a lot of time fine-tuning the robot’s design. “Our goal was to engage children in a way that goes beyond screen-based interfaces,” Boccanfuso says. “We appreciate that ABii is an engineered, physically embodied robot that can model scenarios for students in ways that aren’t possible with screen-based apps.”

From that initial napkin sketch onward, it was critical for Boccanfuso to get ABii’s physical form just right. An advanced mechatronic package, ABii incorporates years of research in child-robot interaction. At 15 inches tall, the robot makes eye contact with children when placed on top of a desk. She has two degrees of freedom in each arm, as well as two in her head, totaling six. ABii is purposely larger than a toy, yet not so big that she intimidates students. She is also human enough to express emotions using the body language children are already familiar with, yet not so human-like that she is off-putting.

“We knew ABii needed to have a large head with large eyes and look pleasant — much like a familiar cartoon character a child would enjoy watching,” Boccanfuso says. “We also deliberately designed her for students that like to touch, move, hold, hug, and carry objects. Having too many degrees of freedom in her arms and legs could make her prone to damage.”
