Bots in Brief (05.2020)

Robotic COVID Warriors

As the coronavirus emergency exploded into a full-blown pandemic in early 2020, robot-making companies found themselves in an unusual situation. Many saw a surge in orders. Robots don’t need masks, can be easily disinfected, and, of course, don’t get sick. An army of automatons has since been deployed all over the world to help with the crisis. They are monitoring patients, sanitizing hospitals, making deliveries, and helping frontline medical workers reduce their exposure to the virus.

Photo courtesy of Clement Uwiringiyimana/Reuters.

Not all robots operate autonomously, however. Many, in fact, require direct human supervision, and most are limited to simple repetitive tasks. Robot makers say the experience they’ve gained during this trial-by-fire deployment will make their future machines smarter and more capable. These photos illustrate how some robots are helping to fight the pandemic.

A robot, developed by Asimov Robotics to spread awareness about the coronavirus, holds a tray with face masks and sanitizer. Photo courtesy of Sivaram V/Reuters.

To speed up COVID-19 testing, a team of Danish doctors and engineers at the University of Southern Denmark and Lifeline Robotics is developing a fully automated swab robot. It uses computer vision and machine learning to identify the optimal sampling spot inside a person's throat.

A robotic arm with a long swab then reaches in to collect the sample, with a speed and consistency that humans can't match. In the photo to the right, one of the creators, Esben Østergaard, puts his neck on the line to demonstrate that the robot is safe.

Photo courtesy of University of Southern Denmark.

A squad of robots serves as the first line of defense against person-to-person transmission at a medical center in Kigali, Rwanda. Patients walking into the facility have their temperature checked by the machines, which carry thermal cameras atop their heads. Developed by UBTech Robotics in China, the robots also use their distinctive appearance (they look like something out of a Star Wars movie) to get people's attention and remind them to wash their hands and wear masks.

Photos: left, Diligent Robotics; right, UBTech Robotics.

After six of its doctors became infected with the coronavirus, the Sassarese hospital in Sardinia, Italy, tightened its safety measures by bringing in robots. The machines, developed by UVD Robots, use LiDAR to navigate autonomously. Each bot carries an array of powerful short-wavelength ultraviolet-C lights that destroy the genetic material of viruses and other pathogens after a few minutes of exposure. There is currently a spike in demand for UV-disinfection robots as hospitals worldwide deploy them to sterilize intensive care units and operating theaters.
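One way to see why UV-C disinfection takes minutes rather than seconds is the standard dose model: the delivered dose (fluence, in mJ/cm²) equals irradiance (mW/cm²) multiplied by exposure time (s), and irradiance from a roughly point-like source falls off with the square of distance. The Python sketch below assumes that simplified model; the irradiance and target-dose numbers are illustrative placeholders, not specifications of UVD Robots' lamps or validated doses for any particular pathogen.

```python
def irradiance_at(ref_irradiance_mw_cm2: float, ref_distance_m: float, distance_m: float) -> float:
    """Inverse-square estimate of irradiance at a new distance (point-source assumption)."""
    if ref_distance_m <= 0 or distance_m <= 0:
        raise ValueError("distances must be positive")
    return ref_irradiance_mw_cm2 * (ref_distance_m / distance_m) ** 2


def exposure_time_s(target_dose_mj_cm2: float, irradiance_mw_cm2: float) -> float:
    """Seconds needed to deliver a dose: dose (mJ/cm^2) = irradiance (mW/cm^2) x time (s)."""
    if irradiance_mw_cm2 <= 0:
        raise ValueError("irradiance must be positive")
    return target_dose_mj_cm2 / irradiance_mw_cm2


# Hypothetical placeholder values, not vendor or clinical data.
TARGET_DOSE = 10.0      # mJ/cm^2
IRRADIANCE_AT_1M = 0.1  # mW/cm^2, assumed reading 1 m from the lamp array

for d in (1.0, 2.0, 3.0):
    e = irradiance_at(IRRADIANCE_AT_1M, 1.0, d)
    t = exposure_time_s(TARGET_DOSE, e)
    print(f"{d:.0f} m: {e:.4f} mW/cm^2 -> {t / 60:.1f} min")
```

Under this model, doubling the distance quarters the irradiance and therefore quadruples the exposure time needed for the same dose.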

In medical facilities, another ideal role for robots is taking over repetitive chores so that nurses and physicians can spend their time doing more important tasks. At Shenzhen Third People’s Hospital in China, a robot called Aimbot drives down the hallways, enforcing face-mask and social-distancing rules and spraying disinfectant.

Photo courtesy of UVD Robots.

At a hospital near Austin, TX, a humanoid robot developed by Diligent Robotics fetches supplies and delivers them to patient rooms. It repeats this task tirelessly, day and night, freeing hospital staff to spend more time with patients.

Volocopters Take to the Air

Volocopter recently took its VoloCity eVTOL (electric vertical takeoff and landing) aircraft into French skies and said it could be ready to launch passenger services at the 2024 Paris Olympics, three years from now.

The German company behind the 18-rotor, two-seat aircraft has spent the last decade refining its design as it competes with a slew of other firms exploring the urban air mobility market.

Volocopter’s latest test run took place at Paris-Le Bourget Airport. During the flawless flight, the machine covered a distance of 500 meters while traveling at speeds up to 19 mph (30 kph) at an altitude of 98 feet (30 meters). The VoloCity air taxi can fly autonomously or be controlled by an onboard pilot. The design also allows the vehicle to be flown remotely by a pilot on the ground. The vehicle can travel for about 18 miles (30 kilometers) on a single charge.

In other efforts, Volocopter recently unveiled VoloConnect: an eVTOL aircraft designed to carry four passengers on journeys up to 62 miles (100 km) at a speed of 112 mph (180 kph). With its six sets of rotors, two propulsive fans, and retractable landing gear, the VoloConnect machine looks markedly different from VoloCity. The company is currently testing scaled prototypes of VoloConnect and hopes to achieve certification for the aircraft in the next five years.

Volocopter is also working on VoloDrone: an eVTOL aircraft designed to carry cargo. It looks very similar to VoloCity but has no cockpit, meaning it has to be operated remotely or fly autonomously.

The Mayflower — Autonomous Style

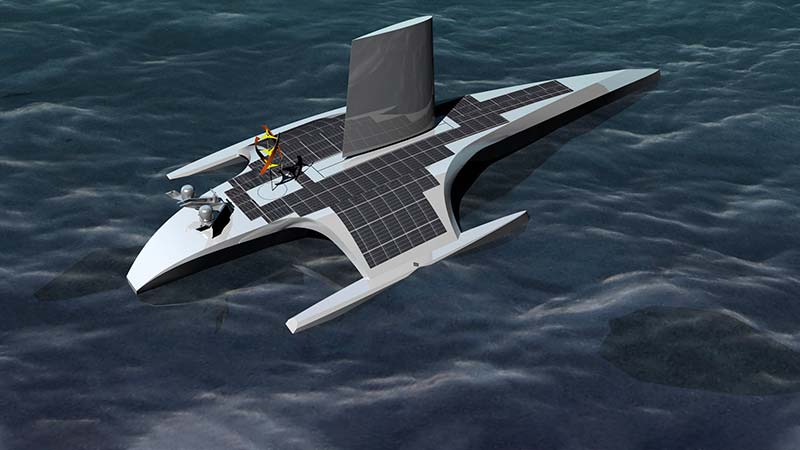

Andy Stanford-Clark, the chief technology officer for IBM in the U.K. and Ireland, was exuding nervous energy. It was the afternoon before IBM's Mayflower Autonomous Ship (MAS), a crewless, fully autonomous trimaran piloted entirely by IBM's Watson AI and built by the non-profit ocean research company ProMare, was set to begin its maiden voyage from Plymouth, England, to Cape Cod, MA, at 4 a.m. British Summer Time the next morning.

The challenge of sailing a ship autonomously isn't the same as that of driving an autonomous car. An autonomous car must steer down predefined streets and watch out for other cars, buses, cyclists, and pedestrians, all while interpreting road scenes at high speed. In the open ocean, lanes are wider, population density is lower, and events unfold far more slowly (although turning circles and stopping distances are also significantly worse). There is also far less risk to human life when an AI pilots a robot ship across the Atlantic Ocean than when a self-driving car cruises through an average American city at rush hour.

The Mayflower Autonomous Ship uses technology similar to that of today's self-driving cars, including LiDAR and radar, as well as automated identification systems trained to spot hazards such as buoys, debris, and other ships. By combining this data with maritime maps and weather information, MAS is designed to plot an optimal path across the ocean. If it detects something it needs to avoid, its IBM Operational Decision Manager software allows it to change course or even draw power from a backup generator to speed away from the hazard.
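The article doesn't describe how MAS's planner works internally, so the following is only a generic illustration of the idea of plotting a path around known hazards and re-plotting when a new one appears: a textbook A* search over a toy grid, where `#` cells stand in for hazards such as buoys or other ships. The grid, the function name `plan_route`, and the unit cost per move are hypothetical simplifications; a real marine planner would also have to account for weather and the ship's limited maneuverability.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def plan_route(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* search over a 4-connected grid; '#' cells are hazards to avoid.

    Returns the list of cells from start to goal, or None if no route exists.
    """
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    best_g = {start: 0}
    came_from: dict[Cell, Cell] = {}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if g > best_g.get(cur, float("inf")):
            continue  # stale heap entry; a cheaper path was already found
        if cur == goal:
            path = [cur]
            while cur in came_from:  # walk parent links back to the start
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


sea = [
    ".....",
    ".###.",
    ".....",
]
route = plan_route(sea, (1, 0), (1, 4))
print(route)

# A newly detected hazard blocks the northern channel; replanning detours south.
sea_updated = ["..#..", ".###.", "....."]
detour = plan_route(sea_updated, (1, 0), (1, 4))
print(detour)
```

Simply re-running the search on an updated map is the crudest form of "changing course"; it is shown here for clarity, not as a claim about how MAS handles hazards.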

The Mayflower Autonomous Ship was designed to make its three-week crossing, which began June 15, without any human intervention: everything was to be carried out autonomously.

While the course was set in advance, any deviation from it, whether responding to weather conditions or avoiding obstacles larger than a seagull, was to be handled by the ship's AI Captain, built by the startup MarineAI on IBM's AI and automation technologies. With no crew aboard, though, any big mechanical failure (all too easy when you're sloshing around in the open ocean) and suddenly one of the world's biggest autonomous vehicles becomes as useful as a laptop left overnight in a full bathtub.

Photos courtesy of Oliver Dickinson for IBM/ProMare.

To see how the journey actually turned out, go to www.mas400.com.

Robot Chopsticks Heavy Lifters

One area that several robotics companies have jumped into recently is box manipulation: specifically, using robots to unload boxes from the back of a truck, ideally significantly faster than a human can. The task plays to robots' strengths: the environment is semi-structured and usually predictable; speed, power, and precision are all highly valued; and it's not a job that humans are particularly interested in or designed for.

One of the more interesting approaches to this task comes from Dextrous Robotics, a Memphis, TN-based startup led by Evan Drumwright. Drumwright was a professor at George Washington University (GWU) before spending a few years at the Toyota Research Institute and then co-founding Dextrous in 2019 with his former student Sam Zapolsky.

Their approach is to manipulate boxes without suction or, really, any grippers at all. Instead, they use what is best described as a pair of moving arms, each gripping a robotic chopstick.

It's definitely cool, but why use chopsticks for box manipulation? Is this a good idea? Of course it is! The nice thing about chopsticks is that they can grip almost anything (even when scaled up), which makes them especially valuable in constrained spaces where shapes, sizes, and weights vary widely.

They're good for manipulation, too: they can nudge and reposition things precisely. And while Dextrous is initially focused on unloading trailers, this extra manipulation capability will let the company take on more difficult manipulation tasks in the future.

Part of Dextrous’ secret sauce is an emphasis on simulation. Hardware is hard, so ideally, you want to make one thing that just works the first time, rather than having to iterate over and over. Getting it perfect on the first try is unrealistic, but the better you can simulate things in advance, the closer you can get.

Dextrous Robotics co-founders Evan Drumwright and Sam Zapolsky. Photo: Dextrous Robotics.

“What we've been able to do is set up our entire planning, perception, and control system so that it looks exactly like it does when that code runs on the real robot,” says Drumwright. “When we run something on the simulated robot, it agrees with reality about 95 percent of the time, which is frankly unprecedented.”

Using very high-fidelity hardware modeling, a real-time simulator, and software that transfers directly between simulation and the real robot, Dextrous can confidently model how its system performs, even on phenomena that are notoriously tricky to simulate, such as contact and stiction.

The idea is that the end result will be a system that can be developed faster while performing more complex tasks better than other solutions.

Kit This

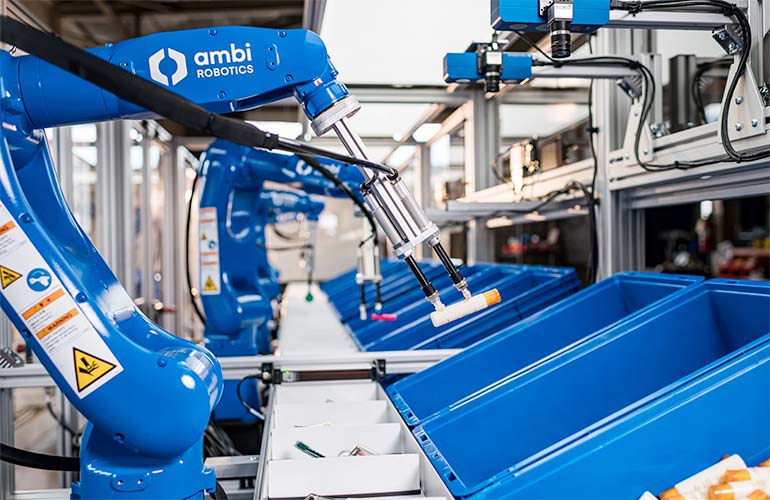

Ambi Robotics recently introduced AmbiKit: a new multi-robot kitting solution that is designed to package, well, kits. The automated solution uses artificial intelligence to learn how to identify and pick up items out of bulk storage and singulate them for the kitting operation. The company claims that its robots train 10,000x faster than alternatives. The new kitting system includes a five-robot picking line, currently deployed to augment the pick-and-place tasks associated with one of today's fastest-growing e-commerce opportunities: online subscription boxes.

AmbiKit can pick and pack millions of unique products into specific bags or boxes for shipping to online customers. Because the system is cloud-based, Ambi Robotics can learn new grasping methods and share them across its entire fleet of robots (no proprietary customer data is shared).

AmbiKit combines robots, vision, and AI to quickly learn how to pick and place items in kits. Image courtesy of Ambi Robotics.

The AI in AmbiKit enables it to learn new products quickly. As a result, AmbiKit is uniquely engineered for operations such as order personalization or the subscription box market. Ambi Robotics is working with some of its early subscription box customers to perfect the solution. AmbiKit is currently sorting up to 60 items per minute in a commercial production environment.

On-floor associates work alongside the AmbiKit system, inducting totes of products and packing completed kits for shipment to end customers. While it sorts products, the AI-powered system uses intuitive interfaces and dashboards to guide associates through the manual steps the robots don't handle.

Super Soft Hand

A team of researchers from the University of Maryland has 3D-printed a soft robotic hand that is agile enough to play Nintendo's Super Mario Bros. and win.

The feat demonstrates a promising innovation in the field of soft robotics, which centers on creating new types of flexible and inflatable robots that are powered by water or air rather than electricity. The inherent safety and adaptability of soft robots have sparked interest in their use for applications like prosthetics and biomedical devices. Unfortunately, controlling the fluids that make these soft robots bend and move has been especially difficult, until now.

The key breakthrough by the team (led by Ryan D. Sochol, assistant professor of mechanical engineering at the University of Maryland) was the ability to 3D print fully assembled soft robots with integrated fluidic circuits in a single step.

As a demonstration, the team designed an integrated fluidic circuit that allowed the hand to operate in response to the strength of a single control pressure. For example, applying a low pressure caused only the first finger to press a button on the Nintendo controller, making Mario walk, while a high pressure made Mario jump. Guided by a set program that autonomously switched between off, low, medium, and high pressures, the robotic hand pressed the controller's buttons well enough to complete the first level of Super Mario Bros. in less than 90 seconds.
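The control idea (one pressure input whose level decides which fingers move) can be modeled abstractly as a set of threshold valves. The Python sketch below is a hypothetical abstraction, not the Maryland team's actual circuit: it assumes each finger actuates once the shared pressure reaches that finger's threshold, so higher levels press more buttons. Only the low-pressure "walk" and high-pressure "jump" behaviors come from the description above; the medium-pressure "run" finger, the button labels, and the `FINGER_THRESHOLDS` table are invented for illustration.

```python
from enum import IntEnum


class Pressure(IntEnum):
    """The four discrete control-pressure levels in the playback program."""
    OFF = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical threshold model: a finger presses its button once the shared
# control pressure reaches that finger's threshold.
FINGER_THRESHOLDS = {
    "right (walk)": Pressure.LOW,
    "B (run)": Pressure.MEDIUM,  # assumption: not described in the source
    "A (jump)": Pressure.HIGH,
}


def pressed_buttons(level: Pressure) -> set[str]:
    """Return the controller buttons pressed at a given pressure level."""
    return {button for button, threshold in FINGER_THRESHOLDS.items() if level >= threshold}


def run_program(steps: list[tuple[Pressure, float]]):
    """Replay a timed schedule of (pressure level, duration in seconds) steps,
    yielding (start time, level, pressed buttons) for each step."""
    t = 0.0
    for level, duration in steps:
        yield t, level, pressed_buttons(level)
        t += duration


# Hypothetical schedule: walk, jump over an obstacle, keep walking, release.
program = [(Pressure.LOW, 2.0), (Pressure.HIGH, 0.5), (Pressure.LOW, 1.0), (Pressure.OFF, 0.5)]
for start, level, buttons in run_program(program):
    print(f"t={start:4.1f}s {level.name:<6} -> {sorted(buttons)}")
```

In this threshold model, a high pressure presses every button at once, which still yields a jump; a real fluidic circuit could route pressure differently.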
