Bots in Brief (04.2020)

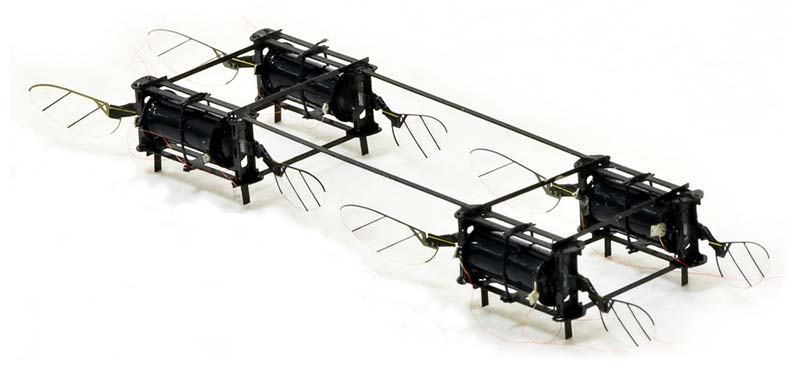

Mini Robot Aerialists

If you’ve ever swatted a mosquito away from your face, only to have it keep bugging you, then you know how remarkably acrobatic and resilient in flight they can be. Such traits are hard to build into flying robots, but MIT Assistant Professor Kevin Yufeng Chen has built a system that approaches the agility of insects.

Chen, a member of the Department of Electrical Engineering and Computer Science and the Research Laboratory of Electronics, has developed insect-sized drones with unprecedented dexterity and resilience. The aerial robots are powered by a new class of soft actuators that allow them to withstand the physical travails of real-world flight. Chen hopes the robots could one day aid humans by pollinating crops or inspecting machinery in cramped spaces.

Typically, drones require wide open spaces because they’re neither nimble enough to navigate confined spaces nor robust enough to withstand collisions in a crowd. “If we look at most drones today, they’re usually quite big,” says Chen. “Most of their applications involve flying outdoors. The question is: Can you create insect-scale robots that can move around in very complex, cluttered spaces?”

According to Chen, “The challenge of building small aerial robots is immense.” Pint-sized drones require a fundamentally different construction from larger ones. Large drones are usually powered by motors, but motors lose efficiency as you shrink them. So, Chen says, for insect-like robots “you need to look for alternatives.”

The principal alternative until now has been employing a small rigid actuator built from piezoelectric ceramic materials. While piezoelectric ceramics allowed the first generation of tiny robots to take flight, they’re quite fragile. And that’s a problem when you’re building a robot to mimic an insect.

Chen designed a more resilient tiny drone using soft actuators instead of hard, fragile ones. The soft actuators are made of thin rubber cylinders coated in carbon nanotubes. When voltage is applied to the carbon nanotubes, they produce an electrostatic force that squeezes the rubber cylinder and makes it elongate. Repeated elongation and contraction make the drone’s wings beat rapidly.
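
Soft actuators of this kind (a rubbery dielectric squeezed between compliant electrodes) are commonly described in textbooks by the Maxwell pressure relation below. It is offered as general background on dielectric elastomer actuators, not as a figure from Chen's work; the article gives no voltages or film thicknesses.

```latex
p = \varepsilon_0 \, \varepsilon_r \left( \frac{V}{t} \right)^{2}
```

Here $p$ is the effective compressive pressure on the elastomer, $\varepsilon_0$ the vacuum permittivity, $\varepsilon_r$ the elastomer's relative permittivity, $V$ the applied voltage, and $t$ the elastomer thickness. Because the pressure grows with the square of the electric field $V/t$, thinner elastomer layers reach a given squeezing force at a lower voltage.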

Chen’s actuators can flap the wings nearly 500 times per second, and because the actuators are soft, the drone has insect-like resilience. “You can hit it when it’s flying, and it can recover,” says Chen. “It can also do aggressive maneuvers like somersaults in the air.”

The tiny drone weighs in at just 0.6 grams (approximately the mass of a large bumble bee) and looks a bit like a tiny cassette tape with wings, though Chen is working on a new prototype shaped like a dragonfly.

Building insect-like robots can provide a window into the biology and physics of insect flight, a longstanding avenue of inquiry for researchers. Chen’s work addresses these questions through a kind of reverse engineering. “If you want to learn how insects fly, it is very instructive to build a scale robot model,” he says. “You can perturb a few things and see how it affects the kinematics or how the fluid forces change. That will help you understand how those things fly.” However, Chen aims to do more than add to entomology textbooks. His drones can also be useful in industry and agriculture.

Chen says his mini-aerialists could navigate complex machinery to ensure safety and functionality. “Think about the inspection of a turbine engine. You’d want a drone to move around [an enclosed space] with a small camera to check for cracks on the turbine plates.”

Other potential applications include artificial pollination of crops or completing search-and-rescue missions following a disaster.

Bionic Bird

Bionics4Education combines analog and digital learning in a didactic form with a practice-oriented educational kit and an accompanying digital learning environment. Bionics4Education was developed by a team of engineers, designers, computer scientists, and biologists. The team — which had successfully developed bionically inspired projects in the past — recognized that the countless prototypes and experimental models developed during the design process could be used as a source of ideas to get young people in particular excited about bionics and about STEM (Science, Technology, Engineering, and Mathematics) worldwide.

The didactic and learning strategy of the educational kit is based on the multi-layered learning experience of the people involved in the Bionic Learning Network. During the development phases of countless bionics projects, the experts were able to gather valuable experience over the years, which can now be passed on in a targeted manner with Bionics4Education. These bionics prototypes are at the heart of learning and have been adapted into a modular educational concept with a unique learning construction kit.

The goal of Festo’s Bionics4Education is to offer a range of bionics-inspired projects and supporting content that educators can use to create a project-based learning experience for their students that emphasizes creativity, innovation, and problem solving.

With these learning construction kits, students can assemble bionically inspired robots. The idea is to build robots modeled on nature and to control them remotely with mobile devices such as tablets or smartphones.

After the first Bionics Kit and the Bionic Flower, Festo Didactic has now taken inspiration from a swallow. The Bionic Swift is a robot bird for general technical education. With this experimental set, learners assemble the wings and tail themselves, and the finished bird is operated by remote control. The Bionic Swift is suitable for learners aged 15 and up in school or home environments and teaches the basics of bird flight, lightweight construction, aerodynamics, and practical model-building skills. It will become available in Q4 of this year.

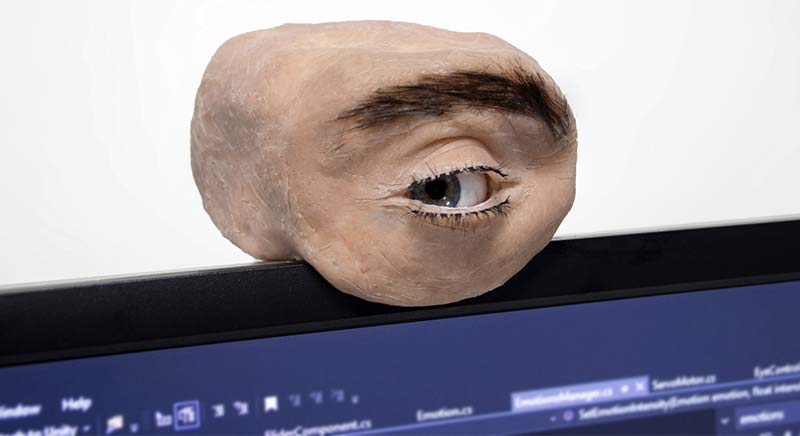

Look Into My Eye

Human eyes are crucial for communication. Through someone’s gaze, we can perceive happiness, anger, boredom, or fatigue. Our eyes move around when we are curious and hold steady to maintain focus. These familiar interaction cues shape our social behavior. While webcams basically share the same purpose as the human eye (to see), they aren’t expressive like human eyes. Eyecam brings the “human” aspects of the eye back into the camera.

Eyecam is a webcam shaped like a human eye. It can see, blink, look around, and observe you (that’s kinda creepy!). The purpose of this project by the folks over at https://marcteyssier.com/projects/eyecam is to speculate on the past, present, and future of technology.

We are surrounded by sensing devices. From surveillance cameras to Google or Alexa speakers to the webcams in our laptops, we are constantly being looked at. These devices are becoming invisible, blending into our daily lives to the point where we are unaware of their presence and stop questioning how they look, sense, and act. What are the implications of their presence for our behavior? This anthropomorphic webcam highlights the potential risks of hiding devices’ functions and challenges conventional device design.

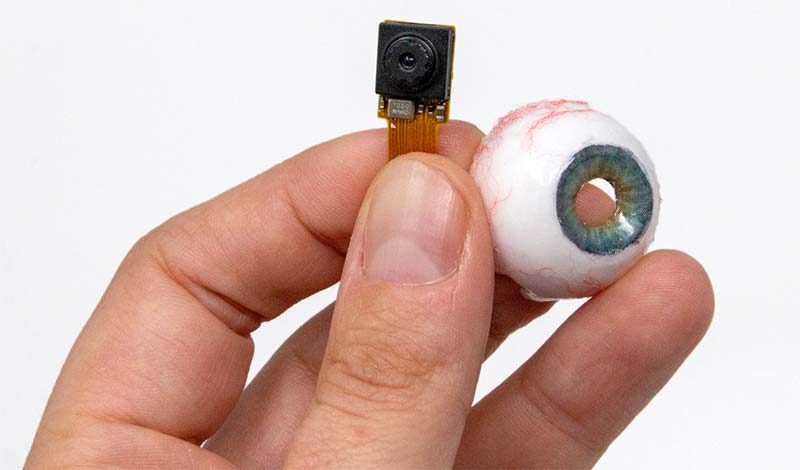

Eyecam comprises an actuated eyeball with a seeing pupil, actuated eyelids, and an actuated eyebrow. Their coordinated movement replicates realistic human-like motion. The device uses six servo motors positioned to best reproduce the different eye muscles. The motors replicate the lateral and vertical motion of the eyeball, the closing of the eyelids, and the movement of the eyebrow, and they are controlled by an Arduino Nano. A small camera inside the pupil captures high-resolution images (720p at 60 frames per second). This camera is connected to a Raspberry Pi Zero and is detected by the computer as a conventional plug-and-play webcam.

The device is designed not only to look like an eye but to act like one as well. To make the movements feel believable and natural, the device reproduces both unconscious physiological behaviors and conscious ones. Like a human, Eyecam is always blinking, and its eyelids dynamically adapt to the movements of the eyeball. When Eyecam looks up, the top eyelid opens widely while the lower one closes completely. Eyecam can also act autonomously, reacting on its own to external stimuli such as the presence of users in front of it.
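
The eyelid coupling and constant blinking described above can be sketched as a small mapping from gaze angle to lid positions. This is a hypothetical illustration, not Eyecam's firmware (which runs on the Arduino Nano): the angle range, the resting lid opening, the downward-gaze behavior, and the 4-second average blink interval are all assumptions.

```python
import random
from dataclasses import dataclass

# Hypothetical tuning values; Eyecam's real servo limits are not given in the article.
MAX_PITCH_DEG = 30.0   # vertical gaze angle treated as "fully up" or "fully down"
REST_OPENING = 0.7     # lid opening at straight-ahead gaze (0 = closed, 1 = fully open)

@dataclass
class LidPose:
    upper: float  # upper-lid opening, 0.0 (closed) to 1.0 (fully open)
    lower: float  # lower-lid opening, 0.0 (closed) to 1.0 (fully open)

def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))

def lids_for_gaze(pitch_deg: float) -> LidPose:
    """Couple the eyelids to vertical gaze (positive pitch = looking up)."""
    t = clamp(pitch_deg / MAX_PITCH_DEG, -1.0, 1.0)
    if t >= 0:
        # Looking up: upper lid opens wide, lower lid closes (as the article describes).
        upper = REST_OPENING + t * (1.0 - REST_OPENING)
        lower = REST_OPENING * (1.0 - t)
    else:
        # Looking down: assume the upper lid follows the eye partway down.
        upper = REST_OPENING * (1.0 + 0.5 * t)
        lower = REST_OPENING
    return LidPose(clamp(upper), clamp(lower))

def next_blink_delay(rng: random.Random, mean_s: float = 4.0) -> float:
    """Randomized wait until the next spontaneous blink (exponential, at least 1 s)."""
    return max(1.0, rng.expovariate(1.0 / mean_s))
```

`lids_for_gaze(30.0)` yields a fully open upper lid and a closed lower lid; a control loop would send these values to the lid servos and schedule blinks with `next_blink_delay`.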

Like our brain, the device can interpret what is happening in its environment. Computer-vision algorithms process the image stream, detect the relevant features, and interpret what’s happening. Does it know this face? Should it follow it?
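
The article doesn't say which computer-vision algorithms Eyecam uses. Assuming a face detector (for example, one of OpenCV's cascade classifiers) supplies bounding boxes, the sketch below covers the "should it follow it?" step: it picks the largest face and converts its position into how far the eye would need to turn to center it. The 70 by 43 degree field of view and the largest-face rule are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # face bounding box: (x, y, width, height) in pixels

def pick_target(faces: Sequence[Box]) -> Optional[Box]:
    """Follow the largest detected face, assumed to be the closest person."""
    return max(faces, key=lambda b: b[2] * b[3], default=None)

def gaze_angles(box: Box, img_w: int, img_h: int,
                hfov_deg: float = 70.0, vfov_deg: float = 43.0) -> Tuple[float, float]:
    """Return (yaw, pitch) in degrees the eye would turn to center the box.

    Uses a pinhole-camera model; positive yaw = right, positive pitch = up.
    """
    x, y, w, h = box
    u = (x + w / 2) - img_w / 2   # horizontal pixel offset from image center
    v = img_h / 2 - (y + h / 2)   # vertical offset; image rows grow downward
    fx = (img_w / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    fy = (img_h / 2) / math.tan(math.radians(vfov_deg) / 2)
    return math.degrees(math.atan2(u, fx)), math.degrees(math.atan2(v, fy))
```

A face centered at the right edge of a 1280 by 720 frame maps to a yaw of half the horizontal field of view (35 degrees here) and zero pitch.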

Eyecam is uncanny, unusual, and weird (that’s the understatement of the year). Its goal is to spark speculation about the aesthetics and functions of devices.

AI on the Menu

McDonald’s — one of the pioneers of modern fast food — has decided to step it up when it comes to efficiency and consistent service. Somewhere in a Chicago suburb, an AI is currently taking drive-thru orders.

The voice itself is described as female, similar to Alexa or Siri. It’s connected to a digital menu and can even suggest food if you’re unsure about what to order.

It can be a bit unsettling to hear a robotic voice greet you instead of the voice of a human employee, but it might be something to get used to if McDonald’s rolls this out to all locations. Just another bit of tech to add to the modern age.

The idea is that the AI will take care of the orders and allow human employees to focus on accuracy and quality of the food items. Fast-food places are always looking to speed up their service to contend with their competitors, and McDonald’s is hoping an AI drive-thru will help with this, even if it’s just a minute or two of difference.

Dill’s Unloading Speed is Sweet

Pickle Robots has announced its latest robot, Dill. It’s a robot that can unload boxes from the back of a trailer at places like ecommerce fulfillment warehouses at very high speeds. With a peak box unloading rate of 1,800 boxes per hour and a payload of up to 25 kg, Dill can substantially outperform even an expert human, and it can keep going pretty much forever as long as you have it plugged into the wall.

Pickle Robots says that Dill’s approach to the box unloading task is unique in a couple of ways. First, it can handle messy trailers filled with a jumble of boxes of different shapes, colors, sizes, and weights. Second, from the get-go, it’s intended to work under human supervision, relying on people to step in and handle edge cases.

Pickle’s “Dill” robot is based around a Kuka arm with up to 30 kg of payload. It uses two Intel L515s (LiDAR-based RGB-D cameras) for box detection. The system is mounted on a wheeled base, and after getting positioned at the back of a trailer by a human operator, it’ll crawl forward by itself as it picks its way into the trailer.

According to Pickle, the rate at which the robot can shift boxes averages 1,600 per hour, with a peak speed closer to 1,800 boxes per hour as mentioned. A single human in top form can move about 800 boxes per hour, so Dill is very, very fast. The robot does slow down when picking up some packages. Pickle CEO Andrew Meyer says that’s because “we probably have a tenuous grasp on the package. As we continue to improve the gripper, we will be able to keep the speed up on more cycles.”

To maintain these speeds, Dill needs people to supervise the operation and lend an occasional helping hand, stepping in every so often to pick up dropped packages and handle irregular items. Typically, Meyer says, that means one person for every five robots, depending on the use case, although even a single robot still requires someone to keep an eye on it. To be clear, supervision is not a full-time task: the supervisor can do other work while the robot runs. However, the longer a human takes to respond to an issue, the slower the robot’s effective speed will be. When the robot is doing something particularly complex, the company says, a human will typically need to help out about once every five minutes. Even in situations with lots of hard-to-handle boxes and relatively low efficiency, Meyer says users can expect speeds exceeding 1,000 boxes per hour.
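
Meyer's point that slow human responses drag down the robot's effective speed can be made concrete with a simple duty-cycle model. This is a back-of-the-envelope illustration, not Pickle's own math: it treats 1,600 boxes per hour as the uninterrupted rate (an assumption, since that average may already include assists), takes the company's figure of one assist every five minutes on complex loads, and uses hypothetical stall times for how long the robot waits on a human.

```python
def effective_rate(work_rate_bph: float, minutes_between_assists: float,
                   stall_minutes_per_assist: float) -> float:
    """Boxes per hour once idle time spent waiting for a human is included.

    The robot works for `minutes_between_assists`, then waits
    `stall_minutes_per_assist` (human response plus fix time) before resuming.
    """
    if minutes_between_assists <= 0 or stall_minutes_per_assist < 0:
        raise ValueError("intervals must be positive and stalls non-negative")
    cycle = minutes_between_assists + stall_minutes_per_assist
    return work_rate_bph * minutes_between_assists / cycle

# Hypothetical stall times; only the 1,600 and 5-minute figures come from Pickle.
for stall in (0.5, 1.0, 2.0, 5.0):
    print(f"{stall:>4} min stall -> {effective_rate(1600, 5, stall):,.0f} boxes/hour")
```

With a one-minute stall per assist, the model gives roughly 1,333 boxes per hour; a five-minute wait halves throughput to 800, the rate of a single fast human worker.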

Pickle Robots’ gripper includes a high-contact-area suction system and a retractable plate to help the robot quickly flip boxes. Photos courtesy of Pickle Robots.

Depending on the configuration, the system can cost anywhere from $50,000 to $100,000 to deploy and about the same amount per year to operate. Meyer points out that you can’t really compare the robot to a human simply on speed, since with the robot, you don’t have to worry about injuries, improper sorting of packages, training, or turnover. While Pickle is currently working on several other robot configurations for package handling, this particular truck-unloading configuration will be shipping to customers next year.

Gone to the Dogs

Human-robot interaction goes both ways. You’ve got robots trying to understand humans, as well as humans attempting to understand robots. Robots have all kinds of communication tools at their disposal: lights, sounds, screens, haptics. That doesn’t mean that robot-to-human (RtH) communication is easy, though, because the ideal communication modality is low cost and low complexity while also being understandable to almost anyone.

One good option for something like a collaborative robot arm can be to use human-inspired gestures (since it doesn’t require any additional hardware), although it’s important to be careful when you start having robots doing human stuff because it can set unreasonable expectations.

In order to get around this, roboticists from Aachen University are experimenting with animal-like gestures for cobots instead, modeled after the behavior of puppies.

For robots that are low-cost and appearance-constrained, animal-inspired (zoomorphic) gestures can be highly effective at state communication.

Photo courtesy of Franka Emika.

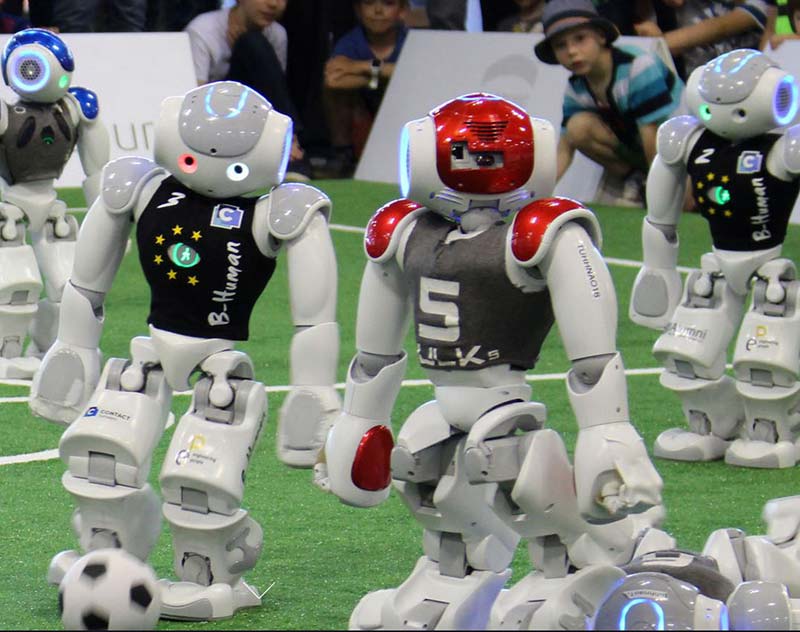

Soccer Punched

These robots are learning how not to fall over after stepping on your foot and kicking you in the shin.

B-Human is a collegiate project at the Department of Computer Science of the University of Bremen and the DFKI Research Department, Cyber-Physical Systems. The goal of the project is to develop suitable software in order to participate in several RoboCup events and also to motivate students for an academic career. The team consists of students and researchers from the University of Bremen and the DFKI.

These robots’ tasks are diverse. First of all, they need to orient themselves in their surroundings to know where the goals are and into which one they have to shoot the ball. They analyze many clues, such as the lines on the field. If one player is unsure where it is, the robots communicate with one another; teammates can even change that player’s mind.
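
When a robot is unsure of its position, information from teammates can help. B-Human's actual localization and team-communication code is not described here; the sketch below uses a generic technique, inverse-variance weighting, to show how a robot's own shaky position estimate and a teammate's more confident one might be fused. The coordinates (in meters) and variances are hypothetical.

```python
from typing import List, Tuple

Estimate = Tuple[float, float, float]  # (x_m, y_m, variance_m2)

def fuse(estimates: List[Estimate]) -> Estimate:
    """Inverse-variance weighted average of independent 2D position estimates.

    More confident estimates (smaller variance) get proportionally more weight,
    and the fused variance is smaller than any single input's variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, _, var in estimates]
    total = sum(weights)
    x = sum(w * ex for w, (ex, _, _) in zip(weights, estimates)) / total
    y = sum(w * ey for w, (_, ey, _) in zip(weights, estimates)) / total
    return x, y, 1.0 / total
```

A confident teammate estimate dominates the result, which is one way a player could have its "mind changed" about where it stands.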

While the goal is clear, the robots have to figure out how to get there. A robot constantly has to decide between several options: Which way do I take? When should I shoot? These decisions strongly influence the outcome of the game.

Double Digit

Digit from Agility Robotics is designed primarily as a delivery robot, a market that’s already got some pretty major (and successful) players. However, where most delivery robots opt for a design that resembles a smart drinks cooler on wheels, Digit uses a bipedal design, meaning that it walks upright on two legs.

This allows it to carry out actions like climbing steps while carrying items weighing up to 40 lbs in its arms. In all, it presents a robot that’s far more like the walking robots promised to us in science fiction.

Jonathan Hurst, co-founder of Agility Robotics, recently told Digital Trends, “Digit can localize itself within a known mapped space, and the user can identify a package to go get and bring back, and Digit can autonomously go retrieve the package off a shelf or the floor, avoid obstacles, and navigate through a building, and bring the package back.”

“Quadruped robots have a statically stable advantage, in that they can just stop and stand like a table,” said Hurst. “But all animals are dynamically stable — dogs stumble as much as humans do. For dynamic motions and real agility in the world, this dynamic stability is a necessity. Digit is not yet as stable as a person, but I see the day when it’s better.”

Major features include:

- Upper torso with integrated sensing, computing, and two 4-DOF arms.

- Extra bay for additional computing and custom payload integration.

- 2-DOF feet for improved balance and stability on a wide variety of surfaces.

- Sealed joints for all-weather outdoor operation.

- UN 38.3 certified battery for cargo air shipment.

- Comprehensive software API that leverages the controls, perception, and autonomy algorithms to develop end-user applications.

- Low-level API for customers wanting to develop their own controller.

Ultimately, what Agility is doing with Digit isn’t just coming up with an alternative to the robot designs that represent the status quo. It’s reaching for some seriously high-hanging fruit in the field of robotics. Building a robot that looks and moves like a human isn’t just a simple design choice to separate it from the likes of Starship Technologies’ delivery bots. It’s about building robots that can move and operate in the real world without us having to reimagine that world to accommodate them.

New Laws of Attraction

Imagine if some not-too-distant future version of a dating app could crawl inside your brain, extract the features you find most attractive in a potential mate, and then scan its database of romance seekers for whichever partner possessed the most of these physical attributes.

We’re not just talking qualities like height and hair color, but a far more complex equation based on a dataset of everyone you’ve ever found attractive before. In the same way that the Spotify recommendation system learns the songs you enjoy and then suggests others that conform to a similar profile — based on features like danceability, energy, tempo, loudness, and speechiness — this hypothetical algorithm would do the same for matters of the heart. Call it physical attractiveness matchmaking by way of AI.
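
At its simplest, matching on feature profiles like this is content-based filtering. The sketch below is a generic illustration, not Spotify's system or the researchers' method: it averages the feature vectors of liked items into a taste profile and ranks candidates by cosine similarity. The three features (say danceability, energy, and speechiness, each scaled to 0 to 1) and all values are hypothetical.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def recommend(liked: List[List[float]], candidates: Dict[str, List[float]],
              k: int = 1) -> List[str]:
    """Average the liked items into a taste profile, then rank candidates by similarity."""
    profile = [sum(col) / len(liked) for col in zip(*liked)]
    ranked = sorted(candidates, key=lambda name: cosine(profile, candidates[name]),
                    reverse=True)
    return ranked[:k]
```

Swapping song features for facial features is the leap the hypothetical dating app would make.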

In their recent experiment, researchers from the University of Helsinki and Copenhagen University used a generative adversarial network (GAN) trained on a database of 200,000 celebrity images to dream up hundreds of fake faces. These faces had some of the hallmarks of certain celebrities (a strong jawline here, a piercing set of azure eyes) but were not instantly recognizable as the celebrities in question.

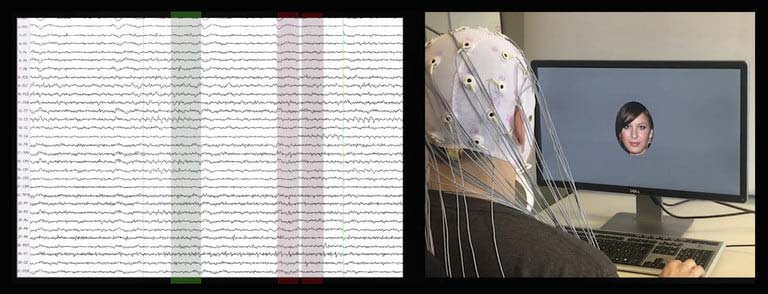

The images were then gathered into a slideshow and shown to 30 participants wearing electroencephalography (EEG) caps that read their brain activity via the electrical activity on their scalps. Each participant was asked to concentrate on whether they thought the face on the screen was good-looking or not. Each face was shown for a short period of time before the next image appeared. Participants didn’t have to mark anything down on paper, press a button, or swipe right to indicate their approval. Just focusing on what they found attractive was enough.

The researchers then used machine learning to probe the electrical brain activity each face provoked, looking for hidden patterns that revealed preferences for certain features. Broadly speaking, the more of a certain kind of brain activity detected, the greater the level of attraction.

Participants didn’t have to single out certain features as particularly attractive. To return to the Spotify analogy, in the same way that we might unconsciously gravitate to songs with a particular time signature, by measuring brain activity when viewing large numbers of images and then letting an algorithm figure out what they all have in common, the AI can single out parts of the face we might not even realize we’re drawn to. Machine learning is, in this context, like a detective whose job it is to connect the dots.
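
The article doesn't spell out how the EEG results are turned into a new face. One simple possibility, sketched here under stated assumptions, is to treat each fake face as a point in the GAN's latent space, score each face with an EEG classifier (assumed, not shown), and average the latent vectors of the faces scored as attractive, weighting each by its score. The resulting vector could then be fed back to the generator. The two-dimensional vectors are toy values; real GAN latents have hundreds of dimensions.

```python
from typing import List, Sequence

def preferred_latent(latents: Sequence[Sequence[float]], scores: Sequence[float],
                     threshold: float = 0.5) -> List[float]:
    """Score-weighted mean of the latent vectors whose predicted attraction
    score (from a hypothetical EEG classifier) exceeds `threshold`."""
    picked = [(z, s) for z, s in zip(latents, scores) if s > threshold]
    if not picked:
        raise ValueError("no image scored above the threshold")
    total = sum(s for _, s in picked)
    dim = len(picked[0][0])
    return [sum(s * z[i] for z, s in picked) / total for i in range(dim)]
```

Faces the brain responses flagged as unattractive simply drop out, so the averaged point leans toward whatever the attractive faces had in common.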

Awesome Eruption

A drone pilot recently captured some extraordinary footage looking directly down into an erupting volcano in Iceland. The photographer, Garðar Ólafs, flew his machine so close to the heat and spewing lava that, after guiding his drone home, he noticed that part of it had melted.

The video (available at https://www.instagram.com/p/CMzW24JHaCF) features some of the most impressive footage (and audio!) of an eruption available. This one takes place near a flat-topped mountain named Fagradalsfjall, about 15 miles south-west of Iceland’s capital, Reykjavik.

AI-powered Pack

Here’s a smart backpack that’s able to help its wearer better navigate a given environment without problems — all through the power of speech.

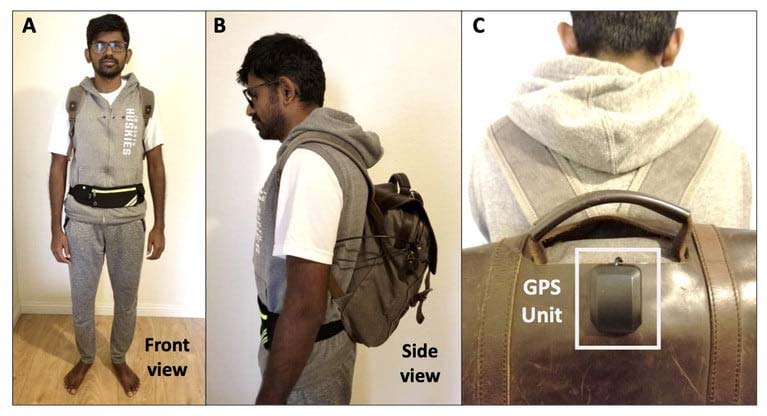

What researcher Jagadish Mahendran and team have developed is an AI-powered, voice-activated backpack that’s designed to help its wearer perceive the surrounding world. To do it, the backpack — which could be particularly useful as an alternative to guide dogs for visually impaired users — uses a connected camera and fanny pack (the former worn in a vest jacket, the latter containing a battery pack), coupled with a computing unit so it can respond to voice commands by audibly describing the world around the wearer.

That means detecting visual information about traffic signs, traffic conditions, changing elevations, and crosswalks alongside location information, and then being able to turn it into useful spoken descriptions delivered via Bluetooth earphones.
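
The article doesn't describe the backpack's software pipeline. As a hedged illustration, the sketch below assumes a detector has already produced labeled objects with a bearing and a depth reading (the kind of output a depth camera like the OAK-D can provide) and turns the nearest few into one short sentence for text-to-speech. The labels, the 15-degree "ahead" zone, and the three-item limit are hypothetical choices.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Detection:
    label: str          # class name from the detector, e.g. "crosswalk"
    bearing_deg: float  # negative = left of straight ahead, positive = right
    depth_m: float      # distance taken from the depth map

def direction(bearing_deg: float, ahead_half_width: float = 15.0) -> str:
    """Bucket a bearing into left / ahead / right."""
    if bearing_deg < -ahead_half_width:
        return "on your left"
    if bearing_deg > ahead_half_width:
        return "on your right"
    return "ahead"

def describe(detections: List[Detection], max_items: int = 3) -> str:
    """Turn the nearest detections into one short sentence for text-to-speech."""
    nearest = sorted(detections, key=lambda d: d.depth_m)[:max_items]
    if not nearest:
        return "Path clear."
    parts = [f"{d.label} {direction(d.bearing_deg)}, {d.depth_m:.0f} meters" for d in nearest]
    return "; ".join(parts) + "."
```

Sorting by distance keeps the spoken message short and puts the most urgent obstacle first.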

“The idea of developing an AI-based visual assistance system occurred to me eight years ago in 2013 during my master’s,” Mahendran recently told Digital Trends. “But I could not make much progress back then for [a] few reasons: I was new to the field and deep learning was not mainstream in computer vision. However, the real inspiration happened to me last year when I met my visually impaired friend. As she was explaining her daily challenges, I was struck by this irony: As a perception and AI engineer, I have been teaching robots how to see for years, while there are people who cannot see. This motivated me to use my expertise and build a perception system that can help.”

The system contains some impressive technology, including a Luxonis OAK-D spatial AI camera that leverages OpenCV’s Artificial Intelligence Kit with Depth, which is powered by Intel. It’s capable of running advanced deep learning neural networks while also providing high-level computer vision functionality, complete with a real-time depth map, color information, and more.

“The success of the project is that we are able to run many complex AI models on a setup that has a simple and small form factor and is cost-effective, thanks to the OAK-D camera kit that is powered by Intel’s Movidius VPU (an AI chip), along with Intel OpenVINO software,” Mahendran said. “Apart from AI, I have used multiple technologies such as GPS, point cloud processing, and voice recognition.”

The components are all hidden away from view; even the camera (which, by design, must be visible in order to record the necessary images) looks out at the world through three tiny holes in the vest.

Currently, the project is in the testing phase. “I did the initial [tests myself] in downtown Monrovia, California,” Mahendran said. “The system is robust, and can run in real time.”

Mahendran noted that, in addition to detecting outdoor obstacles — ranging from bikes to overhanging tree branches — it can also be useful for indoor settings, such as detecting unclosed kitchen cabinet doors and the like. In the future, he hopes that members of the public who need such a tool will be able to try it out for themselves.

Breeding Bots

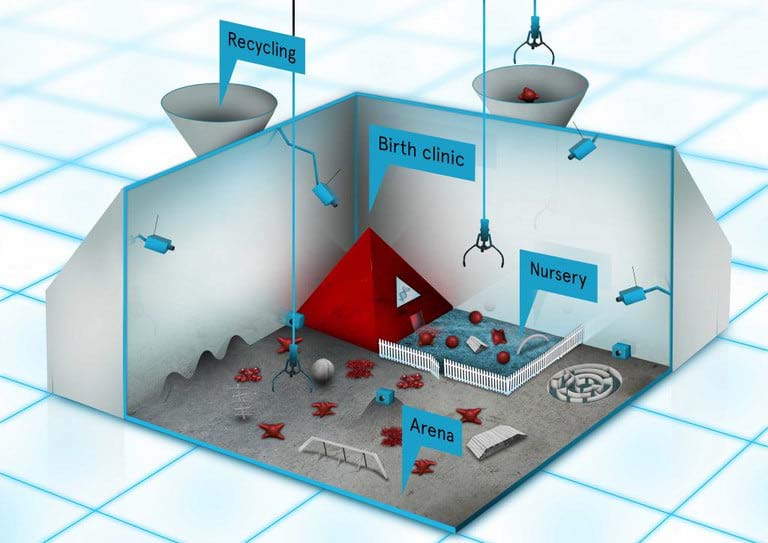

“We are trying to, if you like, invent a completely new way of designing robots that doesn’t require humans to actually do the designing,” said Alan Winfield, a professor of Cognitive Robotics in the Bristol Robotics Lab at the University of the West of England (UWE). “We’re developing the machine or robot equivalent of artificial selection in the way that farmers have been doing for not just centuries, but for millennia. What we’re interested in is breeding robots. I mean that literally.”

Winfield, who has been working with software and robotic systems since the early 1980s, is one of the brains behind the Autonomous Robot Evolution (ARE) project: a multi-year effort carried out by UWE, the University of York, Edinburgh Napier University, the University of Sunderland, and the Vrije Universiteit Amsterdam. These creators hope it will change the way that robots are designed and built.

EvoSphere.

The concept behind ARE is (at least hypothetically) simple. Think about science fiction movies where a group of intrepid explorers land on a planet and, despite their best attempts at planning, find themselves entirely unprepared for whatever they encounter. This is the reality for any of the inhospitable places where we might want to send robots, especially when those places could be tens of millions of miles away.

Currently, robots like the Mars rovers are built on Earth, according to our expectations of what they will find when they arrive. This is the approach roboticists take because there’s really no other option available.

However, what if it were possible to deploy a miniature factory of sorts (consisting of special software, 3D printers, robot arms, and other assembly equipment) that could manufacture new kinds of custom robots based on whatever conditions it found upon landing? These robots could be honed according to both environmental factors and the tasks required of them.

What’s more, using a combination of real world and computational evolution, successive generations of these robots could be made even better at these challenges. That’s what the Autonomous Robot Evolution team is working on.

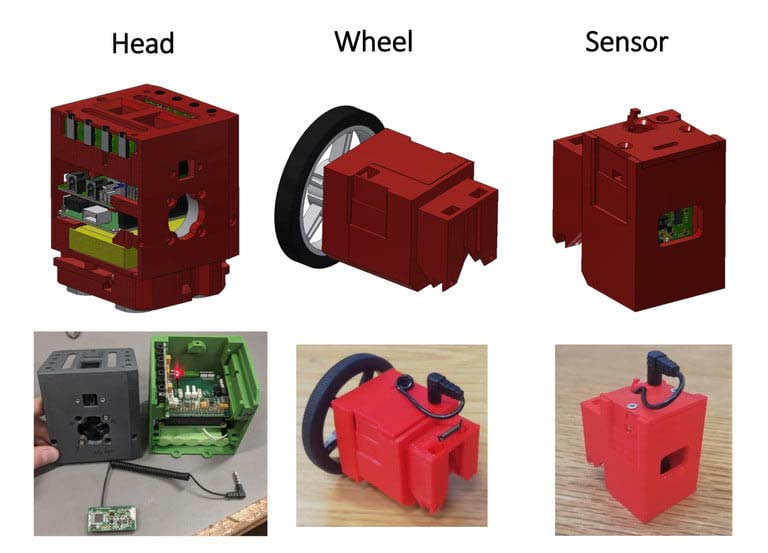

There are two main parts to the ARE project’s “EvoSphere.” The software aspect is called the Ecosystem Manager. Winfield said that it’s responsible for determining “which robots get to be mated.” This mating process uses evolutionary algorithms to iterate new generations of robots incredibly quickly.

Photo courtesy of Matt Hale/Autonomous Robot Evolution.

The software process filters out any robots that might be obviously unviable, either due to manufacturing challenges or obviously flawed designs, such as a robot that appears inside out. “Child” robots learn in a controlled virtual environment where success is rewarded. The most successful then have their genetic code made available for reproduction.
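
The filter, evaluate, and select cycle just described is the core of an evolutionary algorithm. The toy sketch below is a generic version, not the ARE project's Ecosystem Manager: the genome encoding, the viability test, and the fitness function (standing in for the virtual environment) are all hypothetical.

```python
import random
from typing import Callable, List, Optional

Genome = List[float]  # hypothetical encoding: one number per body/controller parameter

def evolve(fitness: Callable[[Genome], float],
           is_viable: Callable[[Genome], bool],
           genome_len: int = 4, pop_size: int = 20, n_parents: int = 5,
           generations: int = 30, mutation_sd: float = 0.1, seed: int = 0) -> Genome:
    """Toy evolutionary loop: discard unviable designs, score the rest with a
    stand-in for the virtual environment, and breed the fittest."""
    rng = random.Random(seed)

    def random_genome() -> Genome:
        return [rng.uniform(0.0, 1.0) for _ in range(genome_len)]

    population = [random_genome() for _ in range(pop_size)]
    best: Optional[Genome] = None
    for _ in range(generations):
        # 1. Filter out designs that could not be built or make no sense.
        viable = [g for g in population if is_viable(g)]
        if not viable:
            population = [random_genome() for _ in range(pop_size)]
            continue
        # 2. Evaluate in "simulation" and keep the fittest as parents.
        viable.sort(key=fitness, reverse=True)
        parents = viable[:n_parents]
        if best is None or fitness(parents[0]) > fitness(best):
            best = parents[0]
        # 3. Breed: keep the parents, then add mutated crossover children.
        children: List[Genome] = [list(p) for p in parents]
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2) if len(parents) > 1 else (parents[0], parents[0])
            children.append([rng.choice(pair) + rng.gauss(0.0, mutation_sd)
                             for pair in zip(a, b)])
        population = children
    if best is None:
        raise RuntimeError("no viable design was found")
    return best
```

Here a "robot" is just a short list of numbers; in ARE, evaluation happens in a virtual environment and, for the most promising designs, in the physical RoboFab.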

The most promising candidates are passed on to RoboFab to build and test. The RoboFab consists of a 3D printer (one in the current model, three eventually) that prints the skeleton of the robot before handing it over to the robot arm to attach what Winfield calls “the organs.” These refer to the wheels, CPUs, light sensors, servo motors, and other components that can’t be readily 3D-printed. Finally, the robot arm wires each organ to the main body to complete the robot.

Zap Enemy Drones with Microwaves

In July 2019, multiple drones were reportedly used to swarm Navy destroyers off the coast of California. The mysterious drones (around six in total) appeared over the course of several nights, flashing lights and performing “brazen maneuvers” close to the warships. They flew at speeds of 16 knots and stayed aloft for upwards of 90 minutes, longer than commercially available drones can fly.

It’s not known where they originated from. News of the incident was only made public in March 2021, following a Freedom of Information Act (FOIA) request from The Drive (https://www.thedrive.com).

Troublesome drones are not a new phenomenon. To address this growing problem, Epirus (which builds modern defense systems to address 21st-century threats) has created Leonidas, a portable high-power microwave energy weapon that can disable a swarm of drones simultaneously or knock out individual drones within a group with extremely high precision.

It works by overloading the electronics on board a drone, causing it to instantly fall out of the sky. It’s referred to as a Counter-UAS — or Counter Unmanned Aerial Systems — tool.

“Leonidas is a first-of-its-kind Counter-UAS system that uses solid-state, software-defined high-power microwaves (HPM) to disable electronic targets, delivering unparalleled control and safety to operators,” Epirus CEO Leigh Madden recently told Digital Trends. “Digital beamforming capabilities enable pinpoint accuracy so that operators can disable enemy threats [without disrupting anything] else. Leonidas utilizes solid-state Gallium Nitride power amplifiers to give the system deep magazines and rapid firing rates, while dramatically reducing size and weight.”

The energy weapon solution can be mounted on a truck, ship, or a variety of other vehicles or platforms, depending on what is required by the customer. The beam it fires out can be narrowed or widened based on the specifications of the target. Want to instantly stop a swarm of approaching drones? Widen the beam. Looking for an energy weapon sniper rifle that can hit just one drone out of a group? Narrow it down to a needle-like point.

It’s also fast. The directed energy weapon produces a very high rate of fire, equivalent to multiple rounds per second. This is crucial because — like stormtroopers in Star Wars — the threat of drone swarms is less about the indestructibility of any single one than their ability to potentially overwhelm through strength in numbers.

Working prototype of Leonidas.

No Poop Scooping

The 360 Smart Life Robot Vacuum Cleaner has eyes to help it detect messes (like pet poop) and obstacles before there are any mishaps.

With three LiDAR sensors (a wall sensor, a main sensor, and a front sensor), the 360 Smart Life Robot Vacuum can gauge the size of objects. Its built-in artificial intelligence decides in real time whether to continue over an object or avoid it. These sensors allow it to detect items as small as 0.04 inches (about 1 mm).
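
The avoid-or-continue decision can be sketched as a simple rule over what the sensors and AI report. The article doesn't describe the S10's actual logic; the object classes, the 20 mm climbable height, and the rule itself are hypothetical. The helper also checks the size figure above: 0.04 inches is about 1 mm.

```python
from dataclasses import dataclass

MM_PER_INCH = 25.4
CLIMBABLE_HEIGHT_MM = 20.0                           # hypothetical wheel-clearance limit
ALWAYS_AVOID = {"pet waste", "power cord", "sock"}   # hypothetical never-touch classes

@dataclass
class Obstacle:
    label: str        # class reported by the onboard AI
    height_mm: float  # size estimated from the LiDAR sensors

def should_avoid(ob: Obstacle) -> bool:
    """Steer around anything on the never-touch list or too tall to drive over."""
    return ob.label in ALWAYS_AVOID or ob.height_mm > CLIMBABLE_HEIGHT_MM

def inches_to_mm(inches: float) -> float:
    return inches * MM_PER_INCH
```

Checking the class before the size is what keeps a small but messy object, like pet waste, from being driven over.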

The 360 Smart Life S10 can vacuum your floors with up to 3,300 Pa of suction power, while its 3D mapping capabilities allow it to learn the layout of the home quickly. The AI will then determine the most efficient route through the home to provide you with a thorough clean.

Shooting the Tube

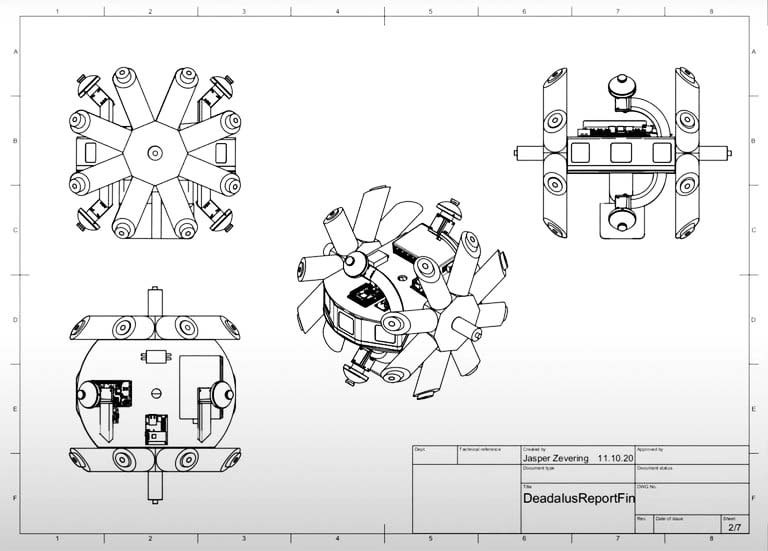

Andreas Nuechter, a professor of robotics and telematics at Julius-Maximilians-Universität of Würzburg (JMU) in Germany, is leading a project to build a rolling robot he hopes will be used to explore a system of hidden lava caves on the Moon. The spherical robot, which looks a bit like BB-8 from the Star Wars movies, is called DAEDALUS, an acronym for Descent And Exploration in Deep Autonomy of Lunar Underground Structures.

Building an entirely new kind of robot that will use lasers to semi-autonomously map a hitherto unexplored part of the Moon is most definitely hard work. However, thanks to researchers from JMU, Jacobs University Bremen GmbH, University of Padova, and the INAF-Astronomical Observatory of Padova, it might just be possible.

Exploring the cave network beneath the Marius Hills skylight is what DAEDALUS is designed to do. “Lava tubes are particularly interesting since they could actually provide, in the far future, shelter to humans from radiation and all the nasty effects you have on the Moon,” explained Nuechter. “If you plan to live on the Moon, it would make sense to use the existing geologic structures to go underground.”

Rather than use a conventional rover, the plan for DAEDALUS is a 46-centimeter transparent spherical robot whose components, such as the scientific sensors and the power supply, are housed in a protective sphere made of a material like plexiglass.

It would move by rolling, using motorized components inside the shell to shift its center of mass. Alternatively, or perhaps in addition, the researchers are exploring a set of “sticks” that could protrude from the shell and push the robot along, much as oars push a rowboat away from the shore. The sticks could also act like a tripod, anchoring the robot in place when needed.

The idea is that the robot, along with a Wi-Fi receiver, could be lowered into the lava tube pit by a lightweight crane that is also being developed as part of a European Space Agency project. Once at the bottom, it would use cameras and LiDAR sensors to scan its surroundings and build a three-dimensional map.

“The drawback of cameras is that you need an external light,” Nuechter said. “Either you need the Sun or you need a light bulb. But LiDAR is completely independent of [that requirement].”

DAEDALUS will operate partly autonomously. Because a radio signal takes time to travel between Earth and the Moon, direct supervision is out of the question. “If you cannot do direct supervision due to the delays and there is something like a delay longer than 300 milliseconds, then you need to come up with something else,” Nuechter said.
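The arithmetic behind that delay is simple enough to check. The Python sketch below is a back-of-the-envelope estimate, not part of the DAEDALUS design: it assumes the mean Earth-Moon distance of about 384,400 km and a signal traveling at the speed of light, and it ignores relay hops, ground-station processing, and the Moon's varying distance. The 300 millisecond threshold is the figure Nuechter cites.

```python
# Back-of-the-envelope Earth-Moon radio delay.
# Assumptions: mean Earth-Moon distance, signal at the speed of light,
# no relay hops or processing time included.
SPEED_OF_LIGHT_KM_S = 299_792.458
MEAN_EARTH_MOON_KM = 384_400


def radio_delay_s(distance_km: float) -> float:
    """Return the one-way travel time of a radio signal, in seconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S


one_way = radio_delay_s(MEAN_EARTH_MOON_KM)
round_trip = 2 * one_way   # command up, camera or sensor feedback back down
threshold_s = 0.300        # the 300 ms figure quoted in the article

print(f"one-way: {one_way:.2f} s, round trip: {round_trip:.2f} s")
print("direct supervision feasible:", round_trip <= threshold_s)
```

Even under these simplifications, the round trip comes to roughly 2.6 seconds, more than eight times the 300 millisecond threshold, which is why an operator cannot steer the robot move by move.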

That “something else” will be a hybrid control system whereby the human operator will specify broad commands (“go to x coordinates and take y pictures”) and then the robot can take certain autonomous actions to figure out the intermediate steps.

The DAEDALUS project is still a work in progress, yet to be given the official go-ahead by the European Space Agency. It’s likely that it will not be deployed for another decade. However, Nuechter said he is confident the technology will be ready to go a lot sooner than that.

Soft-bodied Bots

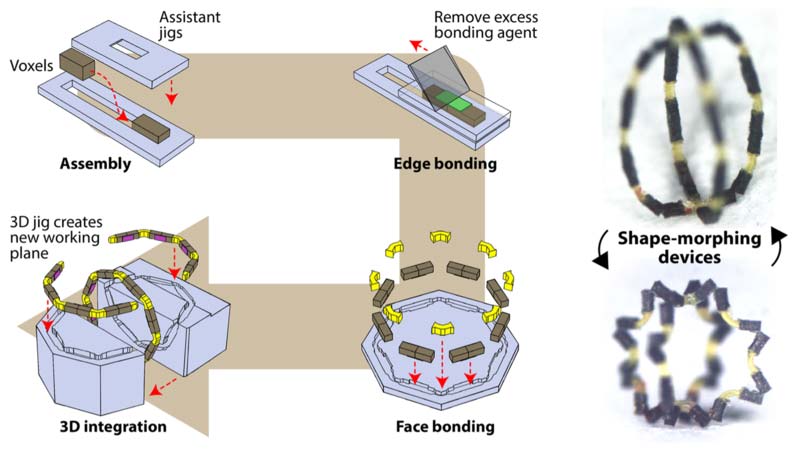

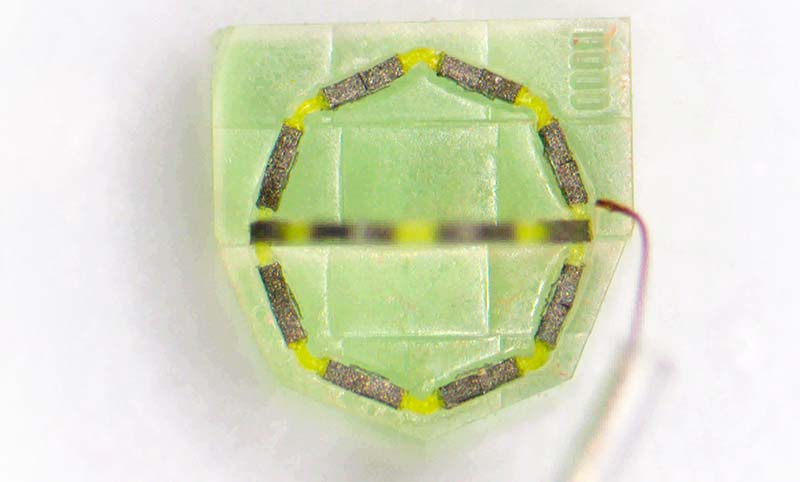

A team of scientists from the Max Planck Institute for Intelligent Systems (MPI-IS) has developed a system for fabricating miniature robots, building them block by block so that they function exactly as required.

Just as with a LEGO set, the scientists can freely combine individual components. The building blocks, or voxels (which could be described as 3D pixels), are made of different materials, ranging from basic matrix materials that hold the structure together to magnetic components that allow the soft machine to be controlled. “You can put the individual soft parts together in any way you wish, with no limitations on what you can achieve. In this way, each robot has an individual magnetisation profile,” says Jiachen Zhang. Zhang is a co-first author, with Ziyu Ren and Wenqi Hu, of the paper “Voxelated three-dimensional miniature magnetic soft machines via multimaterial heterogeneous assembly,” recently published in Science Robotics.

Illustrations of shape-morphing miniature robots fabricated using the heterogeneous assembly platform. Voxels made of multiple materials are freely integrated to create robots with arbitrary 3D geometries and magnetization profiles.

The project builds on many earlier projects in the Physical Intelligence Department at MPI-IS. For many years, scientists there have been working on magnetically controlled robots for small-scale wireless medical devices, ranging from millimeters down to micrometers in size. Their state-of-the-art designs have attracted attention around the world, but each was made from a single material, which constrained its functionality.

The new building platform enables many new designs and marks an important milestone in soft robotics research. The Physical Intelligence Department has already developed a wide variety of robots, from a caterpillar-inspired robot that crawls and rolls and a spider-like construct that can jump high to a robotic grasshopper leg and magnetically controlled machines that swim as gracefully as jellyfish. The new platform should accelerate that work and make it possible to build even more sophisticated miniature soft-bodied machines.

The scientists build each robot from two categories of material. The first is the base, mainly a polymer that forms the matrix, although other soft elastomers and biocompatible materials such as gelatin are also used. The second category comprises materials embedded with magnetic micro- or nanoparticles, which make the robot responsive to a magnetic field and therefore controllable.

A heterogeneous assembly platform fabricates multimaterial shape-morphing miniature robots.

Thousands of voxels are fabricated in one step. Like dough pressed into a cookie tray, the material is cast in tiny molds to create the individual blocks, each roughly 100 micrometers or less in length. The blocks are then assembled by hand under a microscope, because automating the assembly is still too complex.

Although the team used simulation before building each robot, they refined the designs by trial and error until they worked as intended. Ultimately, however, the team aims to automate the process, since only automation would deliver the economies of scale needed if the robots are ever commercialized. “In our work, automated fabrication will become a high priority,” Zhang says. “As for the robot designs we do today, we rely on our intuition based on extensive experience working with different materials and soft robots.”
