bots IN BRIEF (04.2019)

Robot, Heal Thyself

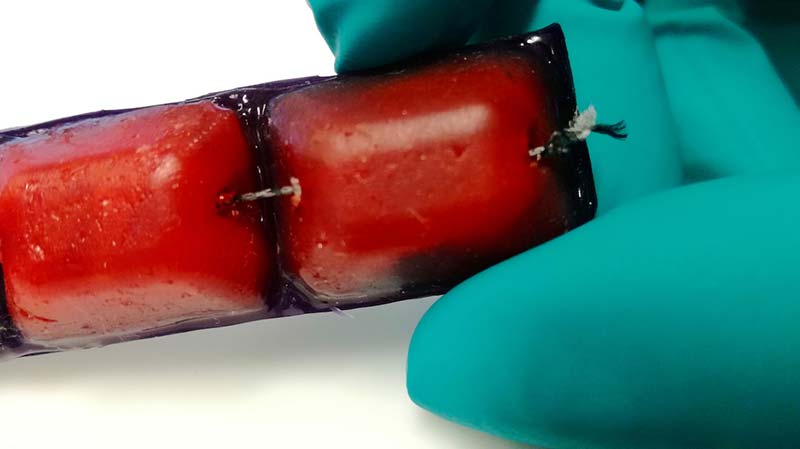

Robots break. The software breaks. The hardware breaks. While most of this is just a fundamental characteristic of robots that can’t be helped, the European Commission is funding a project called SHERO (Self HEaling soft RObotics) to try and solve at least some of those physical robot-breaking problems through the use of structural materials that can autonomously heal themselves over and over again.

This robot hand can autonomously heal itself over and over again.

SHERO is a three-year, $3 million+ collaboration between Vrije Universiteit Brussel, University of Cambridge, École Supérieure de Physique et de Chimie Industrielles de la ville de Paris (ESPCI-Paris), and Swiss Federal Laboratories for Materials Science and Technology (Empa). As the name SHERO suggests, the goal of the project is to develop soft materials that can completely recover from the kinds of damage that robots are likely to suffer in day-to-day operations, as well as the occasional more extreme accident.

Most materials, especially soft ones, are usually fixable, whether with super glue or duct tape. Typically, fixing things involves a human first identifying what’s broken and then performing a repair that can demand skill, labor, time, and money. SHERO’s soft materials will eventually make this entire process autonomous, allowing robots to self-identify damage and initiate healing on their own.

What these self-healing materials can do is really pretty amazing. The researchers are actually developing two different types. The first one heals itself when there’s an application of heat (either internally or externally) which gives some control over when and how the healing process starts. For example, if the robot is handling stuff that’s dirty, you’d want to get it cleaned up before healing it so that the dirt doesn’t become embedded in the material. This could mean that the robot either takes itself to a heating station or it could activate some kind of embedded heating mechanism to be more self-sufficient.

The damaged robot finger [top] can operate normally after healing itself.

The second kind of self-healing material is autonomous in that it will heal itself at room temperature without any additional input, and is probably more suitable for relatively minor scrapes and cracks.

Autonomous self-healing polymers don’t require heat; they can heal at room temperature. Developing soft robotic systems from autonomous self-healing polymers eliminates the need for additional heating devices; however, healing does take some time. The healing efficiency after three days, seven days, and 14 days is, respectively, 62 percent, 91 percent, and 97 percent.

This was the material used to develop the healable soft pneumatic hand shown here. Even large cuts can be healed entirely without the need for an external heat stimulus. Depending on the size of the damage, and even more on its location, the healing takes anywhere from seconds to a week. Damage at locations on the actuator that are subjected to very small stresses during actuation healed instantaneously. More severe damage, such as cutting the actuator completely in half, took seven days to heal. What’s cool is that even this severe damage could be healed completely without the need for any external stimulus.

After developing a self-healing robot gripper, the researchers plan to use similar materials to build parts that can be used as the skeleton of robots, allowing them to repair themselves on a regular basis.

Both of these materials can be mixed together and their mechanical properties can be customized, so that the structure they’re a part of can be tuned to move in different ways. The researchers also plan on introducing flexible conductive sensors into the material, which will help sense damage as well as provide position feedback for control systems.

Cookie Monster

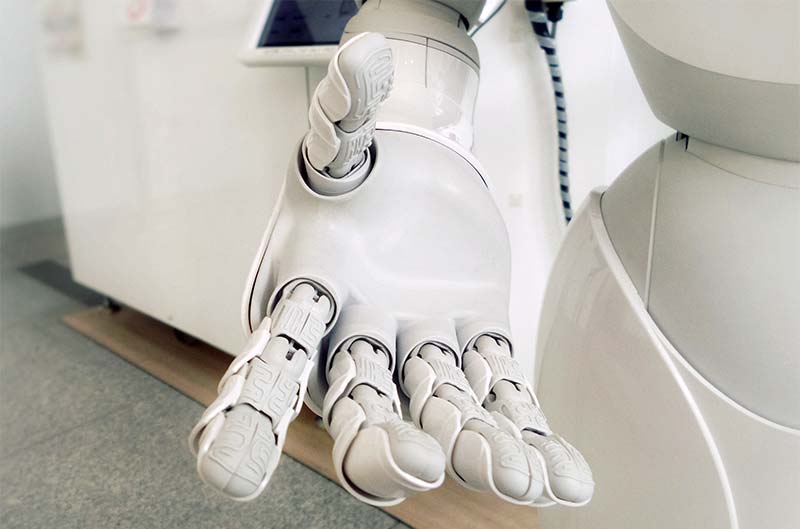

At FZI in Germany, they put together a very tasty cookie decorating demo with their humanoid robot, HoLLiE. The demo featured sprinkles, marshmallows, and a “frosting gun.”

Although the hat and the sign might throw you off, HoLLiE is not, in fact, a professional baking robot. Developed at FZI’s House of Living Labs, HoLLiE is designed to “manage complex tasks easily and support people in everyday situations.”

The robot is human-sized and mobile, with a similar workspace to a human, featuring 6-DoF arms and 9-DoF Schunk hands, along with a bendable waist that allows it to reach all the way down to the floor.

Photo courtesy of FZI.

Hooked on a Feeling

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have had a breakthrough in giving a robot the human-like ability to determine what an object looks like just by touching it, as well as predict what an object will feel like just by looking at it.

The researchers accomplished this by adding a tactile sensor called GelSight to a KUKA robot arm and then feeding the information collected by the sensor to the robotic arm’s AI, so it could begin learning the relationship between tactile and visual information on its own.

Twelve thousand videos of 200 fabrics and other household objects were then converted to still photographs and fed to the AI so it could further learn the relationship between touch and sight. The breakthrough could ultimately help robots become better at manipulating objects. Yunzhu Li, CSAIL PhD student and lead author of the research paper, explained:

“By looking at the scene, our model can imagine the feeling of touching a flat surface or a sharp edge. By blindly touching around, our model can predict the interaction with the environment purely from tactile feelings. Bringing these two senses together could empower the robot and reduce the data we might need for tasks involving manipulating and grasping objects.”

For now, CSAIL’s robot can only identify objects in a controlled environment. Next, the researchers plan to enlarge the tactile/visual data set so the robot can perform tasks in various other settings.

Photo courtesy of Franck V./Unsplash.

Assistive Robotic Arm

A new life-changing assistive device is the Jaco arm from Canadian tech company Kinova Robotics: a lightweight carbon fiber robotic arm that attaches to any power wheelchair available on the market. Controlled by the user, the robo-arm provides three fingers and six degrees of freedom. It can be used for a wide range of everyday tasks.

“The arm plugs into the user’s battery source, and can then be controlled through whatever mechanism the user uses to control their chair,” Sarah Woolverton, who heads the marketing and communication at Kinova, explained. “That could be a joystick, a sip-and-puff, or whatever else; we can tap into them all. It makes it very, very easy to start using the arm because it’s just an extension of the wheelchair that they’re already using.”

Shrinky Dinks Tech to the Rescue

Remember Shrinky Dinks? They were translucent sheets kids used to draw things on, then shrink in the oven to create all kinds of tiny trinkets.

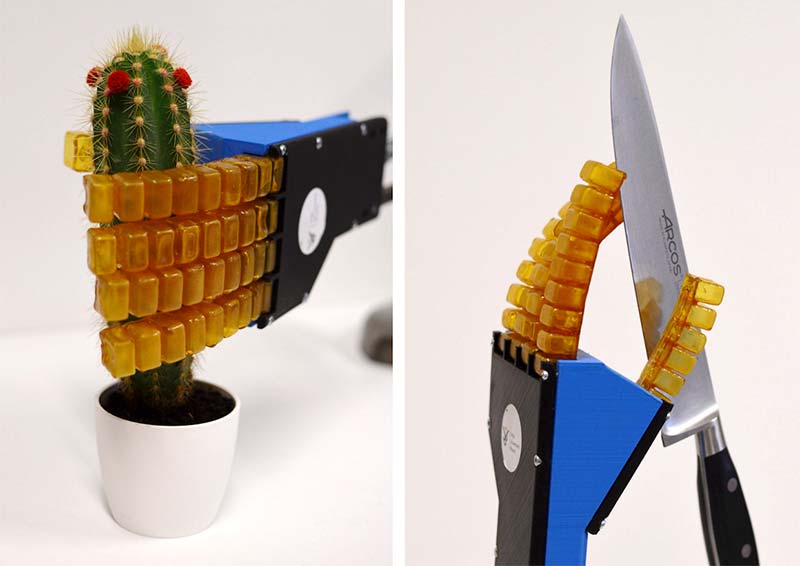

Well, scientists have now found a way to turn them into super-strong robotic grippers.

Recall that Shrinky Dinks are made of polystyrene plastic, a polymer that “remembers” its original shape. After creating them, the manufacturer stretches them to a larger size at the factory so kids can easily draw on a big surface with markers or pencils. When they’re done, the kids heat them in the oven at about 217°F. At that point, the polystyrene plastic recovers its original, pre-stretched shape, magically shrinking right before the kids’ eyes.

A team of scientists from the Department of Chemical and Biological Engineering at North Carolina State University and the Department of Aerospace Engineering at Auburn University in Alabama have found a way to use those properties to create a claw that can grasp objects.

This type of gripper could be used for soft robotic applications, with flexible materials that are comparable to those found in living organisms.

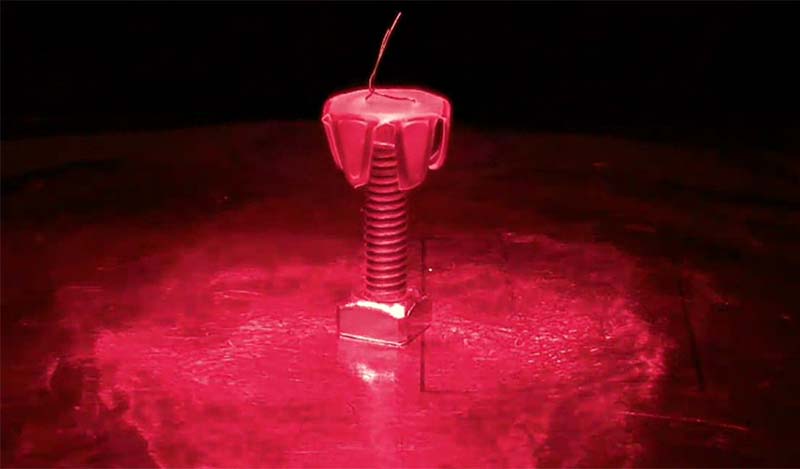

The research (published earlier this year in the American Chemical Society’s journal, Applied Polymer Materials) describes a method to turn thermoplastic polystyrene sheets into grippers. Soft grippers are typically made of hydrogels or elastomers which require solvents or continuous pneumatic pressure to change shape — both of which have drawbacks.

Solvents can damage the object that needs to be manipulated, and pneumatic pressure can be energy-intensive. This technology using Shrinky Dink style plastic avoids those pitfalls since it doesn’t require chemicals or power to operate. In fact, it doesn’t even require heat, like the Shrinky Dinks.

To make these grippers, the researchers use a black inkjet printer to draw different shapes onto the thermoplastic film, then cut them according to their design. Different designs result in different geometries, and each geometry is optimized to manipulate objects of a corresponding shape.

They then activate the Shrinky Dinks with light. The black ink absorbs the light and heats up the printed part of the sheet, which contracts the surface there and makes the sheet fold into the final shape of the gripper.

The grippers are single-use only, unfortunately, but this is good enough for disposable soft robots that can work in biomedical applications, for example. The grippers can release the objects if you apply additional heat to the entire surface.

The most surprising thing is their strength. They can hold over 24,000 times their own mass for several minutes before they fail, and 5,000 times their own mass for months. This is much stronger than hydrogel and elastomeric grippers, the research team claims.

It’s kinda crazy to see something so light holding a steel screw thousands of times its weight with such ease.

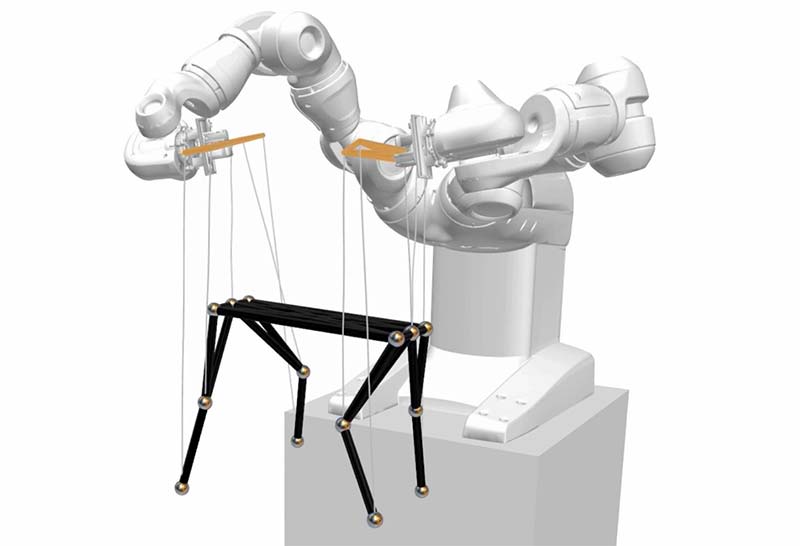

Stringing Us Along

So, there’s probably not a big need for robot puppeteers, but some folks over at ETH Zurich decided they’d have a crack at it anyway:

Marionettes are underactuated, high-dimensional, highly non-linear coupled pendulum systems. They are driven by gravity, the tension forces generated by a small number of cables, and the internal forces arising from mechanical articulation constraints. As such, the map between the actions of a puppeteer and the motions performed by the marionette are notoriously unintuitive, and mastering this unique art form takes unfaltering dedication and a great deal of practice. Our goal is to enable autonomous robots to animate marionettes with a level of skill that approaches that of human puppeteers.

As input, all a robot needs to know is the design of the puppet and the target motion you want the puppet to make. While moving the puppet in real life, the robot is continuously simulating its motions over the next second while iteratively optimizing to try to get the puppet to move the way it’s supposed to.
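The simulate-ahead-and-optimize loop described above is a form of receding-horizon control. Here is a minimal sketch of the idea, not the ETH Zurich implementation: the "puppet" is a made-up single mass hanging on a springy string, the constants `K` and `C`, the function names, and the target motion are all hypothetical, and the optimizer is plain gradient descent on one number (the handle height held fixed over the one-second look-ahead).

```python
# Toy receding-horizon controller. The one-mass "puppet" and every constant
# here are illustrative assumptions, not values from the research.
DT = 0.05          # simulation step in seconds
HORIZON = 20       # 20 steps * 0.05 s = a one-second look-ahead
K, C = 20.0, 2.0   # made-up string stiffness and damping


def step(x, v, handle):
    """Advance the toy puppet one time step for a given handle height."""
    a = K * (handle - x) - C * v
    v = v + a * DT
    x = x + v * DT
    return x, v


def rollout_cost(x, v, handle, targets):
    """Simulate ahead with the handle held fixed; sum squared tracking error."""
    cost = 0.0
    for target in targets:
        x, v = step(x, v, handle)
        cost += (x - target) ** 2
    return cost


def plan(x, v, handle, targets, iters=20, lr=0.01, eps=1e-4):
    """Refine the handle height by gradient descent on the rollout cost."""
    for _ in range(iters):
        grad = (rollout_cost(x, v, handle + eps, targets)
                - rollout_cost(x, v, handle - eps, targets)) / (2 * eps)
        handle -= lr * grad
    return handle


def target_at(t):
    """Hypothetical target motion: rise to 0.5 over two seconds, then hold."""
    return 0.5 * min(t / 2.0, 1.0)


def run(seconds=10.0):
    """Re-plan at every step, apply only the first command, and repeat."""
    x, v, handle = 0.0, 0.0, 0.0
    for i in range(int(seconds / DT)):
        t = i * DT
        targets = [target_at(t + (j + 1) * DT) for j in range(HORIZON)]
        handle = plan(x, v, handle, targets)  # warm-started from last plan
        x, v = step(x, v, handle)
    return x


final_x = run()
print(f"puppet height after 10 s: {final_x:.3f} (target 0.5)")
```

A real marionette has many coupled degrees of freedom and several strings, so the optimizer would be searching over far more than one number, but the loop has the same shape: simulate, score, adjust, act, repeat.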

Plus, the usefulness of this research is not constrained to just puppets:

Our long term goal is to enable robots to manipulate various types of complex physical systems — clothing, soft parcels in warehouses or stores, flexible sheets and cables in hospitals or on construction sites, plush toys, or bedding in our homes, etc. — as skillfully as humans do. We believe the technical framework we have set up for robotic puppeteering will also prove useful in beginning to address this very important grand challenge.

Really Stringing Us Along

MIT engineers have developed a magnetically steerable thread-like robot that can actively glide through narrow winding pathways, such as the labyrinthine vasculature of the brain.

In the future, this robotic thread may be paired with existing endovascular technologies, enabling doctors to remotely guide the robot through a patient’s brain vessels to quickly treat blockages and lesions, such as those that occur in aneurysms and stroke.

The core of the robotic thread is made from nickel-titanium alloy, or “nitinol,” a material that is both bendy and springy. Unlike a clothes hanger, which would retain its shape when bent, a nitinol wire would return to its original shape, giving it more flexibility in winding through tight tortuous vessels. The team coated the wire’s core in a rubbery paste, or ink, which they embedded throughout with magnetic particles.

MIT engineers have developed a magnetically steerable thread-like robot that can glide through narrow winding pathways, such as the brain’s blood vessels. Photo courtesy of MIT.

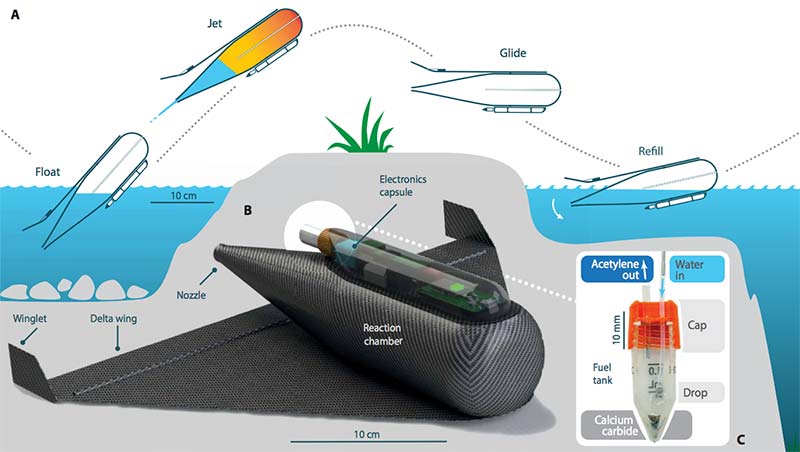

Airborne AquaMAV

Back at ICRA 2015, the Aerial Robotics Lab at Imperial College London presented a concept for a multimodal flying/swimming robot called AquaMAV. The really difficult thing about a combo flying and swimming robot is the transition from water to air in (ideally) a stable and repeatable way. Going from air to water is easy, thanks to gravity. The AquaMAV concept solved this by basically just applying as much concentrated power as possible to the problem, using a jet thruster to hurl the robot out of the water with quite a bit of velocity to spare.

In a recent paper appearing in Science Robotics, the roboticists behind AquaMAV presented a fully operational robot that uses a solid-fuel powered chemical reaction to generate an explosion that powers the robot into the air.

The 2015 version of AquaMAV used a small cylinder of CO2 to power its water jet thruster. This worked pretty well, but the mass and complexity of the storage and release mechanism for the compressed gas wasn’t all that practical for a flying robot designed for long-term autonomy.

So, there was one obvious way of generating large amounts of pressurized gas all at once: explosions.

The water jet coming out the back of AquaMAV is propelled by a gas explosion. The gas comes from the reaction between a little bit of calcium carbide powder stored inside the robot and water. Water is mixed with the powder one drop at a time, producing acetylene gas which gets piped into a combustion chamber along with air and water.

When ignited, the acetylene-air mixture explodes, forcing the water out of the combustion chamber and providing up to 51 N of thrust, which is enough to launch the 160-gram robot 26 meters up and over the water at 11 m/s.

It takes just 50 mg of calcium carbide (mixed with three drops of water) to generate enough acetylene for each explosion. With 0.2 g of calcium carbide powder on board, the robot has enough fuel for multiple jumps, and the jump is powerful enough that the robot can get airborne even under fairly aggressive sea conditions.
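For a sense of scale, the figures quoted above can be turned into two quick numbers: how many launches the onboard fuel allows, and how the peak thrust compares with the robot's weight. This is back-of-the-envelope arithmetic using only the values in the text plus standard gravity; the variable names are ours.

```python
G = 9.81                     # standard gravity, m/s^2

robot_mass_kg = 0.160        # 160-gram robot
peak_thrust_n = 51.0         # "up to 51 N of thrust"
carbide_on_board_mg = 200    # 0.2 g of calcium carbide powder
carbide_per_jump_mg = 50     # 50 mg per explosion

# Whole launches available from the onboard fuel.
jumps = carbide_on_board_mg // carbide_per_jump_mg

# Peak thrust relative to the robot's weight (dimensionless).
thrust_to_weight = peak_thrust_n / (robot_mass_kg * G)

print(f"launches per fuel load: {jumps}")
print(f"peak thrust-to-weight ratio: {thrust_to_weight:.1f}")
```

So "multiple jumps" works out to four per fuel load, and at its peak the jet pushes with roughly 32 times the robot's weight, which helps explain how it can punch out of choppy water.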

The current version is mostly optimized for the jetting phase of flight, and doesn’t have any active flight control surfaces yet. It’s just a glider at the moment, but a low-power propeller is an obvious step after that, and to get really fancy, a switchable gearbox could enable efficient movement on water as well as in the air. Long-term, the idea is that robots like these would be useful for tasks like autonomous water sampling over large areas.

The robot can transition from a floating state to an airborne jetting phase and back to floating (A). A 3D model render of the underside of the robot (B) shows the electronics capsule. The capsule contains the fuel tank (C), where calcium carbide reacts with air and water to propel the vehicle. Image courtesy of Science Robotics.

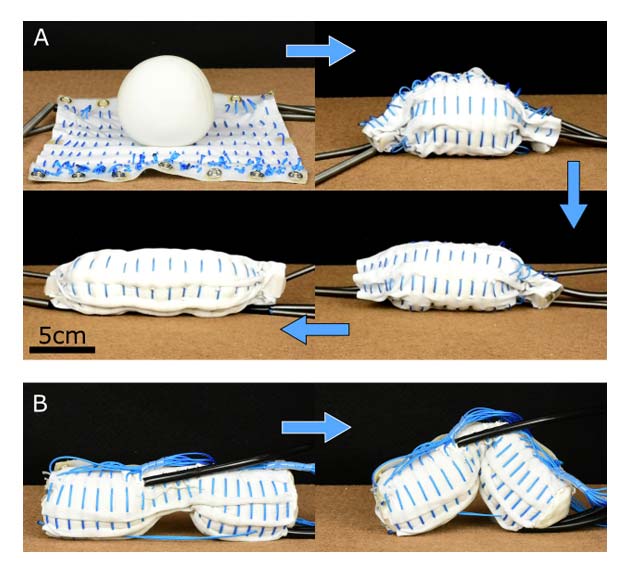

Clay Robot Sculpts Itself

Transforming robots have a way of making themselves adaptable to different environments or tasks. Usually, these robots are restricted to a discrete number of configurations — typically two or three different forms — because of the constraints imposed by the rigid structures the robots are usually made of.

Soft robotics have the potential to change all this, since they don’t have fixed forms but instead can transform themselves into whatever shape will enable them to do what they need to do.

At ICRA in Montreal earlier this year, researchers from Yale University demonstrated a creative approach toward a transforming robot powered by string and air, with a body made primarily out of clay.

The robot is actuated by two different kinds of “skin,” one layered on top of another. There’s a locomotion skin made of a pattern of pneumatic bladders that can roll the robot forward or backward when the bladders are inflated sequentially. On top of that is the morphing skin which is cable-driven and can sculpt the underlying material into a variety of shapes including spheres, cylinders, and dumbbells. The robot itself consists of both of these skins wrapped around a chunk of clay, with the actuators driven by offboard power and control.

This robot’s morphing skin sculpts its clay body into different shapes. Photos courtesy of Evan Ackerman and IEEE Xplore.

The Yale researchers have been experimenting with morphing robots that use foams and tensegrity structures for their bodies, but that stuff provides a “restoring force,” springing back into its original shape once the actuation stops. Clay is different because it holds whatever shape it’s formed into, making the robot more energy efficient.

While this robot is relatively simplistic, the researchers suggest some ways in which a more complex version could be used in the future:

Applications where morphing and locomotion might serve as complementary functions are abundant. For the example skins presented in this work, a search-and-rescue operation could use the clay as a medium to hold a payload such as sensors or transmitters. More broadly, applications include resource-limited conditions where supply chains for materiel are sparse. For example, the morphing sequence could be used to transform from a rolling sphere to a pseudo-jointed robotic arm. With such a morphing system, it would be possible to robotically morph matter into different forms to perform different functions.

Robot Tail No Monkey Business

Researchers at Keio University in Japan have created a wearable tail that promises to genuinely augment the wearer’s capabilities by improving their balance and agility.

Researchers from Keio University in Japan have demonstrated a bio-inspired robotic tail that can help improve a person’s balance. Photo courtesy of Keio University.

The easiest way to understand what inspired this creation is to watch monkeys effortlessly leaping from tree to tree. Their tails not only serve as an additional limb for grasping branches, but also help them reposition their bodies mid-flight for a safe landing by shifting the monkey’s center of balance as it moves. The Arque tail — as it’s been named — does essentially the same thing for humans, although we don’t recommend doing any leaping anytime soon.

The tail could give humans the same level of agility as even a cat, but its design was actually copied from the dextrous (but durable) appendage you’d find on the end of a seahorse. It’s assembled from a series of interconnected plastic vertebrae that can be customized for every wearer by simply adding more segments or counter-balancing weights, depending on the size of the person wearing it.

Inside the tail is a set of four artificial muscles powered by compressed air that contract and expand in different combinations to move and curl the tail in any direction.

The current design is dependent on an external air compressor to generate enough pressure for the tail to actually move, but as research on artificial muscles continues to advance, eventually all that could be needed to power the appendage is a strong battery.

The most immediate applications for the Arque tail include assisting workers tasked with lifting or carrying heavy objects. Like an exoskeleton suit that enhances the capabilities of the wearer’s muscles, the tail works like a counterbalance so that less force is required to lift something off the ground.

Unlike an exoskeleton, however, the tail is far less complicated and easier to take on and off.

The researchers who created the Arque also believe it could be an effective way to add full-body haptic feedback to persons exploring virtual worlds, altering their balance and momentum to mimic what their body is experiencing virtually.

See the tail in action at https://www.youtube.com/watch?v=Tr1-IhEhXYQ&feature=youtu.be.

Keep On Truckin’

In case you didn’t know, UPS has been carrying truckloads of goods in self-driving semi-trucks since May. The vehicles are driving through Arizona with routes between Phoenix and Tucson as an ongoing test. The shipping giant announced the partnership with TuSimple, an autonomous driving company, back in August. UPS Ventures is also taking a minority stake in the company.

The trucks created by TuSimple are Level 4 autonomous, which means that a computer is in complete control of driving with no required manual controls. While the trucks have been operating on the road, a driver and an engineer are still on board to monitor the system, as is required by law.

Flying Batteries

Battery power is a limiting factor for robots everywhere, but it’s particularly problematic for drones. With drones, there’s an awkward tradeoff between the amount of battery they carry, the amount of other more useful stuff they carry, and how long they can stay in the air.

Consumer drones seem to have settled around about a third of their overall mass in battery, resulting in flight times of 20 to 25 minutes at best before the drone has to be brought back for a battery swap.

Each flying battery can power the main quadrotor for about six minutes, and then it flies off and a new flying battery takes its place.

So, what happens when the drone is doing something that’s dependent on it staying in the air and it needs more juice?

When much larger aircraft have this problem (in particular military aircraft which sometimes need to stay on-station for long periods of time), the solution is mid-air refueling.

It’s easier to do this with liquid fuel than it is with batteries, of course, but researchers at UC Berkeley have come up with a clever solution: Give the batteries wings. Or, in this case, rotors.

The main quadrotor weighs 820 grams and is carrying its own 2.2 Ah lithium-polymer battery that by itself gives it a flight time of about 12 minutes. Each little quadrotor weighs 320 grams, including its own 0.8 Ah battery plus a 1.5 Ah battery as cargo. The little ones can’t keep themselves aloft for all that long, but that’s okay. As flying batteries, their only job is to go from the ground to the big quadrotor and back again.

As each flying battery approaches the main quadrotor, the smaller quadrotor takes a position about 30 centimeters above a passive docking tray mounted on top of the bigger drone. It then slowly descends to about 3 cm above, waits for its alignment to be just right, and then drops, landing on the tray which helps align its legs with electrical contacts. As soon as a connection is made, the main quadrotor is able to power itself completely from the smaller drone’s battery payload.

The flying batteries land on a tray mounted atop the main drone and align their legs with electrical contacts. Photos courtesy of UC Berkeley.

Each flying battery can power the main quadrotor for about six minutes, and then it flies off and a new flying battery takes its place. If everything goes well, the main quadrotor only uses its primary battery during the undocking and docking phases, and in testing, this boosted its flight time from 12 minutes to nearly an hour.
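The capacity and mass figures above allow a rough sanity check on how long one cargo battery could last. The sketch below assumes the main quadrotor draws a constant average current in flight, which is a simplification of ours rather than a measurement from the Berkeley team, and the variable names are hypothetical.

```python
main_capacity_ah = 2.2      # main quadrotor's own battery
main_flight_min = 12.0      # flight time on that battery alone
cargo_capacity_ah = 1.5     # battery delivered by each small quadrotor
main_mass_g = 820.0
flying_battery_mass_g = 320.0

# Average current the main quadrotor draws when flying alone (assumed constant).
avg_current_a = main_capacity_ah / (main_flight_min / 60.0)

# Upper bound on minutes per cargo battery if the draw did not change.
naive_cargo_min = cargo_capacity_ah / avg_current_a * 60.0

# While docked, the main quadrotor also has to lift the small one.
mass_ratio = (main_mass_g + flying_battery_mass_g) / main_mass_g

print(f"average draw flying alone: {avg_current_a:.1f} A")
print(f"upper bound per cargo battery: {naive_cargo_min:.1f} min")
print(f"docked mass vs. solo mass: {mass_ratio:.2f}x")
```

The upper bound comes out a little over eight minutes, but the docked pair is about 39 percent heavier than the main quadrotor alone, so the real per-battery time should land noticeably below that, which is consistent with the shorter figure reported in testing.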

All of this happens in a motion-capture environment, which is a big constraint, and getting this precision(ish) docking maneuver to work outside or when the primary drone is moving is something that the researchers are trying to figure out.

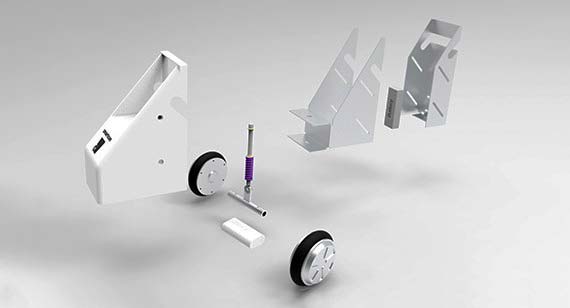

No More Taking Out the Trash

According to the US Bureau of Labor Statistics, taking out the trash is the least favorite chore of maybe all time.

Cue the robots.

There’s a cool new invention from smart home company Rezzi: a trash can that takes itself out.

Rezzi’s new trash can is called the SmartCan, which eliminates the need to drag your garbage and recycling bins out to the curb on trash day.

The SmartCan is motorized and will respond to commands from a corresponding mobile app and take itself out. Users can set the specific day and time they need their trash to be at the curb for pickup by local garbage professionals.

“We want to help people eliminate unnecessary chores from their daily lives,” Rezzi CEO and SmartCan creator Andrew Murray commented in a recent press release. “We see an opportunity to take IoT beyond just turning off lights or turning on music, and really help alleviate the burden of the mundane physical tasks that everyone faces.”

There’s no word on price or exact availability, although it’s been reported that Rezzi plans to bring the device to market by late 2020. That said, the SmartCan has been rapidly pushed into product prototyping and testing, bumping up its production schedule by at least half a year.

The device’s entire mechanical structure was built by Protolabs based on Rezzi’s design specs, including the main body fabricated from sheet metal, the drive train assembly, and the plastic cover made by a 3D printer.

“Much like what autonomous robotic vacuum cleaners have done for keeping a clean home and what smart doorbells have done for home security, SmartCan completes the recurring task of taking out the garbage,” Vicki Holt, president and CEO of Protolabs, said in a statement. “We’re seeing more and more autonomous products in the consumer electronics industry, aimed at reducing time spent doing less desirable things and enabling more time for valued activities.”

BEN, The Explorer

On June 1, 1937, a plane took off from Miami, FL. It was a twin-engine Lockheed Electra plane, which just so happened to contain the world’s most famous pilot and her navigator.

A combination of bad weather, radio transmission problems, and low fuel meant that the last recorded contact with the plane took place July 2, 1937. What followed was the most expensive sea and air search in American aviation up to that point. Tragically, no plane was ever discovered. Aviation pioneer Amelia Earhart and navigator Fred Noonan were finally declared dead on January 5, 1939.

Some 80 years later, noted marine explorer Robert Ballard hopes to find out exactly what happened, and he’s using the latest technology to do so. The 77-year-old Ballard is the same person who discovered the wreckage of the RMS Titanic at the bottom of the Atlantic Ocean in 1985.

While Ballard is leading the mission, he is being accompanied by an able assistant named BEN.

BEN is an acronym for Bathymetric Explorer and Navigator. He’s Ballard’s secret weapon in the search.

“BEN is a robotic vessel with no one on board,” Val Schmidt, research engineer at the University of New Hampshire and the UNH lead for the project, recently told Digital Trends. “We program it with instructions on where to go and how fast, and it allows systematic repeatable operations from the comfort and safety of a much larger ship or land. BEN is equipped with state-of-the-art multibeam sonar mapping and navigation systems that allow us to make very precise topographic (in our lingo, the term is ‘bathymetric’) maps of the seafloor, of the exacting kind that are required for safety of navigation.”

BEN doesn’t look particularly remarkable. Thirteen feet in length and capable of travelling at a speed of around five knots, it resembles a vastly scaled-down patrol boat. However, appearances can be deceiving. Able to operate for more than 16 hours at a time, BEN packs more gadgets than a James Bond vehicle. It boasts a plethora of smart sensors, including (but not limited to) a five-camera array, marine radar, LIDAR, and both internal and external FLIR thermal cameras for navigation in reduced visibility and monitoring of engine and compartment temperatures. Despite staying firmly on top of the water, it possesses an astonishing ability to monitor what’s happening below.

Schmidt explained: “Our primary research task is to develop methods to integrate these sensors both for operators in real time and into internal artificial intelligence systems to allow safe autonomous operation of BEN for marine science, and, in particular, the mission of seafloor mapping.”

BEN makes it possible to explore the seafloor in water that’s too deep for divers, but too shallow for deep-sea vessels like Ballard’s ship, the Nautilus.

Evidence suggests that Earhart was able to make a successful landing, most likely near the coral reef around the island of Nikumaroro, in the western Pacific Ocean. However, no plane was seen by Navy pilots when they surveyed the island days after Earhart’s disappearance. This suggests her aircraft may have been pushed off the reef into the surrounding water. It’s vital that this water can be fully explored, which is exactly where BEN comes in.

Amelia Earhart stands in front of her bi-plane in 1928. Photo courtesy of Getty Images.

What happens next remains to be seen. While it’s probably a longshot to expect this tragic head-scratcher to be solved approaching a century after the fact, this is nonetheless an impressive demonstration of how cutting edge technology can be used to add a fresh pair of eyes to even the most challenging situations.

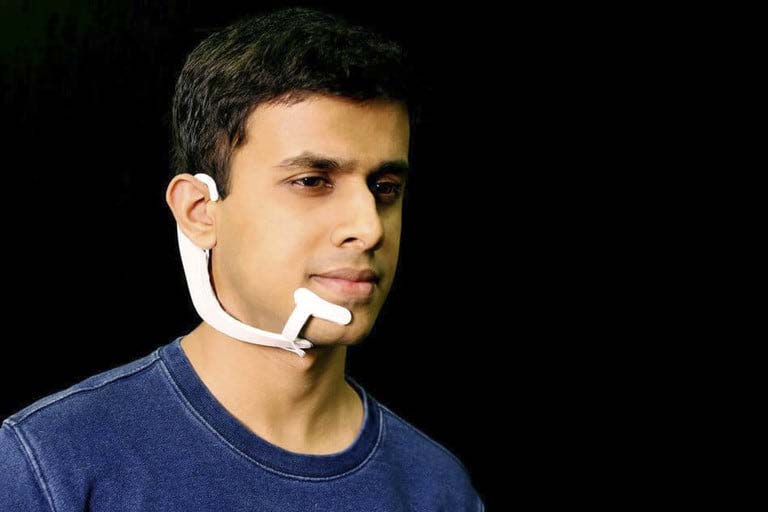

What Was I Thinking?

Imagine if you had a version of Amazon’s Alexa or Google Assistant inside your head, capable of feeding you external information whenever you required it, without you needing to say a single word and without anyone else hearing what it had to say back to you. An advanced version of this idea is the basis for future tech-utopian dreams like Elon Musk’s Neuralink, a kind of connected digital layer above the cortex that will let our brains tap into hitherto unimaginable machine intelligence.

Arnav Kapur, a postdoctoral student with the MIT Media Lab, has a similar idea. And he’s already shown it off.

His device, AlterEgo, doesn’t actually read the electrical signals from your brain, but it does let you silently ask questions and have the answer fed back to you via bone-conduction technology. For a person with a speech impediment, it could also be used to dictate words for a computer to read out.

“Throughout the history of personal computing, computers have always been external systems or entities that we interact with: desktops, smartphones, artificial intelligence tools, and even robots,” Kapur told Digital Trends. “Could we flip this paradigm? Could we augment and extend human abilities and weave the powers of computing and machine intelligence as an intrinsic human cognitive ability?”

Arnav Kapur/MIT.

The applications become more profound when considering how such a device could be used for a person with a neurodegenerative disease like Alzheimer’s. It could be possible to internally record semantic information and access this at a later time. Or, to offer unspoken cues in the form of memory aids. The dictation element would additionally be a game-changer for people with speech disabilities caused by conditions ranging from strokes, brain injuries, and tumors to Parkinson’s disease, ALS, Huntington’s disease, cerebral palsy, and more.

“My goal with AlterEgo is to enable people with speech pathologies to communicate in real time the rates at which I speak,” Kapur said. “My current work with the system is working with a variety of patients and optimizing and engineering the system to enable communication.”

According to Kapur, the total number of people in the US who fall into this category is around 7.5 million, roughly equivalent to the population of the entire state of Washington.

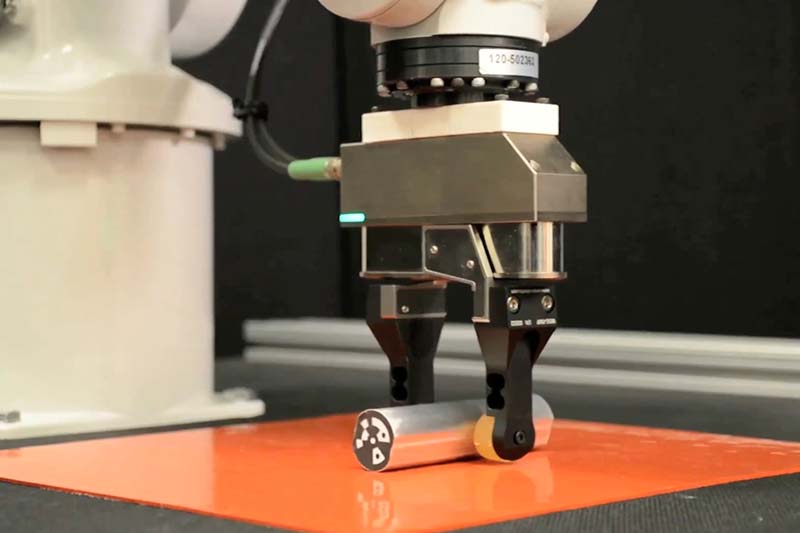

Environmentally Friendly

Engineers at MIT have now hit upon a way to impart more dexterity to simple robotic grippers: use the environment as a helping hand. The team — led by Alberto Rodriguez, an assistant professor of mechanical engineering, and graduate student Nikhil Chavan-Dafle — has developed a model that predicts the force with which a robotic gripper needs to push against various fixtures in the environment in order to adjust its grasp on an object.

For instance, if a robotic gripper aims to pick up a pencil at its midpoint but instead grabs hold of the eraser end, it could use the environment to adjust its grasp. Instead of releasing the pencil and trying again, Rodriguez’s model enables a robot to loosen its grip slightly, and push the pencil against a nearby wall — just enough to slide the robot’s gripper closer to the pencil’s midpoint.
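The MIT model itself isn’t spelled out here, but the intuition behind “loosen the grip, then push” can be sketched with textbook Coulomb friction. This is our simplification, not Rodriguez’s model: it assumes a two-finger gripper, equal squeeze force at each finger, a single friction coefficient, and it ignores gravity and torque. The function name and the example numbers are hypothetical.

```python
def min_push_to_slide(grip_force_n, friction_coeff, contacts=2):
    """Smallest push along the object (in newtons) that overcomes static
    friction at the finger contacts, under a simple Coulomb model."""
    return contacts * friction_coeff * grip_force_n


# Made-up numbers: a firm grasp versus a deliberately loosened one.
firm = min_push_to_slide(grip_force_n=10.0, friction_coeff=0.5)
loose = min_push_to_slide(grip_force_n=2.0, friction_coeff=0.5)

print(f"push needed with a firm grip:  {firm:.1f} N")
print(f"push needed with a loose grip: {loose:.1f} N")
```

Under these assumptions, easing the squeeze from 10 N to 2 N cuts the push needed against the wall from 10 N to 2 N, which is why loosening first lets the pencil slide without being dropped.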

Partnering robots with the environment to improve dexterity is an approach Rodriguez calls “extrinsic dexterity.” With Rodriguez’s new approach, existing robots in manufacturing, medicine, disaster response, and other gripper-based applications may interact with the environment in a cost-effective way, to perform more complex maneuvers.

A simple robotic gripper can adjust its grip using the environment. Here, a robot grips a rod lightly while pushing it against a tabletop. This allows the rod to rotate in the robot’s fingers. Image courtesy of the researchers/MIT News.
