Servo Magazine (2022 Issue-3)

Bots in Brief (03.2022)

Flying DRAGONs

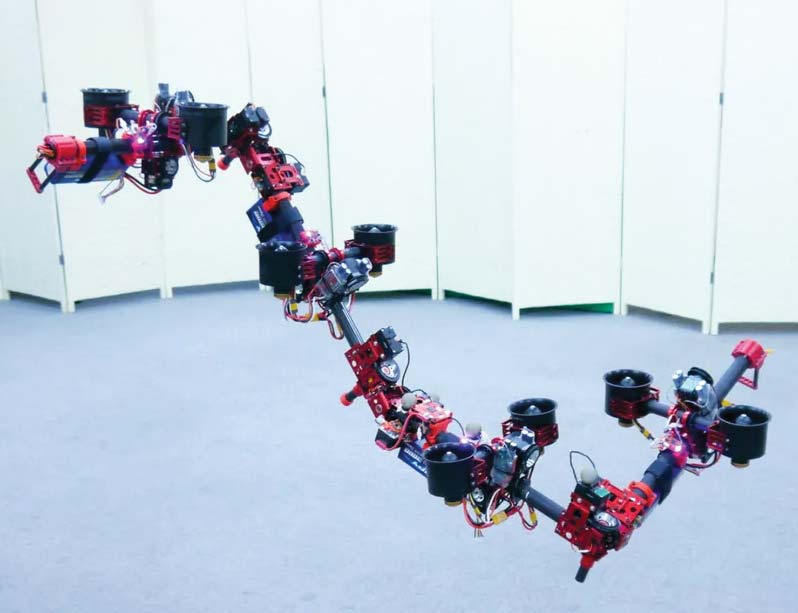

A few years ago at JSK Lab at the University of Tokyo, roboticists developed a robot called DRAGON, which stands for “Dual-rotor embedded multilink Robot with the Ability of multi-deGree-of-freedom aerial transformatiON.” It’s a modular flying robot powered by four pairs of gimbaled ducted fans with each pair linked together through a two-axis actuated joint, making it physically flexible in flight to a crazy degree. It can literally transform on the fly, from a square to a snake to anything in between, allowing it to stretch out to pass through small holes and then make whatever other shape you want once it’s on the other side.

DRAGON has more degrees of freedom than it knows what to do with, in the sense that the hardware is all there. However, the trick is getting it to use that hardware to do things that are actually useful in a reliable way. Back in 2018, DRAGON was just learning how to transform itself to fit through small spaces, but now it’s able to adapt its entire structure to manipulate and grasp objects.

In a couple of recent papers (in the International Journal of Robotics Research and IEEE Robotics and Automation Letters), Moju Zhao and colleagues from the University of Tokyo presented some substantially updated capabilities for DRAGON. For example, it’s much more stable now.

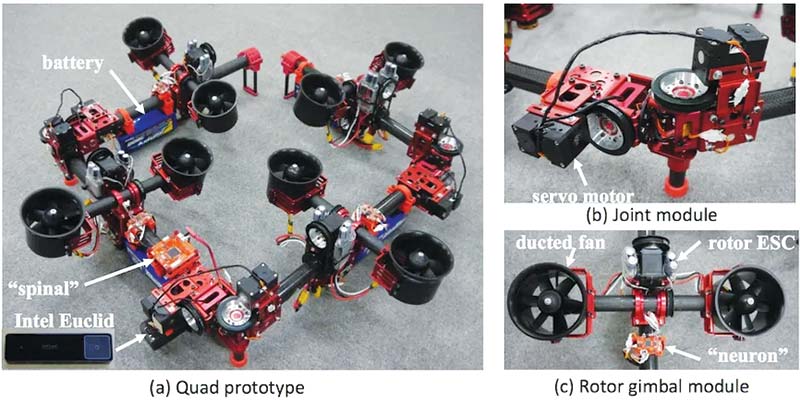

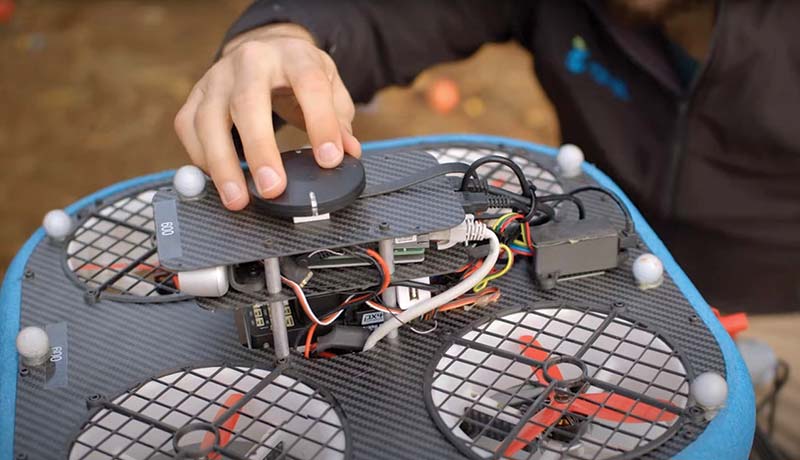

The DRAGON prototype has four links (a), connected through joints (b) that are powered by servo motors. Each link carries a pair of ducted fan thrusters (c). A flight control unit (labeled “spinal”) with an onboard IMU and an Intel Euclid sits on the second link. Each link also has a distributed control board (labeled “neuron”), and the ducted fan rotors are driven by electronic speed controllers. (Photo courtesy JSK Lab/University of Tokyo.)

It’s easy to imagine all the ways that DRAGON could mobile-manipulate the heck out of things that a ground-based manipulator simply could not. While there are a bunch of different flavors of drones with arms stapled to them for this very reason, making the structure of the drone itself into the manipulator is a much more elegant solution.

Or rather, a potentially elegant solution, since DRAGON is still very much a research project. It weighs a hefty 7.6 kilograms, and while its payload of 3.4 kg is respectable, its maximum flight time of three minutes is a constraint that will have to be overcome before the drone makes it very far out of the lab.

To be fair, though, this isn’t the focus of the research at the moment. It’s more about expanding DRAGON’s capabilities, which is a control problem. With the incredible number of degrees of freedom that the system has, it’s theoretically capable of doing any number of things, but getting it to actually do those things in a reliable and predictable manner is the challenge.

And, as if all that wasn’t enough of a challenge, Zhao commented that they are considering giving DRAGON the ability to walk on the ground to extend its battery life.

RoboCup Class of 2022

This photo, featuring more than 100 Nao programmable educational robots, two Pepper humanoid assistive robots, and their human handlers, was taken at the end of RoboCup 2022 in Bangkok. After two years during which RoboCup was scuttled by the global pandemic, the organizers were able to bring together 13 robot teams from around the world (with three teams joining remotely) to compete in the automaton games. The spirit of the gathering was captured in this image, which (according to RoboCup organizers) shows robots with a combined market value of roughly US $1 million. (Courtesy of Patrick Göttsch and Thomas Reinhardt.)

(Under) Water World

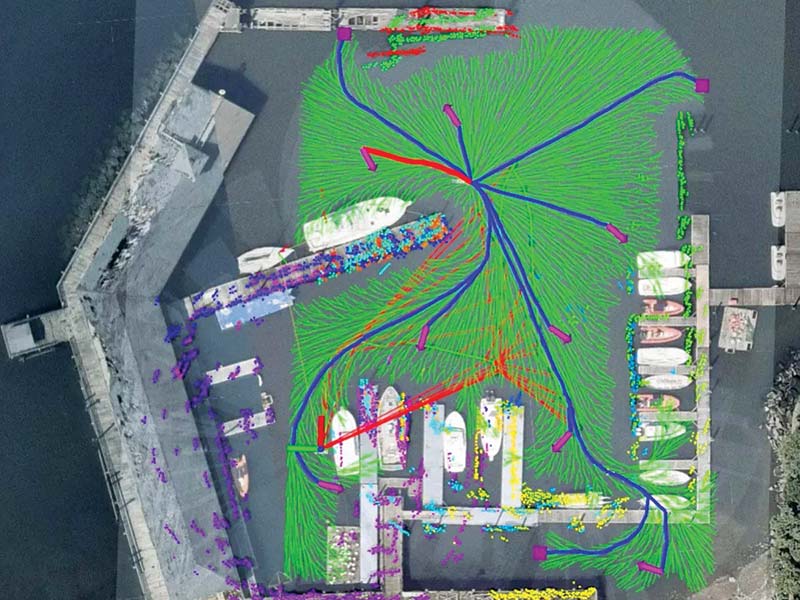

The ocean contains an endless expanse of territory yet to be explored, and mapping out these uncharted waters globally poses a daunting task. Fleets of autonomous underwater robots could be invaluable tools to help with mapping, but these need to be able to navigate cluttered areas while remaining efficient and accurate. A major challenge in mapping underwater environments is the uncertainty of the robot’s position.

In a recent study published in the IEEE Journal of Oceanic Engineering, one research team has developed a novel framework that allows autonomous underwater robots to map cluttered areas with high efficiency and low error rates.

“Because GPS is not available underwater, most underwater robots do not have an absolute position reference, and the accuracy of their navigation solution varies,” explains Brendan Englot, an associate professor of mechanical engineering at the Stevens Institute of Technology, in Hoboken, NJ, who was involved in the study. “Predicting how it will vary as a robot explores uncharted territory will permit an autonomous underwater vehicle to build the most accurate map possible under these challenging circumstances.”

The model created by Englot’s team uses a virtual map that abstractly represents the surrounding area that the robot hasn’t seen yet. They developed an algorithm that plans a route over this virtual map in a way that takes the robot’s localization uncertainty and perceptual observations into account.
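To make the idea of trading exploration against localization uncertainty concrete, here is a minimal sketch of how a planner might score candidate routes over a virtual map. It is not the Stevens team’s formulation: the `CandidatePath` fields, the linear utility, and the `uncertainty_weight` parameter are all hypothetical, chosen only to show how a route that sees less new territory can still win if it keeps the robot’s position estimate tighter.

```python
from dataclasses import dataclass


@dataclass
class CandidatePath:
    """Hypothetical summary of one candidate route over the virtual map."""
    name: str
    expected_new_area_m2: float  # unseen area the sonar is predicted to observe
    predicted_pose_std_m: float  # predicted localization uncertainty at route end


def path_utility(path: CandidatePath, uncertainty_weight: float) -> float:
    # Reward predicted exploration; penalize predicted localization error.
    # The linear form and the weight are illustrative assumptions.
    return path.expected_new_area_m2 - uncertainty_weight * path.predicted_pose_std_m


def choose_path(paths: list[CandidatePath], uncertainty_weight: float = 10.0) -> CandidatePath:
    """Return the highest-utility candidate (ties go to the first one listed)."""
    return max(paths, key=lambda p: path_utility(p, uncertainty_weight))


paths = [
    CandidatePath("fast sweep", expected_new_area_m2=100.0, predicted_pose_std_m=5.0),
    CandidatePath("revisit pier", expected_new_area_m2=70.0, predicted_pose_std_m=1.0),
]
print(choose_path(paths).name)                          # revisit pier (60 beats 50)
print(choose_path(paths, uncertainty_weight=0.0).name)  # fast sweep
```

Setting the weight to zero reduces the planner to pure exploration; raising it makes the robot favor routes that keep its position estimate trustworthy, which is the kind of compromise Englot describes below.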

The perceptual observations are collected using sonar imaging, which detects objects in front of the robot within a 30-meter range and a 120-degree field of view. “We process the imagery to obtain a point cloud from every sonar image. These point clouds indicate where underwater structures are located relative to the robot,” Englot commented.
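Turning a sonar image into a point cloud is, at its simplest, a polar-to-Cartesian conversion. The sketch below assumes an image stored as rows of range bins and columns of bearing bins, spanning the 30 m range and 120-degree field of view quoted above, and a fixed intensity threshold for deciding which cells count as returns. The function name, the data layout, and the threshold are illustrative assumptions; the team’s actual processing pipeline is not described here.

```python
import math


def sonar_image_to_points(image, max_range_m=30.0, fov_deg=120.0, threshold=0.5):
    """Convert a polar sonar image into 2D points in the sonar's frame.

    image[i][j] is the intensity at range bin i (0 = nearest) and bearing bin j.
    Bearing bin 0 maps to -fov/2 and the last bin to +fov/2.
    Cells brighter than `threshold` are treated as returns from structures.
    """
    n_ranges = len(image)
    n_beams = len(image[0])
    if n_beams < 2:
        raise ValueError("need at least two bearing bins")
    half_fov = math.radians(fov_deg) / 2.0
    bearing_step = 2.0 * half_fov / (n_beams - 1)
    points = []
    for i, row in enumerate(image):
        r = (i + 0.5) * max_range_m / n_ranges  # centre of range bin i, in metres
        for j, intensity in enumerate(row):
            if intensity <= threshold:
                continue  # too dim: treat as empty water
            theta = -half_fov + j * bearing_step
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# A tiny 2 x 3 image with one bright return in the far range bin, centre beam.
print(sonar_image_to_points([[0.0, 0.0, 0.0], [0.0, 0.9, 0.0]]))  # [(22.5, 0.0)]
```

A real imaging sonar has hundreds of bins per axis and noisier returns, so practical pipelines typically use adaptive thresholds or feature detectors rather than a single cutoff.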

The research team tested their approach using a BlueROV2 underwater robot in a harbor at Kings Point, NY, an area that Englot says was large enough for significant navigation errors to build up, but small enough to run numerous experimental trials without too much difficulty. The team compared their model to several existing ones, testing each in at least three 30-minute trials in which the robot navigated the harbor. The models were also evaluated in simulation.

“The results revealed that each of the competing [models] had its own unique advantages, but ours offered a very appealing compromise between exploring unknown environments quickly while building accurate maps of those environments,” boasted Englot.

He noted that his team has applied for a patent covering the use of their model in subsea oil and gas production. However, they envision that the model will also be useful for a broader set of applications, such as inspecting offshore wind turbines, offshore aquaculture infrastructure (including fish farms), and civil infrastructure such as piers and bridges.

“Next, we would like to extend the technique to 3D mapping scenarios, as well as situations where a partial map may already exist, and we want a robot to make effective use of that map, rather than explore an environment completely from scratch,” commented Englot. “If we can successfully extend our framework to work in 3D mapping scenarios, we may also be able to use it to explore networks of underwater caves or shipwrecks.”

Flying Fruit Pickers

Developed by Israel-based company Tevel Aerobotics, the Tevel drone system looks like a complex piece of machinery perhaps sent by aliens to lend us a hand by making our fruit harvesting task easier and more efficient.

With harvesting being an exhausting job, it’s becoming more and more difficult to find workers willing to do it. It’s also a costly business that requires you to pay for the pickers’ food, health insurance, housing, transportation, and maybe even visas.

Tevel’s on-demand flying fruit pickers aim to solve all of these problems. Because they need none of the above, they can significantly lower harvesting costs, not to mention that they can work tirelessly around the clock.

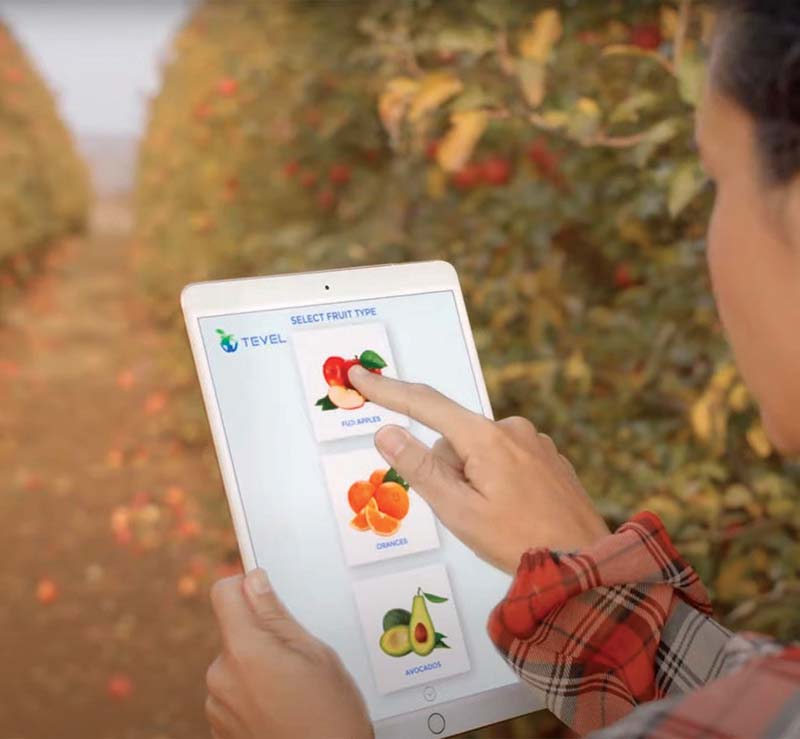

Tevel’s platform is a complex one that consists of both software and hardware components. First, using the company’s harvesting management application, farmers figure out exactly how many robotic pickers they need, when, and for how long, and then inform Tevel’s control center. The fleet of pickers is then deployed to the location according to the customer’s specifications.

The fruit-picking hardware consists of a ground vehicle with tethered drones orbiting around it. These drones carry robotic arms and grippers that are used to grab the fruit from the tree. Software tells the drones which fruit is ripe, so they select, pick, and box only the fruit that is ready for market.

The dedicated app offers real-time updates on the harvesting process, informing users of the time left to completion, the quantity picked, the cost, and so on.

What a Site!

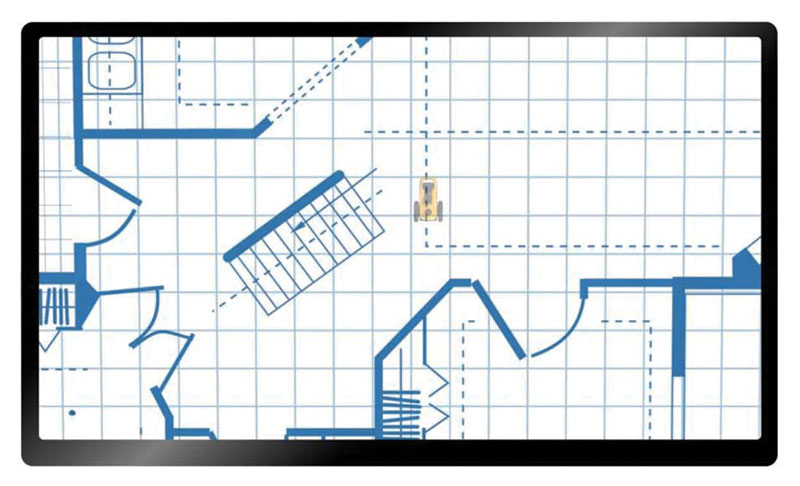

HP has announced SitePrint: an autonomous robot that precisely prints construction site layouts onto the floor. SitePrint will be available to customers in North America through an early access program; the company is planning a wider commercial release of the robot in 2023.

SitePrint was designed to automate the site layout process. It includes a light, compact, autonomous robot; cloud tools to submit and prepare jobs to be printed and manage the fleet; a touch screen tablet for remote control and configuration; and a portfolio of inks for different surfaces, environment conditions, and durability requirements.

The robot can print digital layouts on a variety of surfaces including concrete, tarmac, plywood, pavement, terrazzo, vinyl, and epoxy. It can print over rough surfaces and obstacles up to 2 cm thick, and avoids obstacles on the job site that it can’t paint over.

HP’s SitePrint prints construction layouts with pinpoint accuracy. (Images courtesy of HP.)

“Technology adoption and increased digitization can help construction firms realize productivity gains,” Daniel Martínez, VP and General Manager at HP Large Format Printing, said. “HP has played a key role in bridging digital and physical worlds with print solutions for architects and engineers over the last 30 years. With HP SitePrint, we’re making it faster and easier than ever for construction professionals to bring an idea to life on site, while also providing layout accuracy and reducing costs derived from reworks.”

It’s a Material World

Recent advances in soft robotics have opened the way to smart fibers and textiles with a variety of mechanical, therapeutic, and wearable applications. These fabrics, when programmed to expand or contract through thermal, electric, fluid, or other stimuli, can produce motion, deformation, or force for different functions.

Engineers at the University of New South Wales (UNSW), Sydney, Australia, have developed a new class of fluid-driven smart textiles that can “shape-shift” into 3D structures. Despite recent advances in the development of active textiles, “they are either limited with slow response times due to the requirement of heating and cooling, or difficult to knit, braid, or weave in the case of fluid-driven textiles,” says Thanh Nho Do, senior lecturer at UNSW’s Graduate School of Biomedical Engineering, who led the study.

The UNSW team’s smart textile enables fabric reconfiguration that can produce shape-morphing structures such as this butterfly and flower, which can move using hydraulics. (Photo courtesy of University of New South Wales.)

To overcome these drawbacks, the UNSW team demonstrated a proof of concept of miniature, fast-responding artificial muscles made of long, fluid-filled silicone tubes that are driven by hydraulic pressure. Each silicone tube is surrounded by an outer helical coil that acts as a constraint layer, keeping it from expanding like a balloon. Because of this outer constraint, only axial elongation is possible, so the muscle expands when hydraulic pressure increases and contracts when pressure decreases. According to Do, this mechanism lets them program a wide range of motion by changing the hydraulic pressure.

“A unique feature of our soft muscles compared to others is that we can tune their generated force by varying the stretch ratio of the inner silicone tube at the time they are fabricated, which provides high flexibility for use in specific applications,” Do commented.

The researchers used a simple, low-cost fabrication technique in which a long, thin silicone tube is directly inserted into a hollow microcoil to produce the artificial muscles, with a diameter ranging from a few hundred micrometers to several millimeters. “With this method, we could mass-produce soft artificial muscles at any scale and size — diameter could be down to 0.5 millimeters and length at least five meters,” Do stated.

The combination of hydraulic actuation, fast response times, light weight, small size, and high flexibility makes UNSW’s smart textiles versatile and programmable. According to Do, the expansion and contraction of their active fabrics are similar to those of human muscle fibers.

This versatility opens up potential applications in soft robotics including shape-shifting structures, biomimicking soft robots, locomotion robots, and smart garments. There are possibilities for use as medical/therapeutic wearables, as assistive devices for those needing help with movement, and as soft robots to aid the rescue and recovery of people trapped in confined spaces.

Although these artificial muscles are still a proof of concept, Do is optimistic about commercialization in the near future.

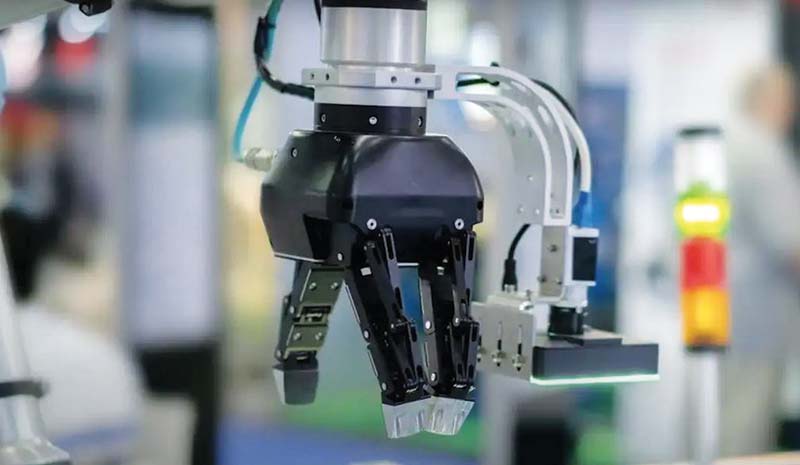

So Touchy

GelSight has announced the release of its GelSight Mini: an artificial intelligence (AI) powered 3D sensor that can give robots a sense of touch. The sensor is small enough to be comfortable in human hands and strong enough for use in robots and cobots. It takes just five minutes out of the box for the sensor to produce shareable results.

“GelSight Mini is a first-of-its-kind, affordable, and compact tactile sensor with an easy plug-and-play setup that lets users get to work within five minutes of taking the device out of the box,” Dennis Lang, Vice President of Product at GelSight, said. “We believe that GelSight Mini will reduce the barrier of entry into robotics and touch-based scanning for corporate research and development, academics, and hobbyists, while opening doors to new terrain such as the Metaverse.”

GelSight set out to make a sensor that would give roboticists more flexibility than any other on the market. The company’s sensor makes digital 2D and 3D mapping available to roboticists, and its spatial resolution exceeds that of human touch. This gives researchers optimized images of material surfaces that can be useful across a broad set of industries.

The GelSight Mini relies on data captured by GelSight’s elastomeric tactile sensing platform, which leverages the Robot Operating System (ROS), PyTouch, and Python. This allows users to create detailed and accurate surface characterizations that are directly compatible with familiar industry-standard software environments.

ROS compatibility, frame grabbers, and Python scripts are all provided by the company, allowing users to get started right away with unique AI and computer vision tasks, including directly creating digital twins of items to be picked using the sensor.

GelSight Mini can be used in a range of applications, from industrial-style two-finger grippers to bionic hand research and development. The sensor’s compact design, along with the 3D CAD files of integration adapters that GelSight provides, makes it easy to install in an existing system.

Different Pick Up Lines

Researchers at the University of Washington have developed a tool that can design 3D-printable passive grippers so that robots can more easily switch between different tasks.

Many robots are tied to a single job and are unable to switch gears if needed to perform a task outside of their usual one. University of Washington researchers are hoping to address this issue by creating a system that can design passive grippers, so the robot can switch out grippers and perform a task with new objects.

The 22 objects that the University of Washington team tested its grippers on. (Image courtesy of University of Washington.)

To design the grippers, the team provides the computer with a 3D model of the object it’s going to pick up and its orientation in space. The team’s algorithms then generate possible grasp configurations and rank them based on stability and other metrics.

Next, the computer picks the best option and co-optimizes it to see whether an insert trajectory is possible. If it can’t find one, it moves on to the next option until it finds one that works. When it does, the computer outputs instructions for a 3D printer to create the gripper, along with the trajectory the robot arm should follow to pick up the object.
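The rank-then-try loop described above can be sketched in a few lines. This is a minimal illustration, not the UW team’s code: the `Grasp` class, its `score` field, and the `find_insert_trajectory` callback (standing in for the co-optimization step, returning `None` when no trajectory exists) are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")  # whatever type the trajectory search returns


@dataclass
class Grasp:
    """Hypothetical candidate grasp configuration with its ranking score."""
    name: str
    score: float  # higher is better (e.g. more stable)


def design_gripper(
    candidates: Sequence[Grasp],
    find_insert_trajectory: Callable[[Grasp], Optional[T]],
) -> Optional[Tuple[Grasp, T]]:
    """Try grasps from best to worst; return the first with a feasible insert trajectory."""
    for grasp in sorted(candidates, key=lambda g: g.score, reverse=True):
        trajectory = find_insert_trajectory(grasp)
        if trajectory is not None:
            # First feasible grasp wins: its geometry would go to the 3D printer
            # and its trajectory to the robot arm.
            return grasp, trajectory
    return None  # no candidate admits a feasible insert trajectory


candidates = [Grasp("side", 0.5), Grasp("top", 0.9), Grasp("rim", 0.7)]
feasible = {"side": ["approach", "insert"], "rim": ["approach", "insert"]}
best = design_gripper(candidates, lambda g: feasible.get(g.name))
print(best[0].name)  # rim: "top" ranks higher but has no insert trajectory
```

Passing the trajectory search in as a callback keeps the ranking loop independent of how feasibility is actually computed, which in the real system is the expensive optimization step.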

The researchers tested the system on 22 different objects and successfully picked up 20 of them. For each shape, the researchers did 10 pickup tests. For 16 of the shapes, all 10 tests were successful.

The robot was unable to pick up two of the objects because of errors in the 3D models given to the computer. The first object (a bowl) was modeled with thinner sides than it really had, and the second (a cup with an egg-shaped handle) was incorrectly oriented.

The system excelled at picking up objects that vary in width or have protruding edges, and struggled with uniformly smooth surfaces like a water bottle.

Even without any human intervention, the algorithm developed the same gripping strategies for similarly shaped objects. This has led the researchers to believe that they may be able to create passive grippers that pick up a class of objects rather than a specific object.

“We still produce most of our items with assembly lines, which are really great but also very rigid. The pandemic showed us that we need to have a way to easily repurpose these production lines,” said senior author Adriana Schulz, a UW assistant professor in the Paul G. Allen School of Computer Science & Engineering. “Our idea is to create custom tooling for these manufacturing lines. That gives us a very simple robot that can do one task with a specific gripper. And then when I change the task, I just replace the gripper.”
