Bots in Brief (03.2020)

A Little Ingenuity

Tucked under the belly of the Perseverance rover that landed on Mars is a little helicopter called Ingenuity. Its body is the size of a box of tissues, slung underneath a pair of 1.2 m carbon fiber rotors on top of four spindly legs. It weighs just 1.8 kg, but the importance of its mission is massive. If everything goes according to plan, Ingenuity will become the first aircraft to fly on Mars.

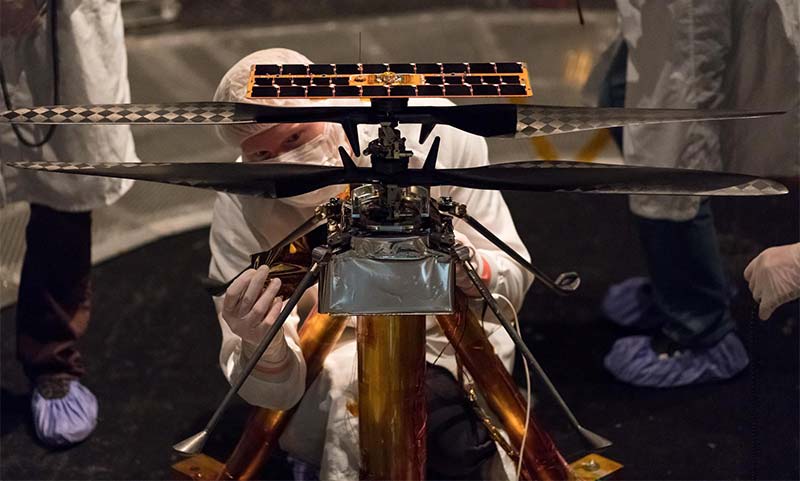

NASA engineers modifying the flight model of the Mars helicopter inside the Space Simulator at NASA JPL.

For this to work, Ingenuity has to survive frigid temperatures, manage merciless power constraints, and attempt a series of 90-second flights while separated from Earth by 10 light-minutes, so real-time communication or control is impossible.
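The scale of that delay is easy to check. The short Python calculation below uses only the 10 light-minute separation and the 90-second flight length quoted above, plus the speed of light; the real Earth-Mars distance changes constantly, so treat this as a rough illustration at that one separation.

```python
# Back-of-the-envelope check: why Ingenuity can't be flown from Earth in real time.
SPEED_OF_LIGHT_M_PER_S = 299_792_458

one_way_delay_s = 10 * 60            # 10 light-minutes, as quoted in the text
round_trip_delay_s = 2 * one_way_delay_s
flight_duration_s = 90               # one planned flight

distance_km = SPEED_OF_LIGHT_M_PER_S * one_way_delay_s / 1000
round_trip_vs_flight = round_trip_delay_s / flight_duration_s

print(f"Earth-Mars distance at 10 light-minutes: {distance_km:,.0f} km")
print(f"Command round trip: {round_trip_delay_s} s")
print(f"A round trip lasts {round_trip_vs_flight:.1f} times as long as a whole flight")
```

Even a single command and its acknowledgment would take 20 minutes, long after a 90-second flight has ended, which is why every flight has to run autonomously.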

It’s important to keep this helicopter mission in context because this is a technology demonstration. The primary goal here is to fly on Mars, full stop. Ingenuity won’t be doing any of the same sort of science that the Perseverance rover is designed to do. If we’re lucky, the helicopter will take a couple of in-flight pictures, but that’s about it.

NASA’s Ingenuity Mars helicopter viewed from below, showing its laser altimeter and navigation camera.

The value of the mission lies in showing that flight on Mars is possible, and in collecting data that will enable the next generation of Martian rotorcraft, which will be able to do more ambitious and exciting things.

Everything about Ingenuity is already inherently complicated, even without adding specific tasks. Flying a helicopter on Mars is incredibly challenging for a number of reasons, including the very thin atmosphere (just 1% as dense as Earth’s), the power requirements, and the communications limitations.

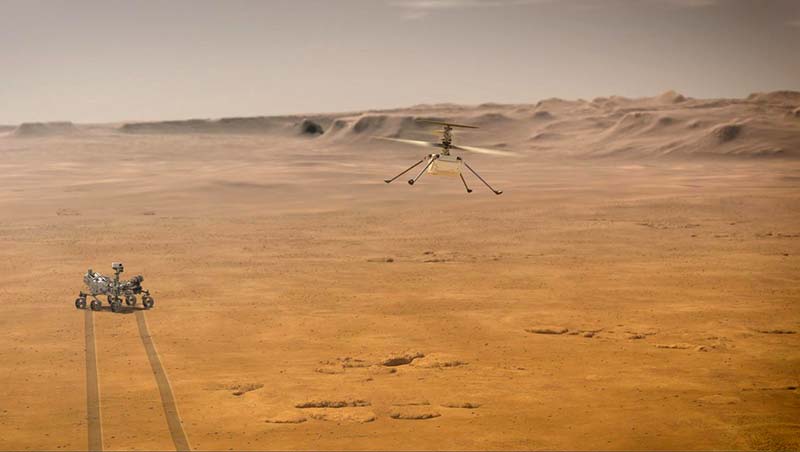

An artist’s illustration of Ingenuity flying on Mars. Graphics courtesy of NASA/JPL-Caltech.

Something Fishy

The instinctive movements of a school of fish darting in a kind of synchronized ballet have inspired researchers at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and the Wyss Institute for Biologically Inspired Engineering. The results could improve the performance and dependability of not just underwater robots, but other vehicles that require decentralized locomotion and organization such as self-driving cars and robotic space exploration.

The fish collective called Blueswarm was created by a team led by Radhika Nagpal, whose lab is a pioneer in self-organizing systems. The oddly adorable robots can sync their movements like biological fish, taking cues from their plastic-bodied neighbors with no external controls required. Nagpal recently told IEEE Spectrum that this marks a milestone, demonstrating complex 3D behaviors with implicit coordination in underwater robots.

“Insights from this research will help us develop future miniature underwater swarms that can perform environmental monitoring and search in visually-rich but fragile environments like coral reefs,” Nagpal said. “This research also paves a way to better understand fish schools by synthetically recreating their behavior.”

The research is published in Science Robotics, with Florian Berlinger as first author. Berlinger said the “Bluebot” robots integrate a trio of blue LED lights, a lithium-polymer battery, a pair of cameras, a Raspberry Pi computer, and four controllable fins within a 3D-printed hull. The robots’ fisheye-lens cameras detect the LEDs of their fellow fish, and a custom algorithm uses those detections to calculate each neighbor’s distance, direction, and heading.
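The article doesn’t spell out the team’s algorithm, but the basic geometry of ranging from LEDs can be sketched. The Python below is a minimal sketch assuming an ideal pinhole camera and two LEDs mounted a known distance apart on a neighbor; the helper name, LED spacing, and focal length are hypothetical, it estimates only distance and direction (not heading), and real fisheye images would need to be undistorted first.

```python
import math

def estimate_neighbor(led_a_px, led_b_px, focal_px, led_spacing_m, image_center_x):
    """Estimate range and bearing to a neighbor from two of its LEDs.

    Hypothetical helper assuming an ideal pinhole camera and two LEDs stacked a
    known distance apart on the neighbor (fisheye images need undistorting first).
    """
    (xa, ya), (xb, yb) = led_a_px, led_b_px
    pixel_gap = math.hypot(xb - xa, yb - ya)
    # Similar triangles: the farther away the LEDs are, the closer together they appear.
    distance_m = focal_px * led_spacing_m / pixel_gap
    # Horizontal offset of the LED pair from the image center gives the bearing.
    mid_x = (xa + xb) / 2
    bearing_rad = math.atan2(mid_x - image_center_x, focal_px)
    return distance_m, bearing_rad

# Example: LEDs 8 cm apart appear 24 px apart to a camera with a 300 px focal length.
d, b = estimate_neighbor((320, 228), (320, 252), focal_px=300,
                         led_spacing_m=0.08, image_center_x=320)
print(f"{d:.2f} m away, bearing {math.degrees(b):.1f} degrees")
```

Because range falls out of a ratio of pixel sizes, each robot needs nothing but its own camera image to locate its neighbors, which fits the “no external controls” design described above.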

Based on that simple production and detection of LED light, the team proved that Blueswarm could self-organize behaviors, including aggregation, dispersal, and circle formation, in which the robots swim together in a synchronized clockwise loop. Researchers also simulated a successful search mission, an autonomous Finding Nemo if you will. Using their dispersion algorithm, the robot school spread out until one robot could detect a red light in the tank. Its blue LEDs then flashed, triggering the aggregation algorithm to gather the school around it. Such a robot swarm might prove valuable in search-and-rescue missions at sea, covering miles of open water and reporting back to its mates.
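The search mission combines two simple rules with one trigger. The Python below is a hypothetical sketch of that logic, not the Blueswarm code: it assumes each robot already knows its neighbors’ relative positions, steers toward their centroid to aggregate or away from it to disperse, and uses the red light and a neighbor’s flashing LEDs as the switch between modes. The function names and the “hold” mode for the robot that finds the target are illustrative assumptions.

```python
import math

def choose_heading(neighbors, mode):
    """Pick a unit heading (dx, dy) for one robot from its neighbors' relative positions.

    neighbors: (dx, dy) offsets of visible neighbors, relative to this robot.
    mode: "aggregate" swims toward the neighbors' centroid, "disperse" swims away,
    and "hold" stays put (used here for the robot that found the target).
    """
    if mode == "hold" or not neighbors:
        return (0.0, 0.0)  # holding, or nobody in view: stay in place
    cx = sum(dx for dx, _ in neighbors) / len(neighbors)
    cy = sum(dy for _, dy in neighbors) / len(neighbors)
    norm = math.hypot(cx, cy)
    if norm == 0.0:
        return (0.0, 0.0)  # neighbors perfectly balanced around this robot
    sign = 1.0 if mode == "aggregate" else -1.0  # anything else is treated as "disperse"
    return (sign * cx / norm, sign * cy / norm)

def update_search_state(mode, sees_red_light, sees_flashing_neighbor):
    """Disperse until the target is found, then gather around the finder.

    Returns (new_mode, flash_leds).
    """
    if sees_red_light:
        return "hold", True        # found the target: stop and flash blue LEDs
    if sees_flashing_neighbor:
        return "aggregate", False  # a neighbor found it: switch to aggregation
    return mode, False

# One robot with two neighbors ahead of it on the x axis.
print(choose_heading([(2.0, 0.0), (2.0, 0.0)], "aggregate"))
print(choose_heading([(2.0, 0.0), (2.0, 0.0)], "disperse"))
print(update_search_state("disperse", sees_red_light=False, sees_flashing_neighbor=True))
```

Note that no robot needs a map or a leader: every decision depends only on what that robot can see, which is what lets the behavior scale to a whole school.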

There’s also a Wi-Fi module to allow uploading new behaviors remotely. The lab’s previous efforts include a 1,000-strong army of “Kilobots” and a robotic construction crew inspired by termites. Both projects operated in two-dimensional space. However, a 3D environment like air or water posed a tougher challenge for sensing and movement.

Berlinger added that the research could one day translate to anything that requires decentralized robots, from self-driving cars and Amazon warehouse vehicles to exploration of faraway planets where high latency makes it impossible to transmit commands quickly.

The miniature robots could also work long hours in places that are inaccessible to humans and divers, or even large tethered robots. Nagpal said the synthetic swimmers could monitor and collect data on reefs or underwater infrastructure 24/7, and work into tiny places without disturbing fragile equipment or ecosystems.

“If we could be as good as fish in that environment, we could collect information and be non-invasive in cluttered environments where everything is an obstacle,” Nagpal said.

These fish-inspired robots can synchronize their movements without any outside control.

Photo courtesy of Self-organizing Systems Research Group/Harvard John A. Paulson School of Engineering and Applied Sciences.

Policing Robots

It looks like 2021 will be the beginning of the era of robotic law enforcement. A growing number of police departments around the country are purchasing robots for police work, and as this behavior becomes normalized, major concerns are starting to arise.

The NYPD purchased a robot dog earlier this year that is apparently capable of opening doors. The same kind of robot police dog has been tested by the Massachusetts State Police. The use of drones by police departments has skyrocketed during the COVID-19 pandemic. Police departments around the country have also purchased the weeble-wobble-looking Knightscope robot, which apparently enjoys running over children’s feet and ignoring people who need help.

NYPD/Instagram.

Matthew Guariglia, a policy analyst at the Electronic Frontier Foundation, commented that the kinds of robots we’re starting to see police departments use are mostly for show, but that doesn’t mean there isn’t cause for concern.

“We’ve seen from the inside of the companies selling these robots that most of what they’re selling them is based on social media engagement and good press for your department,” Guariglia says. “Very little of it is about the actual policing the robot can do. I think by slowly introducing these robots as fun novelties that you can take selfies with, it is absolutely going to normalize a type of policing that is done by robot and by algorithm.”

A robot police dog might be cute, and it’s funny when a robotic cop falls into a fountain, but these machines could be bringing us closer to something much more pernicious. Guariglia says we could soon see a proliferation of different kinds of police robots, and accountability could become a major problem.

“I worry about when we move out of the stage where police robots are just photo opportunities. We’re going to eventually have to confront the scenario in which robots that police have to make decisions, and when the time comes that a police robot makes the wrong decision — somebody gets hurt or the wrong person gets arrested — police robots are not people,” Guariglia says. “You can’t reprimand them.”

What if a robot falsely identifies someone as a criminal and gets them arrested? Who will be held responsible for that? You can’t fire a robot or charge it with a crime.

Guariglia also notes that these robots can easily be outfitted with all kinds of surveillance technology and they could become “roving surveillance towers.” He says a robot might be assigned to a high-crime neighborhood to conduct near-constant surveillance and call the police when it suspects it’s identified a criminal, whether it has or not.

Furthermore, Guariglia says we might not be too far away from police robots starting to carry weapons, which would further complicate things. He notes that a police robot was already equipped with C4 and used to kill a mass shooter in Dallas in 2016, so it’s not unreasonable to assume we could see police robots equipped with weapons in the not-too-distant future.

“In extenuating circumstances, police are more than willing to impromptu weaponize robots, and we’ve already seen proposals over the years to put tasers or pepper spray on little flying drones, so it would not surprise me at all if we saw robot police dogs at protests that are equipped with pepper spray on their backs,” Guariglia says.

One possible solution to this problem would be communities preventing police departments from getting these kinds of robots in the first place. A city could adopt a Community Control Over Police Surveillance (CCOPS) ordinance, which would require the police department to submit a request to the city council outlining what kind of technology it hopes to purchase before it is allowed to buy it. Guariglia says that police departments might be less inclined to purchase this kind of technology if they had to get approval from the public.

The Big Sleep

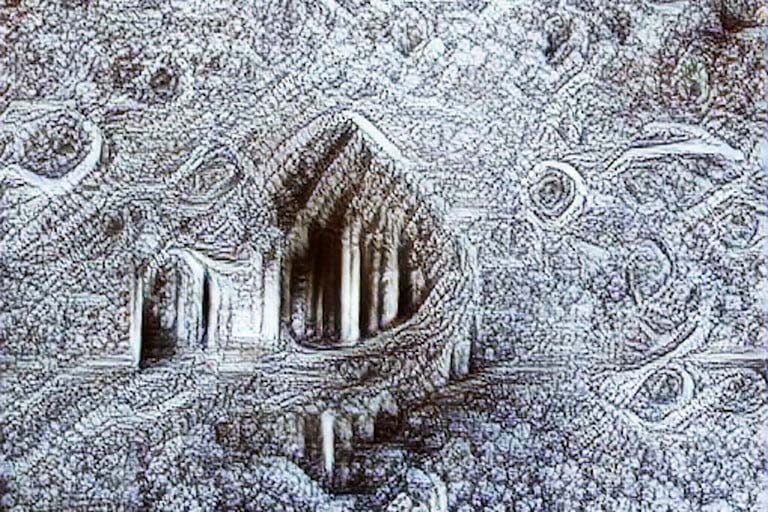

The picture above is “an intricate drawing of eternity” and it’s the work of BigSleep: the latest amazing example of generative artificial intelligence (AI) in action.

A bit like a visual version of the text-generating AI model GPT-3 (Generative Pre-trained Transformer 3, an autoregressive language model that uses deep learning to produce human-like text), BigSleep is capable of taking any text prompt and visualizing an image to fit the words. This can be something esoteric like eternity, or it could be a bowl of cherries or a beautiful house. Think of it like a Google Images search, only for pictures that have never previously existed.

“At a high level, BigSleep works by combining two neural networks: BigGAN and CLIP,” Ryan Murdock, BigSleep’s 23-year-old creator — a student studying cognitive neuroscience at the University of Utah — recently told Digital Trends.

The first of these, BigGAN, is a system created by Google that takes in random noise and outputs images. BigGAN is a generative adversarial network: a pair of dueling neural networks that carry out what Murdock calls an “adversarial tug-of-war” between an image-generating network and a discriminator network. Over time, the interaction between the generator and discriminator results in improvements being made to both neural networks.

Meanwhile, CLIP is a neural net made by OpenAI that has been taught to match images and descriptions. Give CLIP text and images, and it will attempt to figure out how well they match and give them a score accordingly.

Murdock explained that by combining the two, BigSleep searches through BigGAN’s outputs for images that maximize CLIP’s scoring. It then slowly tweaks the noise input to BigGAN’s generator until CLIP says that the images produced match the description. Generating an image to match a prompt takes about three minutes in total.
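The search Murdock describes is an optimization over the generator’s input. The Python sketch below illustrates only that idea under heavy simplification: `toy_generator` and `toy_score` are hypothetical stand-ins for BigGAN and CLIP (a tanh squashing function and a cosine similarity over tiny vectors), the “text embedding” is an invented four-number vector, and the finite-difference gradient ascent is an assumed update rule, not BigSleep’s actual code.

```python
import math

def toy_generator(z):
    """Hypothetical stand-in for BigGAN: maps a latent vector to an 'image' vector."""
    return [math.tanh(v) for v in z]

def toy_score(image, text_embedding):
    """Hypothetical stand-in for CLIP: cosine similarity between the two vectors."""
    dot = sum(a * b for a, b in zip(image, text_embedding))
    norm_image = math.sqrt(sum(a * a for a in image))
    norm_text = math.sqrt(sum(b * b for b in text_embedding))
    return dot / (norm_image * norm_text)

def optimize_latent(text_embedding, steps=100, learning_rate=0.5, eps=1e-4):
    """Nudge the generator's input so the scorer rates its output higher."""
    z = [0.1] * len(text_embedding)  # fixed starting point instead of random noise
    for _ in range(steps):
        base = toy_score(toy_generator(z), text_embedding)
        # Finite-difference estimate of how the score changes with each latent value.
        grad = []
        for i in range(len(z)):
            nudged = list(z)
            nudged[i] += eps
            grad.append((toy_score(toy_generator(nudged), text_embedding) - base) / eps)
        # Gradient ascent: move the latent in the direction that raises the score.
        z = [zi + learning_rate * g for zi, g in zip(z, grad)]
    return z

target = [1.0, -1.0, 0.5, 0.2]  # pretend this is the embedding of a text prompt
start_score = toy_score(toy_generator([0.1] * 4), target)
z = optimize_latent(target)
final_score = toy_score(toy_generator(z), target)
print(f"score before: {start_score:.3f}, after: {final_score:.3f}")
```

The key design point survives the simplification: neither network is retrained. Only the generator’s input changes, and the scorer acts as the judge of how well each candidate image fits the words.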

“BigSleep is significant because it can generate a wide variety of concepts and objects fairly well at 512 x 512 pixel resolution,” Murdock said. “Previous work has produced impressive results, but, by my knowledge, much of it has been restricted to lower-resolution images and more everyday objects.”

BigSleep isn’t the first AI used to generate images. Its name is reminiscent of DeepDream, an AI created by Google engineer Alex Mordvintsev that creates psychedelic imagery using image classification models.

A GAN-based system was also used to create an AI painting that sold at auction in 2018 for a massive $432,500.

To try out BigSleep for yourself, Murdock suggested checking out his Google Colab notebook for the project. There’s a bit of a learning curve involving the Colab interface and a few other steps, but it’s free to take for a spin.

Other ways of testing it will most likely open up soon. If you’re interested, you can also visit r/MediaSynthesis (https://www.reddit.com/r/MediaSynthesis), where users are posting some of the best images they’ve generated with the system so far.

Name That Tune

From Journey’s “Don’t Stop Believin’” to Queen’s “Bohemian Rhapsody” to Kylie Minogue’s “Can’t Get You Out Of My Head,” there are some songs that manage to successfully worm their way down our ear canals and take up residence in our brains. What if it was possible to read the brain’s signals, and to use these to accurately guess which song a person is listening to at any given moment?

That’s what researchers from the Human-Centered Design department at Delft University of Technology in the Netherlands and the Cognitive Science department at the Indian Institute of Technology Gandhinagar have been working on. In a recent experiment, they demonstrated that it’s eminently possible to do this, and the implications could be more significant than you might think.

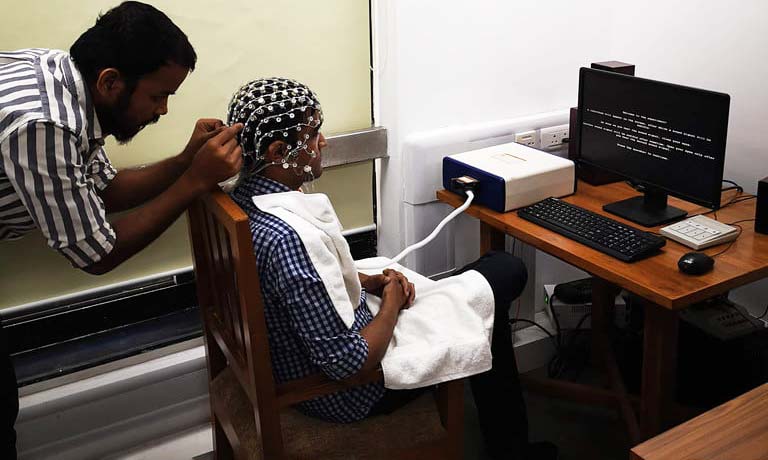

For the study, the researchers recruited 20 people and asked them to listen to 12 songs through headphones. To help them focus, the room was darkened and the volunteers were blindfolded. Each was fitted with an electroencephalography (EEG) cap that noninvasively picked up the electrical activity on their scalp as they listened to the songs.

This brain data, alongside the corresponding music, was then used to train an artificial neural network to identify links between the two. When the resulting algorithm was tested on data it hadn’t seen before, it correctly identified the song with 85% accuracy, based entirely on the brain waves.

“The songs were a mix of Western and Indian songs, and included a number of genres,” Krishna Miyapuram, assistant professor of cognitive science and computer science at the Indian Institute of Technology Gandhinagar, explained. “This way, we constructed a larger representative sample for training and testing. The approach was confirmed when obtaining impressive classification accuracies, even when we limited the training data to a smaller percentage of the dataset.”

This isn’t the first time that researchers have shown it’s possible to carry out “mind-reading” demonstrations that would make David Blaine jealous, all using EEG data. For instance, neuroscientists at Canada’s University of Toronto Scarborough have previously used EEG data to digitally re-create face images stored in a person’s mind. Miyapuram’s own previous research includes a project in which EEG data was used to identify movie clips viewed by participants, with each one intended to provoke a different emotional response.

Interestingly, this latest work showed that the algorithm was very effective at guessing the songs being listened to by one participant after being trained on that person’s specific brain. However, it didn’t work so well when applied to another person. In fact, “not so well” meant accuracy dropped from 85% to less than 10%.
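The train-on-one-person, test-on-unseen-trials setup can be illustrated with a much simpler classifier than the team’s neural network. The Python below swaps in a nearest-centroid classifier and runs it on synthetic, made-up feature vectors standing in for one participant’s EEG recordings; the song names, feature values, and noise level are all hypothetical and say nothing about the study’s real data.

```python
import math
import random

def train_centroids(features, labels):
    """Average the training trials for each song into one centroid per song."""
    sums, counts = {}, {}
    for x, song in zip(features, labels):
        if song not in sums:
            sums[song] = [0.0] * len(x)
            counts[song] = 0
        sums[song] = [s + v for s, v in zip(sums[song], x)]
        counts[song] += 1
    return {song: [s / counts[song] for s in sums[song]] for song in sums}

def predict(centroids, x):
    """Guess the song whose centroid is nearest to this trial's features."""
    return min(centroids, key=lambda song: math.dist(centroids[song], x))

def make_fake_trials(song_patterns, trials_per_song, noise, rng):
    """Synthetic stand-in for one participant's EEG features (not real data)."""
    features, labels = [], []
    for song, pattern in song_patterns.items():
        for _ in range(trials_per_song):
            features.append([v + rng.gauss(0.0, noise) for v in pattern])
            labels.append(song)
    return features, labels

rng = random.Random(42)
patterns = {"song_a": [0.0, 0.0, 0.0], "song_b": [10.0, 0.0, 0.0], "song_c": [0.0, 10.0, 0.0]}
train_x, train_y = make_fake_trials(patterns, trials_per_song=20, noise=1.0, rng=rng)
test_x, test_y = make_fake_trials(patterns, trials_per_song=10, noise=1.0, rng=rng)

centroids = train_centroids(train_x, train_y)
correct = sum(predict(centroids, x) == y for x, y in zip(test_x, test_y))
accuracy = correct / len(test_y)
print(f"held-out accuracy: {accuracy:.0%}")
```

Any model like this learns where one person’s responses sit in feature space. If another person’s responses to the same songs sit elsewhere, those learned centroids would point to the wrong answers, which is one plausible way to read the drop in cross-participant accuracy.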

New Hyundai Bots

Let’s take a look at what Hyundai Motor Group (HMG), the new owner of Boston Dynamics, has been up to recently. The first robot is DAL-e, which HMG is calling an “Advanced Humanoid Robot.”

Hyundai Motor Group’s “Advanced Humanoid Robot” DAL-e.

According to Hyundai, DAL-e is “designed to pioneer the future of automated customer services,” and is equipped with “state-of-the-art artificial intelligence technology for facial recognition as well as an automatic communication system based on a language-comprehension platform.” You’ll find it in car showrooms, but only in Seoul for now.

The other new robot is TIGER (Transforming Intelligent Ground Excursion Robot).

Hyundai Motor Group’s TIGER (Transforming Intelligent Ground Excursion Robot).

It’s been argued that adding wheels can make legged robots faster and more efficient, but we’re not sure it works all that well going the other way (adding legs to wheeled robots). Rather than adding a little complexity to get a multi-modal system that you can use much of the time, you’re instead adding a lot of complexity to get a multi-modal system that you’re going to use only sometimes. Or something like that.

One could argue, as perhaps Hyundai would, that the multi-modal system is critical to getting TIGER to do what the company wants it to do, which seems to be primarily remote delivery. Hyundai mentions operating in urban areas as well, where TIGER could use its legs to climb stairs, but there it could be beaten by more traditional wheeled platforms (or even whegged platforms) that are almost as capable while being much simpler and cheaper. For remote delivery, though, legs might be a necessary feature.

The TIGER concept could be integrated with a drone to transport it from place to place. Why not just use the drone to make the remote delivery instead? Maybe if you’re dealing with a thick tree canopy, the drone could drop TIGER off in a clearing and the robot could drive to its destination, but that’s now a very complex system for a very specific use.

The best part about these robots from Hyundai is that between the two of them, they suggest that the company is serious about developing commercial robots, as well as willing to invest in something that seems a little crazy. Just like Boston Dynamics.

What the Smell?

Research into robotic sensing has been very human-centric. Most of us navigate and experience the world visually and in 3D, so robots tend to get covered with things like cameras and LiDAR. Touch and sound are important as well, so robots are getting pretty good at understanding tactile and auditory information. But what about smell? For us humans, smell usually doesn’t convey nearly as much information, so it certainly isn’t the sensing modality of choice in robotics.

Dogs, rats, vultures, and other animals make good use of smell, but how does one go about researching this from a technical perspective? How can a robot actually put this kind of sensing system to use? Enter the Smellicopter.

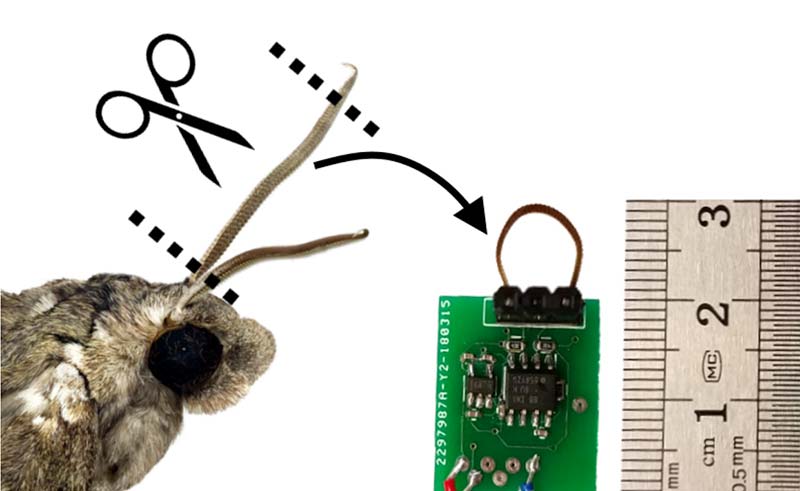

That fuzzy little loop is a moth’s antenna. Photo courtesy of Mark Stone/University of Washington.

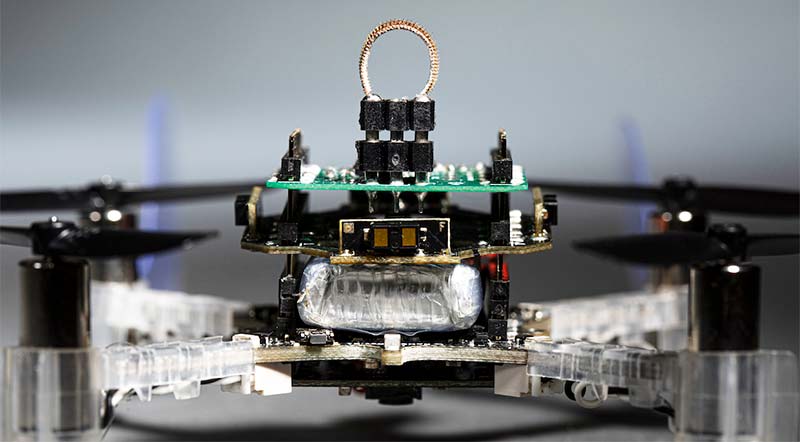

The drone itself comes from an open-source drone project called Crazyflie 2.0 (https://www.bitcraze.io/products/crazyflie-2-1) and has some additional off-the-shelf sensors for obstacle avoidance and stabilization. Interesting additions include a couple of passive fins that keep the drone pointed into the wind and, most importantly, a sensor called an electroantennogram.

The drone’s sensor, called an electroantennogram, consists of a “single excised antenna” from a Manduca sexta hawkmoth and a custom signal processing circuit. Photo courtesy of UW.

To make one of these sensors, you just “harvest” an antenna from a live hawkmoth. Obligingly, the moth antenna is hollow, meaning that you can stick electrodes up it. Whenever the olfactory neurons in the antenna (which is still technically alive even though it’s not attached to the moth anymore) encounter an odor that they’re looking for, they produce an electrical signal that the electrodes pick up.

Plug the other ends of the electrodes into a voltage amplifier and filter, run it through an analog-to-digital converter, and you’ve got a chemical sensor that weighs just 1.5 grams and consumes only 2.7 mW of power. It’s significantly more sensitive than a conventional metal-oxide odor sensor in a much smaller and more efficient form factor, making it ideal for drones.
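Once the antenna signal has been digitized, turning it into “odor” or “no odor” is a signal-processing step. The Python below is a minimal sketch, not the UW team’s circuit: it assumes digitized, already-amplified samples, uses a digital moving average in place of the analog filter, and reports each new rise above a hypothetical threshold as a fresh odor encounter. The window and threshold values are illustrative only.

```python
def detect_odor_hits(samples, baseline, window=4, threshold=0.5):
    """Return sample indices where a new odor response begins.

    samples: digitized antenna voltages (after amplification), in volts.
    baseline: resting voltage with no odor present.
    """
    hits = []
    above = False
    for i in range(window - 1, len(samples)):
        # Moving average as a simple low-pass filter against electrical noise.
        smoothed = sum(samples[i - window + 1 : i + 1]) / window
        response = abs(smoothed - baseline)
        if response > threshold and not above:
            hits.append(i)  # rising edge: a fresh whiff of odor
        above = response > threshold
    return hits

# Two idealized odor pulses separated by clean air.
trace = [0.0] * 10 + [2.0] * 6 + [0.0] * 10 + [2.0] * 6 + [0.0] * 4
print(detect_odor_hits(trace, baseline=0.0))  # → [11, 27]
```

Reporting only rising edges matters for navigation: the search strategy reacts to each new detection, not to every sample taken while the drone sits inside an odor plume.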

To localize an odor, the Smellicopter uses a simple bio-inspired approach called crosswind casting which involves moving laterally left and right and then forward when an odor is detected. Here’s how it works.

The vehicle takes off to a height of 40 cm and then hovers for 10 seconds to allow it time to orient upwind. The Smellicopter then starts casting left and right crosswind. When a volatile chemical is detected, the Smellicopter will surge 25 cm upwind and then resume casting. As long as the wind direction is fairly consistent, this strategy will bring the insect (or robot) closer to a single source with each surge.
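The cast-and-surge loop is simple enough to simulate. The Python below is a minimal 2D sketch, assuming a steady wind, a plume modeled as a straight strip of fixed width directly downwind of the source, and a robot that has already taken off and oriented upwind. The 25 cm surge comes from the text; the plume width, cast width, and step size are hypothetical values.

```python
def crosswind_cast(source, plume_half_width=0.1, cast_half_width=0.5,
                   cast_step=0.05, surge=0.25, max_steps=1000):
    """Simulate crosswind casting in 2D and return the path as (x, y) points in meters.

    Wind blows toward -y, so "upwind" is +y. The robot starts at (0, 0) after
    takeoff and hovering, and the odor plume is a straight strip downwind of the source.
    """
    source_x, source_y = source
    x, y = 0.0, 0.0
    direction = 1.0
    was_in_plume = False
    path = [(x, y)]
    for _ in range(max_steps):
        if y >= source_y:
            break  # reached the source's upwind position
        in_plume = abs(x - source_x) <= plume_half_width
        if in_plume and not was_in_plume:
            y += surge  # new detection: surge upwind
        else:
            x += direction * cast_step  # no new detection: keep casting crosswind
            if abs(x) >= cast_half_width:
                direction = -direction  # reverse at the edge of the cast
        was_in_plume = in_plume
        path.append((x, y))
    return path

path = crosswind_cast(source=(0.2, 2.0))
print(f"{len(path) - 1} moves, ended at x={path[-1][0]:.2f} m, y={path[-1][1]:.2f} m")
```

Each surge only happens on a fresh detection, so the robot has to cast back out of the plume and re-find it before moving upwind again, mirroring the “surge, then resume casting” sequence described above.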

Since odors are airborne, they need a bit of a breeze to spread very far, and the Smellicopter won’t be able to detect them unless it’s downwind of the source. That’s just how odors work — even if you’re right next to the source, if the wind is blowing from you towards the source rather than the other way around, you might not catch a whiff of it.

The IceBot Cometh

No matter how brilliant the folks at NASA and JPL are, inevitably, their robots break down. It’s rare that these breakdowns are especially complicated, but since the robots aren’t designed to be repaired, there isn’t much that can be done. And even if the Mars rovers could swap out their own wheels when they wore out, where would they get new robot wheels on Mars, anyway?

This is actually the bigger problem: finding the necessary resources to keep robots running in extreme environments. We’ve managed to solve the power problem pretty well, often leveraging solar power since it’s a resource that you can find almost anywhere. You can’t make wheels out of solar power, but you can make wheels (and other structural components) out of another material that can be found just lying around all over the place: ice.

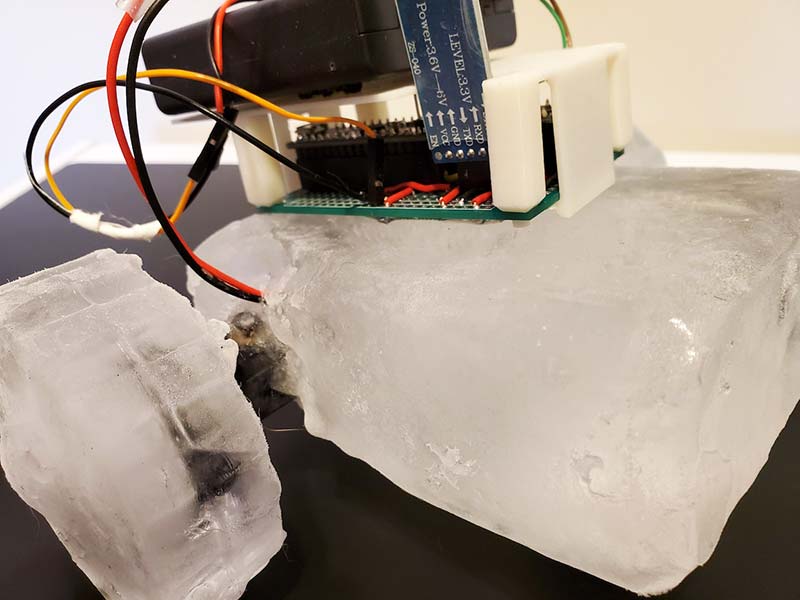

In a paper presented at the recent IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Devin Carroll and Mark Yim from the GRASP Lab at the University of Pennsylvania in Philadelphia stress that this is very preliminary work. They say they’ve only just started exploring the idea of a robot made of ice. Obviously, you’re not going to be able to make actuators or batteries or other electronic thingies out of ice, and ice is never going to be as efficient a structural material as titanium or carbon fiber or whatever. However, ice can be found in a lot of different places, and it’s fairly unique in how it can be modified. For example, heat can be used to cut and sculpt it, and also to glue it to itself.

The IROS paper takes a look at different ways of manufacturing robotic structural components from ice using both additive and subtractive manufacturing processes, with the goal of developing a concept for robots that can exhibit “self-reconfiguration, self-replication, and self-repair.” The assumption is that the robot would be operating in an environment with ice all over the place, where the ambient temperature is cold enough that the ice remains stable, and ideally also cold enough that the heat generated by the robot won’t lead to an inconvenient amount of self-melting or an even more inconvenient amount of self-shorting.

Between molding, 3D printing, and CNC machining, it turns out that simply cutting up the ice with a drill is the most energy-efficient and effective method. Ideally, though, you’d want a way to manage the resulting waste water and ice shavings so that they don’t refreeze somewhere you don’t want them to. Of course, sometimes refreezing is exactly what you want, since that’s how you do things like place actuators and attach one part to another.

IceBot is a proof-of-concept Antarctic exploration robot that weighs 6.3 kg. It was made by hand, and the researchers mostly just showed that it could move around and not immediately fall to pieces even at room temperature. There’s a lot to do before IceBot can realize some of those self-reconfiguration, self-replication, and self-repair capabilities, but the researchers are on it.

IceBot is a proof-of-concept robot with structural parts made of ice. Photo courtesy of GRASP Lab.

Pooch Parlance

Have you ever wondered what your pet is trying to tell you? Well, a collar that debuted at the recent CES 2021 may hold the answer.

Petpuls’ AI-powered smart collar uses voice recognition technology to detect and track five different emotional states. It analyzes the tone and pitch of your dog’s bark to tell you whether your pup feels happy, anxious, angry, sad, or relaxed.
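Petpuls hasn’t published how its analysis works, but pitch itself is a well-understood measurement. The Python below sketches one generic technique, autocorrelation pitch estimation, purely for illustration: it finds the delay at which a sound best lines up with a shifted copy of itself. The frequency search range and the synthetic 440 Hz test tone are assumptions, not anything from Petpuls.

```python
import math

def estimate_pitch_hz(samples, sample_rate, min_hz=100.0, max_hz=2000.0):
    """Estimate the fundamental frequency of a sound by autocorrelation.

    Generic technique for illustration only; not Petpuls' published method.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    min_lag = max(1, int(sample_rate / max_hz))
    max_lag = min(int(sample_rate / min_hz), n - 1)
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # How well the signal lines up with a copy of itself shifted by `lag` samples.
        r = sum(x[i] * x[i + lag] for i in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag

rate = 8000
tone = [math.sin(2 * math.pi * 440 * t / rate) for t in range(800)]  # 0.1 s test tone
estimate = estimate_pitch_hz(tone, rate)
print(f"{estimate:.1f} Hz")
```

Because lags are whole samples, the estimate is only approximate (here it lands a few hertz from 440 Hz); a real system would interpolate between lags and would also need features beyond pitch to tell emotional states apart.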

The analysis device attaches to the collar and pairs over Wi-Fi with an iOS or Android app to give you a readout of what your pet is “saying.” In addition to tracking mood, Petpuls includes an accelerometer to track your dog’s activity and help manage its diet. Think of it like a Fitbit for your dog. The range is only around 15 feet, but your phone will pair again whenever you come back in range.

The AI collar also measures rest. Dogs sleep an average of 14 hours per day and bark for less than one hour per day in total. (I think I know dogs that bark way more than that!) Sleep is a critical part of your dog’s health, so measuring how much rest your pup gets, in addition to the tone of its voice, will help you better care for your four-legged friend.

The artificial intelligence technology uses an algorithm to determine mood, along with a database of more than 10,000 bark samples from over 50 breeds of dogs. As your pup’s voice data accumulates, Petpuls becomes more accurate.

Research and testing by Seoul National University put Petpuls’ emotion-recognition accuracy at more than 80%.

Petpuls is IP54 water-resistant, so you’ll need to take it off when bathing your pet, but a little rain won’t hurt it. It can operate for 8 to 10 hours on a single charge, so it’s a good idea to recharge it nightly.

Small collars are available for $99, with additional straps available for $20. Large collars are available for $108, with additional straps available for $25. Petpuls comes in five colors: orange, blue, green, hot pink, and turquoise. You can purchase the device through Petpuls’ website at https://www.petpuls.net.

Prometheus Drone Folds It Up

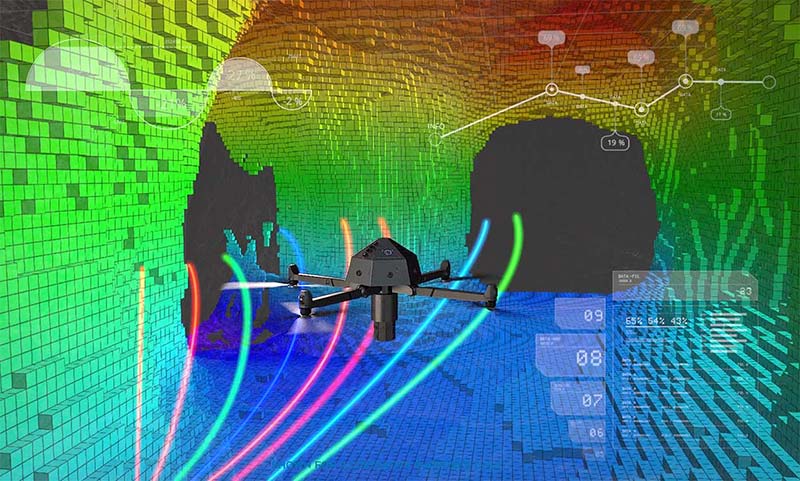

Inspecting old mines is a dangerous business. For humans, mines can be lethal. There can be falling rocks and/or noxious gases. Robots can go where humans might suffocate, but even robots can only do so much when mines are inaccessible from the surface.

Now, researchers in the UK, led by Headlight AI, have developed a drone that could cast a light in the darkness.

Named Prometheus, this drone can enter a mine through a borehole not much larger than a football before unfurling its arms and flying around the void. Once down there, it can use its payload of scanning equipment to map mines where neither humans nor robots can presently go.

The researchers hope that this could make mine inspection quicker and easier. The team behind Prometheus published its design in a recent issue of Robotics.

It’s that ability to fold and enter a borehole that makes Prometheus remarkable, according to Jason Gross, a professor of mechanical and aerospace engineering at West Virginia University. Gross calls Prometheus “an exciting idea,” but he does note that it has a relatively short flight window and few abilities beyond scanning.

Conceptual illustration of the Prometheus drone mapping a mine. Illustration courtesy of Headlight AI.

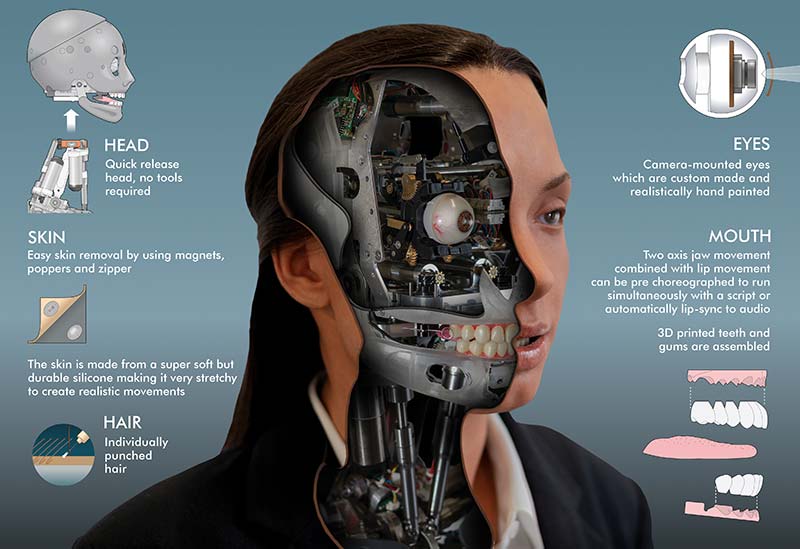

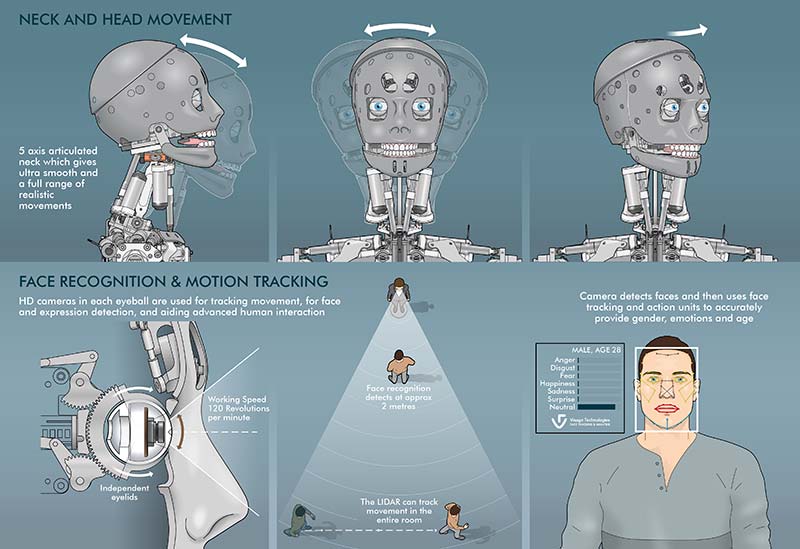

Mesmer-ized

Mesmer is a system designed by Engineered Arts (https://www.engineeredarts.co.uk) for building lifelike humanoid robots. It includes all the parts that are needed to breathe life into a character.

- Hardware – Motors, electronics and connectors.

- Sensors – Cameras, depth sensors, LiDAR, and microphones.

- Firmware – Motor control for speed, position, and torque.

- Software – For control of animation, interaction, audio, and lighting.

All the components were designed from scratch by Engineered Arts specifically for humanoid robots, so everything fits and works together in perfect harmony.

Check out these graphics that show how it works.

Engineered Arts Ltd was founded in October 2004 by Will Jackson. The company now concentrates entirely on development and sales of an ever-expanding range of humanoid and semi-humanoid robots featuring natural human-like movement and advanced social behaviours.

Engineered Arts’ humanoid robots are used worldwide for social interaction, communication, and entertainment at public exhibitions and attractions, as well as university research labs.

Under Pressure

Engineers at the University of California San Diego have created a four-legged soft robot that doesn’t need any electronics to work. The quadruped only needs a constant source of pressurized air for all its functions, including its controls and locomotion systems.

The team, led by Michael T. Tolley, a professor of mechanical engineering at the Jacobs School of Engineering at UC San Diego, detailed its findings recently in Science Robotics.

“This work represents a fundamental yet significant step towards fully-autonomous, electronics-free walking robots,” said Dylan Drotman, a Ph.D. student in Tolley’s research group and the paper’s first author.

Applications include low-cost entertainment robots such as toys, as well as robots that can operate in environments where electronics cannot function, such as MRI machines or mine shafts. Soft robots are of particular interest because they easily adapt to their environment and operate safely near humans.

Most soft robots are powered by pressurized air and controlled by electronic circuits. However, this approach requires complex components like circuit boards, valves, and pumps, often outside the robot’s body. These components, which constitute a quadruped’s brain and nervous system, are typically bulky and expensive. By contrast, the UC San Diego robot is controlled by a lightweight, low-cost system of pneumatic circuits made up of tubes and soft valves onboard the robot itself. The robot can walk on command or in response to signals it senses from the environment.

This quadruped relies on a series of valves that open and close in a specific sequence to walk.

“With our approach, you could make a very complex robotic brain,” said Tolley, the study’s senior author. “Our focus here was to make the simplest air-powered nervous system needed to control walking.”

The quadruped’s computational power roughly mimics mammalian reflexes that are driven by a neural response from the spine rather than the brain. The team was inspired by neural circuits found in animals called central pattern generators that are made of very simple elements that can generate rhythmic patterns to control motions like walking and running.

To mimic the generator’s functions, the engineers built a system of valves that act as oscillators, controlling the order in which pressurized air enters air-powered muscles in the robot’s four limbs. Researchers built an innovative component that coordinates the robot’s gait by delaying the injection of air into the robot’s legs. The robot’s gait was inspired by sideneck turtles.

The quadruped is also equipped with simple mechanical sensors: little soft bubbles filled with fluid placed at the end of booms protruding from the robot’s body. When the bubbles are depressed, the fluid flips a valve in the robot that causes it to reverse direction.

The quadruped is equipped with three valves acting as inverters that cause a high-pressure state to propagate around the air-powered circuit, with a delay at each inverter.
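A loop of inverters with a delay at each stage is a ring oscillator, the same trick used in digital electronics to make a clock. The Python below is a discrete-time sketch of that principle, not a model of the UCSD valves: it assumes each valve simply outputs the opposite of its input, and it represents the per-valve delay by letting one valve respond per tick, in ring order. Pressure states are reduced to True (pressurized) and False (vented).

```python
def ring_oscillator(n_stages=3, n_ticks=12):
    """Simulate a ring of inverter valves and return the state after each tick.

    True means a stage's output line is pressurized. Each tick, the next valve
    in the ring responds to its input, which stands in for the per-valve delay.
    """
    state = [False] * n_stages
    history = []
    for tick in range(n_ticks):
        i = tick % n_stages
        # Inverter: pressurized output when its input (the previous stage) is vented.
        # state[i - 1] wraps to the last stage when i == 0, closing the ring.
        state[i] = not state[i - 1]
        history.append(tuple(state))
    return history

for tick, s in enumerate(ring_oscillator()):
    print(tick, "".join("H" if p else "L" for p in s))
```

After the first couple of ticks the three outputs settle into a repeating six-tick cycle in which each one is high for half the cycle, offset from its neighbors: a rhythmic, phase-shifted pattern of the kind a central pattern generator provides. With an even number of inverters the ring simply latches into a fixed state, which is why an odd count is needed.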

Each of the robot’s four legs has three degrees of freedom powered by three muscles. The legs are angled downward at 45 degrees and composed of three parallel connected pneumatic cylindrical chambers with bellows. When a chamber is pressurized, the limb bends in the opposite direction. As a result, the three chambers of each limb provide multi-axis bending required for walking. Researchers paired chambers from each leg diagonally across from one another, simplifying the control problem.

The legs are angled down 45 degrees and composed of three parallel, connected pneumatic cylindrical chambers with bellows. Photos courtesy of UCSD.

A soft valve switches the direction of rotation of the limbs between counterclockwise and clockwise. That valve acts as what’s known as a latching double-pole, double-throw switch: a switch with two inputs and four outputs, in which each input has two corresponding outputs and is connected to one of them at a time. That mechanism is a little like taking two nerves and swapping their connections in the brain.
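The swap can be made concrete with a small logical model. The Python below is a hypothetical sketch: it treats the valve as a latching double-pole, double-throw switch and assumes a cross-wiring in which each limb chamber is fed by one output from each pole, so flipping the latch exchanges which oscillator phase reaches which chamber. The class, the wiring, and the use of `max` to represent two air lines joining are illustrative assumptions, not the published circuit.

```python
class LatchingDPDT:
    """Latching double-pole, double-throw switch: 2 inputs, 4 outputs.

    Each input (pole) is connected to one of its two outputs (throws) at a time,
    and the switch stays in that position until it is toggled.
    """

    def __init__(self):
        self.crossed = False

    def toggle(self):
        self.crossed = not self.crossed

    def route(self, input_a, input_b):
        """Return pressures on the four outputs (a1, a2, b1, b2)."""
        if self.crossed:
            return (0.0, input_a, 0.0, input_b)
        return (input_a, 0.0, input_b, 0.0)

def chamber_pressures(switch, phase_a, phase_b):
    """Hypothetical wiring: a1 and b2 feed chamber X, a2 and b1 feed chamber Y."""
    a1, a2, b1, b2 = switch.route(phase_a, phase_b)
    return max(a1, b2), max(a2, b1)

switch = LatchingDPDT()
print(chamber_pressures(switch, 1.0, 0.0))  # (1.0, 0.0)
switch.toggle()  # e.g. a sensor bubble on a boom is pressed
print(chamber_pressures(switch, 1.0, 0.0))  # (0.0, 1.0)
```

Because the switch latches, a single brief bump is enough to reverse the gait, and the robot keeps walking in the new direction until the next bump flips it back.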
