Servo Magazine (2019, Issue 3)

bots IN BRIEF (03.2019)

Whisk(er)ed Away

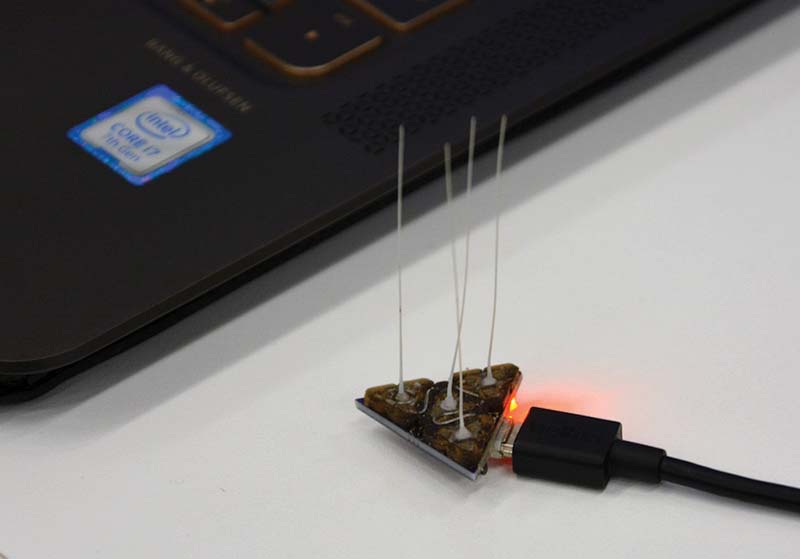

At the recent ICRA, Pauline Pounds of the University of Queensland in Brisbane, Australia, demonstrated a new whisker sensing system for drones. The whiskers are tiny, cheap, and sensitive enough to detect the air pressure from nearby objects even before making physical contact.

Here’s how the researchers describe the system:

We are interested in translating the proven sensor utility of whiskers on ground platforms to hovering robots and drones — whiskers that can sense low-force contact with the environment such that the robot can maneuver to avoid more dangerous high-force interactions. We are motivated by the task of navigating through dark, dusty, smoky, cramped spaces, or gusty, turbulent environments with micro-scale aircraft that cannot mount heavier sensors such as LIDARs.

The whisker fibers themselves are easy to fabricate; they’re just blobs of ABS plastic that are heated up and then drawn out into long thin fibers like taffy. The length and thickness of the whiskers can be modulated by adjusting the temperature and draw speed. The ABS blob at the base of each whisker is glued to a 3D-printed load plate which is, in turn, attached to a triangular arrangement of force pads (actually encapsulated MEMS barometers). The force pads can be fabricated in bulk, so it’s straightforward to make a whole bunch of whiskers at one time through a process that’s easy to automate. The materials cost of a four-whisker array is about $20, and the weight is just over 1.5 grams.
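A triangle of three pads is useful because it lets a single whisker report both how hard it is being pushed and in which direction. The sketch below is a minimal illustration, assuming (hypothetically) that the pads sit at 0°, 120°, and 240° around the whisker base and that each reading is a baseline-subtracted pressure change. The function name and geometry are illustrative, not the researchers' published calibration.

```python
import math

# Hypothetical pad layout: three pads evenly spaced around the whisker base.
PAD_ANGLES_DEG = (0.0, 120.0, 240.0)

def estimate_deflection(readings):
    """Estimate (axial_load, lateral_magnitude, direction_deg) from three pad readings.

    readings: baseline-subtracted pad signals, one per pad, in arbitrary units.
    """
    if len(readings) != len(PAD_ANGLES_DEG):
        raise ValueError("expected one reading per pad")
    # A push straight along the whisker loads all three pads roughly equally.
    axial = sum(readings)
    # A sideways bend tilts the load plate so some pads read higher than others;
    # summing each reading as a vector along its pad's direction recovers that tilt.
    x = sum(r * math.cos(math.radians(a)) for r, a in zip(readings, PAD_ANGLES_DEG))
    y = sum(r * math.sin(math.radians(a)) for r, a in zip(readings, PAD_ANGLES_DEG))
    lateral = math.hypot(x, y)
    # Direction points toward the most heavily loaded side, in degrees [0, 360).
    direction = math.degrees(math.atan2(y, x)) % 360.0
    return axial, lateral, direction
```

For example, `estimate_deflection([0.2, 0.9, 0.1])` reports a tilt pointing roughly toward the second pad. Equal readings on all three pads give zero lateral tilt, which is why a triangle (rather than a single sensor) is enough to separate direct pushes from sideways brushes.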

While the focus of this research has been on whiskers for microdrone applications, these could be fantastic sensors for a wide variety of robots, especially cheap ones. Any small robot operating in the kinds of environments described above could use them to avoid collisions in conditions where far more expensive cameras and LIDAR systems would struggle.

For now, the University of Queensland researchers are focused on aerial applications, with the next steps being to mount whisker arrays on real drones to see how they perform.

Researchers at the University of Queensland in Brisbane, Australia have demonstrated a new whisker sensing system for drones. Image courtesy of University of Queensland.

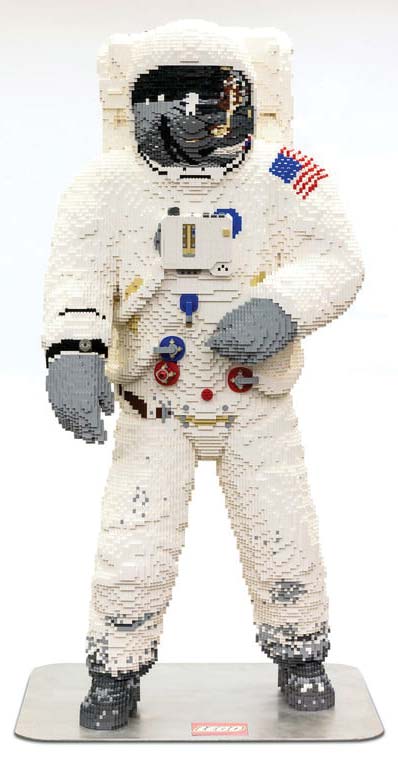

One Big Step for LEGO

In celebration of the 50th anniversary of the moon landing, LEGO unveiled a life-sized model of an astronaut, constructed entirely from LEGO bricks.

The model of an Apollo 11 astronaut is based on the suit Neil Armstrong wore when he took his historic small step onto the moon. The model stands six feet, three inches tall and boasts some incredible details, like the lunar landscape you can see reflected in the helmet.

To see how this giant tribute was assembled, there’s a time-lapse video at https://www.digitaltrends.com/cool-tech/lego-life-sized-astronaut/?utm_source=sendgrid&utm_medium=email&utm_campaign=daily-brief showing the process of building a LEGO figure on this scale. It took 30,000 bricks and a team of 10 people working a combined total of nearly 300 hours on design and construction.

Cassie Can

At UC Berkeley’s Hybrid Robotics Lab, led by Koushil Sreenath, researchers are teaching their Cassie bipedal robot (called Cassie Cal) to wheel around on a pair of hovershoes.

Hovershoes are like hoverboards that have been chopped in half, resulting in a pair of motorized single-wheel skates. You balance on the skates and steer them by leaning forward, backward, left, or right, which causes each skate to accelerate or decelerate in an attempt to keep itself upright.
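Self-balancing of this kind is usually a feedback loop on the skate's pitch angle. Here is a minimal sketch, assuming a simple proportional-derivative (PD) controller with made-up gains; neither the hovershoe firmware nor the Berkeley team's controller is described here, so every name and number below is hypothetical. Each skate drives its wheel in the direction it is tipping so the wheel moves back under the load.

```python
from dataclasses import dataclass

@dataclass
class SkateBalancer:
    """Hypothetical PD balance loop for one self-balancing skate (illustrative gains)."""
    kp: float = 40.0        # gain on pitch angle: (m/s^2) per rad
    kd: float = 4.0         # gain on pitch rate: (m/s^2) per (rad/s)
    max_accel: float = 3.0  # wheel acceleration limit, m/s^2

    def wheel_accel(self, pitch_rad: float, pitch_rate_rad_s: float) -> float:
        """Return the commanded wheel acceleration for the current tilt."""
        # Positive pitch means the skate is tipping forward, so drive the wheel
        # forward to get it back under the load; the rate term damps oscillation.
        cmd = self.kp * pitch_rad + self.kd * pitch_rate_rad_s
        # Saturate to what the motor can plausibly deliver.
        return max(-self.max_accel, min(self.max_accel, cmd))

skate = SkateBalancer()
print(skate.wheel_accel(0.05, 0.0))  # leaning forward, so the command is positive
```

The upshot is that leaning is how a rider (human or Cassie) asks for speed: holding a forward lean keeps the skate accelerating forward to stay under its load.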

It’s not easy to get these things to work (even for humans), but by adding a sensor package to Cassie, the UC Berkeley researchers have managed to get it to zip around campus fully autonomously.

Cassie Cal riding a pair of hovershoes around the UC Berkeley campus. Photo courtesy of UC Berkeley.

Sunshine State Goes Autonomous

Florida Governor Ron DeSantis has removed many of the obstacles that previously stood in the way of companies hoping to test autonomous vehicles on the state’s roads. The Republican politician signed a law that establishes a clear legal framework for self-driving cars to operate within the state, including prototypes navigating on their own without a human operator behind the wheel.

Since July 1, 2019, automakers and tech companies have been allowed to test experimental autonomous cars on Florida roads with no one behind the wheel or even inside the car. The only catch is that the prototypes must comply with basic safety and insurance requirements outlined in the law.

When a human operator is behind the wheel, the autonomous prototype must be able to emit visual and audible alerts if it detects that one of its core systems has failed. When a problem occurs while it’s out on its own, it needs to be able to safely bring itself to a full stop. That means slowly pulling over and activating its hazard lights, not slamming on its own brakes in the middle of a busy intersection.

Significantly, the law clearly notes the autonomous driving system is considered the car’s operator when it’s engaged, even if there are passengers riding in the cabin. This clause implies the company that built the prototype is responsible in the event of an accident, and it forces engineers to ensure the technology they develop is safe and reliable.

Motorists traveling inside an autonomous vehicle are exempt from Florida’s ban on using wireless communication devices while behind the wheel of a moving car. Additionally, the law specifies that the ban on watching a television show, a movie, or any type of moving broadcast on the state’s highways doesn’t apply to passengers of autonomous cars. (Yep. Go ahead and fire up Netflix.)

These exemptions will help car and tech companies keep the promises they routinely make as they race to release autonomous cars to the general public. From Audi to Waymo, the players in this high-stakes game pledge that drivers who agree to become passengers will have more time to work, read, or catch up on their favorite television shows during their commute. Plus, since a human is not legally required to monitor the road ahead, you may pass someone napping behind the wheel the next time you visit the Sunshine State.

The law makes Florida “the most autonomous vehicle-friendly state in the country,” according to DeSantis.

Do the Shuffle

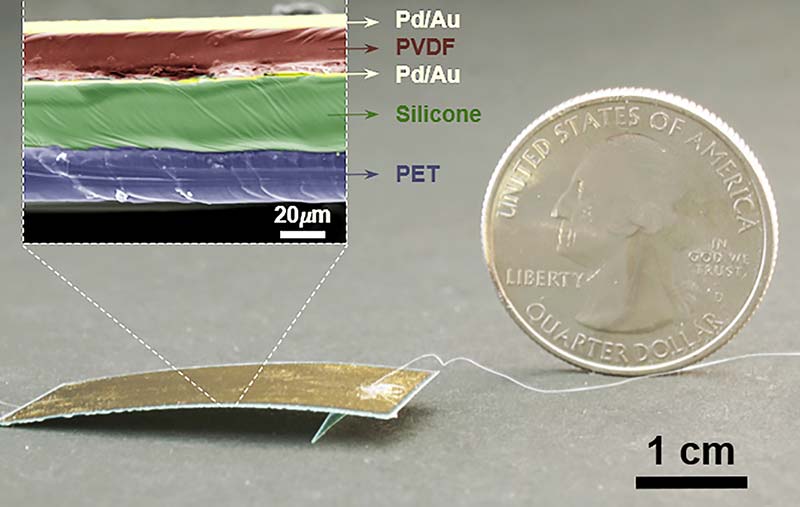

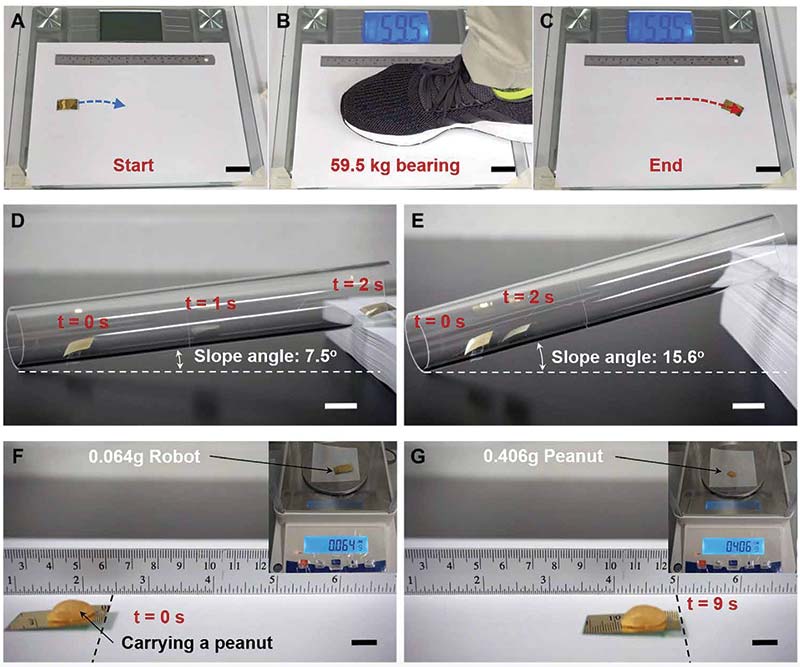

In a recent issue of Science Robotics, a group of researchers from Tsinghua University in China and the University of California, Berkeley presented a new kind of soft robot that’s both high performance and very robust. The deceptively simple robot looks like a bent strip of paper, but it’s able to move at 20 body lengths per second and survive being stomped on by a human wearing tennis shoes. Take that, cockroaches!

This little robot looks like a bent strip of paper, but it’s able to move at 20 body lengths per second and survive being stomped on.

This prototype robot measures just 3 cm x 1.5 cm. It takes a scanning electron microscope to see how it’s constructed: a thermoplastic layer sandwiched between palladium-gold electrodes, bonded with adhesive silicone to a structural plastic layer at the bottom.

Photos courtesy of Science Robotics.

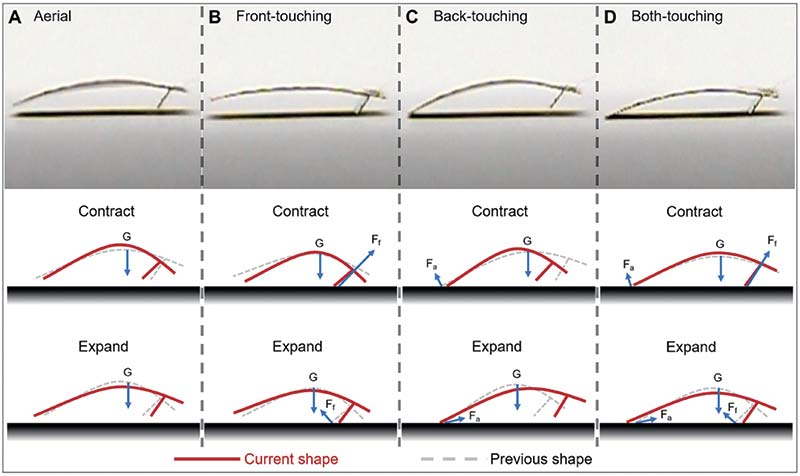

When an AC voltage (as low as eight volts, but typically about 60 volts) is applied across the electrodes, the thermoplastic extends and contracts, causing the robot’s back to flex and the little “foot” to shuffle. A complete step cycle takes just 5 milliseconds, yielding a 200 Hz gait. Technically, the robot “runs,” since it does have a brief aerial phase.
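Gait frequency and step-cycle period are reciprocals (f = 1/T), so a 200 Hz gait means one cycle every 5 ms. The sketch below also combines the quoted 3 cm body length and 20 body lengths per second to estimate how far the robot advances per cycle. That per-cycle distance is derived arithmetic, not a figure reported by the researchers, and it assumes the top speed is reached at the 200 Hz gait.

```python
def gait_frequency_hz(cycle_period_s: float) -> float:
    """Gait frequency is the reciprocal of the step-cycle period."""
    return 1.0 / cycle_period_s

def advance_per_cycle_m(body_length_m: float, body_lengths_per_s: float,
                        gait_hz: float) -> float:
    """Distance covered per step cycle, assuming a steady speed at this gait frequency."""
    speed_m_s = body_length_m * body_lengths_per_s
    return speed_m_s / gait_hz

cycle_s = 0.005                                    # 5 ms step cycle
freq_hz = gait_frequency_hz(cycle_s)               # -> 200 Hz
step_m = advance_per_cycle_m(0.03, 20.0, freq_hz)  # 0.6 m/s spread over 200 cycles/s
print(f"{freq_hz:.0f} Hz gait, {step_m * 1000:.1f} mm per cycle")
# prints: 200 Hz gait, 3.0 mm per cycle
```

A few millimeters per cycle is about a tenth of the body length, which is what you would expect from a tiny, fast shuffle rather than long strides.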

Photos from a high-speed camera show the robot’s gait (A to D) as it contracts and expands its body.

Some other cool things about it:

- You can step on it (squishing it flat with a load about one million times its own body weight) and it’ll keep on crawling, albeit only half as fast.

- Even climbing a slope of 15 degrees, it can still manage to move at one body length per second.

- It carries peanuts! With a payload of six times its own weight, it moves a sixth as fast, but still, that’s not a bad delivery time.

The researchers also put together a prototype with two legs instead of one, which was able to demonstrate a potentially faster galloping gait by spending more time in the air.

They suggest that robots like these could be used for “environmental exploration, structural inspection, information reconnaissance, and disaster relief.”

I Fold

At ICRA in Montreal earlier this year, researchers from UC Berkeley demonstrated a new design for a foldable drone that’s able to shrink itself by 50 percent in less than half a second, thanks to spring-loaded arms controlled by the power of the drone’s own propellers.

The trick here is that the springs are exerting constant tension on the passively hinged arms of the quadrotor. It’s enough tension to snap the arms inwards when the motors are off, but when the motors are on, the force they exert is stronger than the tension exerted by the springs, snapping the arms out again and keeping them there.

The actual transition point (where the force exerted by the motors overcomes the tension on the springs, or vice versa) has been carefully calibrated to make sure that the quadrotor stays a quadrotor most of the time, and only folds up when you want it to.
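That calibration boils down to a torque balance at each hinge. Here is a minimal sketch, assuming a single hinge where propeller thrust acts at a fixed lever arm and the spring supplies a constant restoring torque; all names and numbers are hypothetical, not the Berkeley team's values. The arm stays out while the thrust torque exceeds the spring torque.

```python
def is_unfolded(thrust_n: float, lever_arm_m: float, spring_torque_nm: float) -> bool:
    """True if the thrust torque about the hinge overcomes the spring torque."""
    return thrust_n * lever_arm_m > spring_torque_nm

def fold_threshold_thrust_n(lever_arm_m: float, spring_torque_nm: float) -> float:
    """Per-motor thrust at which the arm is on the verge of folding or unfolding."""
    return spring_torque_nm / lever_arm_m

# Hypothetical numbers: a 0.05 m lever arm against a 0.02 N*m spring -> 0.4 N threshold.
threshold_n = fold_threshold_thrust_n(0.05, 0.02)
print(f"arm folds below about {threshold_n:.2f} N of thrust per motor")
```

In a design like this, the threshold has to sit comfortably below the thrust each motor needs for normal flight, so the vehicle only folds when thrust is deliberately cut.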

The big advantage of this design is that it adds a relatively small amount of complexity while still enabling dynamic folding that significantly reduces the size of the quadrotor. In its unfolded state, it’s just as easy to control as a quadrotor that can’t fold.

The researchers also say that they could potentially get the quadrotor to fold up into an even more compact configuration. The constraint at the moment is that if it gets any smaller, the blades start to intersect. However, because each propeller counter-rotates relative to its two neighbors, if you keep them spinning at the same rate “the speed of the blades relative to each other would be small and any collisions between blades would be minor.”
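The quoted point about minor collisions follows from basic rigid-body kinematics. The sketch below uses a simplified 2D model, assuming two rigid rotors spinning at equal speed (it is not the researchers' own analysis). It computes each blade's velocity, ω × r, at the point midway between the two hubs. Counter-rotating neighbors have identical velocities there, so their relative speed is zero; co-rotating neighbors would meet at ω·d.

```python
def blade_velocity(hub, point, omega):
    """Velocity of a rigid rotor at `point` (2D), with signed angular speed omega (rad/s).

    omega > 0 is counterclockwise. For rotation about the z-axis,
    v = omega * z_hat x r = (-omega * r_y, omega * r_x).
    """
    rx, ry = point[0] - hub[0], point[1] - hub[1]
    return (-omega * ry, omega * rx)

def relative_speed_at_midpoint(hub_spacing_m, omega_a, omega_b):
    """Speed of blade A relative to blade B at the point midway between the hubs."""
    hub_a, hub_b = (0.0, 0.0), (hub_spacing_m, 0.0)
    mid = (hub_spacing_m / 2.0, 0.0)
    va = blade_velocity(hub_a, mid, omega_a)
    vb = blade_velocity(hub_b, mid, omega_b)
    return ((va[0] - vb[0]) ** 2 + (va[1] - vb[1]) ** 2) ** 0.5

w = 1000.0  # illustrative rotor speed, rad/s
d = 0.1     # illustrative hub spacing, m
print(relative_speed_at_midpoint(d, w, -w))  # counter-rotating neighbors: 0.0
print(relative_speed_at_midpoint(d, w, w))   # co-rotating, for comparison: 100.0
```

Away from the midpoint, the counter-rotating relative speed grows as 2ω times the distance from it, while co-rotating rotors collide at ω·d everywhere in the overlap. That is why the overlap only stays gentle if the neighbors spin at the same rate.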

The UC Berkeley morphing drone in the unfolded (top) and folded (bottom) configurations. The drone changes shape without the use of any additional actuators. When low thrust forces are produced by the propellers, springs pull the arms downward into the folded mode. When high thrust forces are produced, the vehicle transitions into the unfolded mode. Photo courtesy of High Performance Robotics Lab/UC Berkeley.

Bite on This

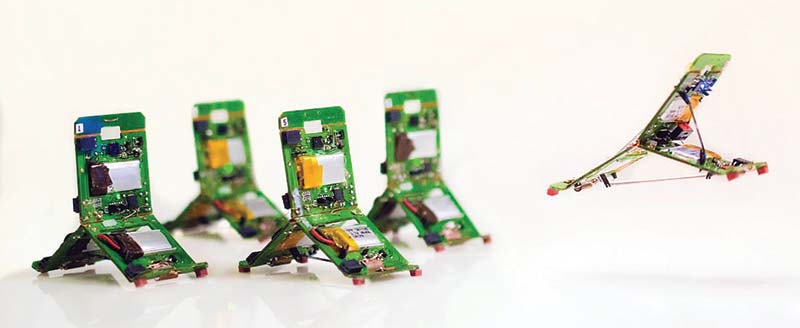

So, how do you make a swarm of inexpensive small robots with insect-like mobility that don’t need motors to get around? Well, Jamie Paik’s Reconfigurable Robotics Lab at EPFL has an answer: Tribots that are inspired by trap-jaw ants.

The thing about trap-jaw ants is that they can fire themselves into the air by biting the ground. In just 0.06 milliseconds, their half-millimeter-long mandibles can close at a top speed of 64 meters per second, which works out to an acceleration of about 100,000 g. Biting the ground causes the ant’s head to snap back with a force of 300 times its body weight, which launches the ant upward. These ants can launch themselves eight centimeters vertically and up to 15 cm horizontally. That’s a lot for something that’s just a few millimeters long.
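The 100,000 g figure holds up as a back-of-the-envelope estimate: divide the top mandible speed by the closing time to get the average acceleration. The sketch assumes, as a simplification, that the jaw starts from rest and accelerates over the full 0.06 ms, and it uses standard gravity (9.80665 m/s²). The result is roughly 109,000 g.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2

def average_acceleration_g(top_speed_m_s: float, duration_s: float) -> float:
    """Average acceleration in multiples of g, assuming the motion starts from rest."""
    return (top_speed_m_s / duration_s) / STANDARD_GRAVITY

a_g = average_acceleration_g(64.0, 0.06e-3)  # 64 m/s reached in 0.06 ms
print(f"about {a_g:,.0f} g")
```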

EPFL’s jumping robots, called Tribots, can be built on a flat sheet and then folded into a tripod shape, sort of origami-style. Image courtesy of Harpreet Sareen/Elbert Tiao.

EPFL’s Tribots look nothing at all like trap-jaw ants. They’re about 5 cm tall, weigh 10 grams each, and can be built on a flat sheet and then folded into a tripod shape. The Tribots are fully autonomous, meaning they have onboard power and control, including proximity sensors that allow them to detect objects and avoid them. Avoiding objects is where the trap-jaw ants come in.

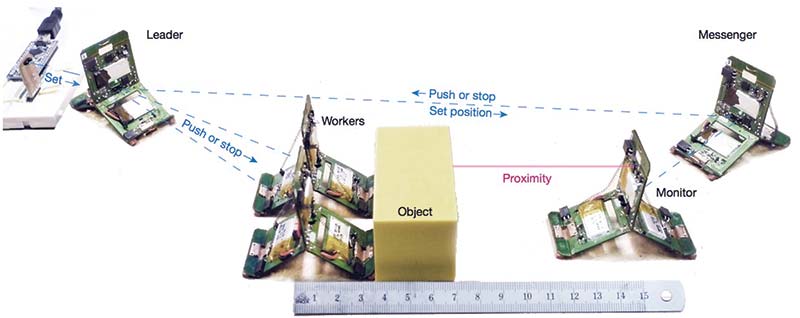

Five Tribots collaborate to move an object to a desired position, using coordination between a leader, two workers, a monitor, and a messenger robot. The leader orders the two worker robots to push the object, while the monitor measures the relative position of the object. As the object blocks the two-way link between the leader and the monitor, the messenger maintains the communication link. Image courtesy of EPFL.

Using two different shape-memory actuators (a spring and a latch, similar to how the ant’s jaw works), the tiny bots can switch between several locomotion modes to suit the terrain they’re on, including:

- Vertical jumping for height

- Horizontal jumping for distance

- Somersault jumping to clear obstacles

- Walking on textured terrain with short hops (called “flic-flac” walking)

- Crawling on flat surfaces

Tribot’s maximum vertical jump is 14 cm (2.5 times its height), and horizontally it can jump about 23 cm (almost four times its length). Tribot is very efficient in these movements, with a cost of transport much lower than similarly sized robots; in fact, it’s on par with insects themselves.
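Cost of transport (COT) is the usual dimensionless yardstick for comparisons like this one. It is the energy spent moving, divided by the robot's weight times the distance traveled. The text doesn't give Tribot's energy per jump, so the function below takes energy as an input rather than assuming a value.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2

def cost_of_transport(energy_j: float, mass_kg: float, distance_m: float) -> float:
    """Dimensionless cost of transport: E / (m * g * d).

    Lower is better. A value of 1 means the robot spends as much energy as it
    would take to lift its own weight through a height equal to the distance traveled.
    """
    if mass_kg <= 0 or distance_m <= 0:
        raise ValueError("mass and distance must be positive")
    return energy_j / (mass_kg * STANDARD_GRAVITY * distance_m)
```

For a 10 g Tribot covering 23 cm in one horizontal jump, you would call `cost_of_transport(E, 0.010, 0.23)` with `E` set to the measured energy drawn for that jump. Normalizing by weight and distance is what makes a 10 g robot comparable to an insect.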

Working together, small groups of Tribots can complete tasks that a single robot couldn’t do alone. One example is pushing a heavy object a set distance. It turns out that you need five Tribots for this task: a leader robot; two worker robots; a monitor robot to measure the distance that the object has been pushed; and then a messenger robot to relay communications around the obstacle.
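The division of labor above is essentially a small message-passing protocol. Here is a toy simulation of it, with hypothetical role labels, a made-up push increment, and an idealized relay that never drops messages. It only shows why the messenger is needed once the object blocks the direct leader-monitor link; it does not reproduce EPFL's controller.

```python
def push_to_target(target_mm: int, push_step_mm: int = 10, max_rounds: int = 1000):
    """Toy leader/worker/monitor/messenger loop.

    Returns (rounds, relay_log). All quantities are hypothetical.
    """
    relay_log = []  # messages the messenger forwarded: (sender, receiver, value)
    pushed_mm = 0
    for rounds in range(1, max_rounds + 1):
        # Leader -> two workers: push once (idealized fixed progress per round).
        pushed_mm += push_step_mm
        # Monitor measures progress; the object blocks the monitor-leader link,
        # so the messenger forwards the measurement.
        relay_log.append(("monitor", "leader", pushed_mm))
        # Leader stops the workers once the reported distance reaches the target.
        if pushed_mm >= target_mm:
            return rounds, relay_log
    raise RuntimeError("target not reached within max_rounds")

rounds, log = push_to_target(50)
print(rounds, log[-1])
```

Distances are kept as integer millimeters so the stopping test is exact rather than subject to floating-point drift.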

The researchers acknowledge that the current version of the hardware is pretty limited (mobility, sensing, and computation), but it does a reasonable job of demonstrating what’s possible with the concept.

EPFL researchers, Zhenishbek Zhakypov and Jamie Paik. Image courtesy of Marc Delachaux/EPFL.

The plan going forward is to automate fabrication in order to “enable on-demand ‘pushbutton-manufactured’” robots.

Going Out on a Limb

A group of researchers from Preferred Networks has experimented with building mobile robots out of a couple of generic servos plus tree branches. Yep. You read that right.

Researchers from Preferred Networks built robots out of unusual materials like tree branches and used deep reinforcement learning to develop locomotion algorithms for them. Image courtesy of Azumi Maekawa.

These robots figure out how to walk in simulation first, through deep reinforcement learning. The way this works is by picking up some sticks, weighing and 3D scanning them, simulating the entire robot, and then rewarding gaits that result in the farthest movement. There’s also some hand-tuning involved to avoid behaviors that might (for example) “cause stress and wear in the real robot.”
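A reward that favors distance while discouraging hard-on-the-hardware behavior might look something like the sketch below. The penalty terms (servo torque and joint speed) and their weights are hypothetical stand-ins for the hand-tuning the text mentions. Preferred Networks' actual reward function isn't given here.

```python
from typing import Sequence

def gait_reward(x_before_m: float, x_after_m: float,
                joint_torques: Sequence[float], joint_speeds: Sequence[float],
                torque_weight: float = 1e-3, speed_weight: float = 1e-4) -> float:
    """Per-step reward: forward progress minus penalties for wear-inducing motion."""
    progress = x_after_m - x_before_m
    # Penalize large torques and fast joint motion, which stress real servos.
    torque_penalty = torque_weight * sum(t * t for t in joint_torques)
    speed_penalty = speed_weight * sum(w * w for w in joint_speeds)
    return progress - torque_penalty - speed_penalty
```

Summed over an episode, the progress terms add up to the total distance traveled, so the learner is pushed toward the farthest-moving gaits unless it gets there by straining the servos.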

The robot is controlled by an Arduino Mega and powered by Kondo KRS-2572HV servo motors with a separate driver and power supply. Image courtesy of Preferred Networks.

There’s Power in the Blood

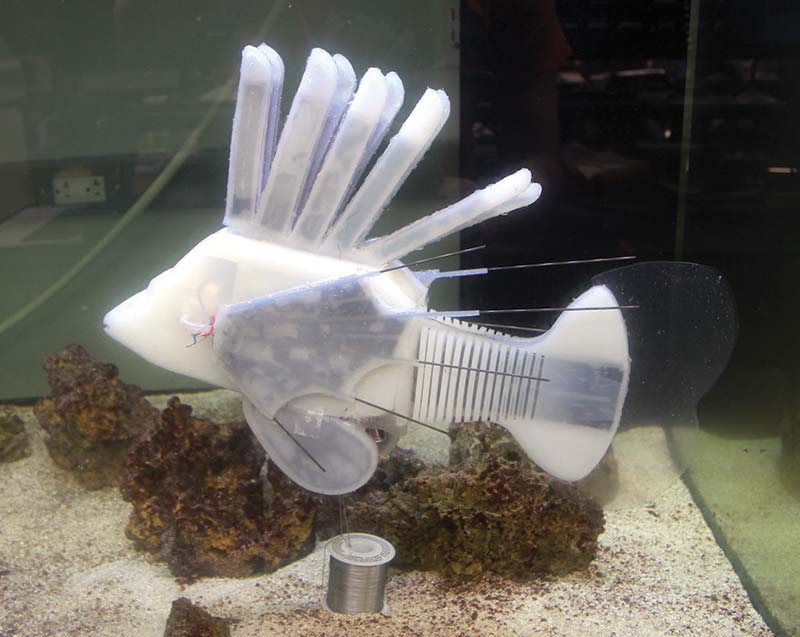

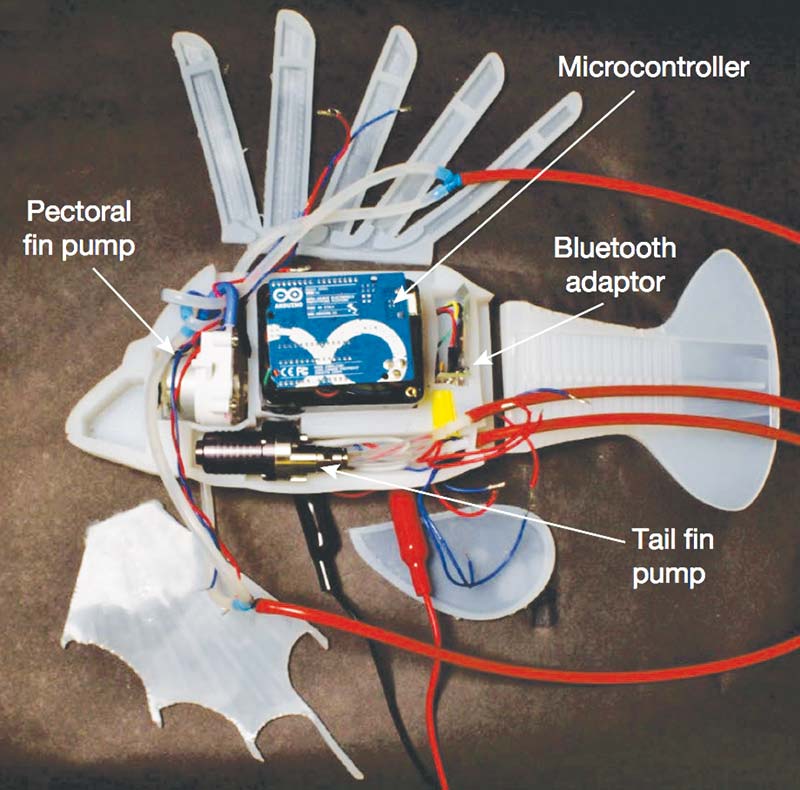

Researchers from Cornell and the University of Pennsylvania have come up with a robotic fish that uses synthetic blood pumped through an artificial circulatory system to provide both hydraulic power for muscles and a distributed source of electrical power. The system they designed “combines the functions of hydraulic force transmission, actuation, and energy storage into a single integrated design that geometrically increases the energy density of the robot to enable operation for long durations.”

This robotic fish uses synthetic blood pumped through an artificial circulatory system to provide both hydraulic power for muscles and a distributed source of electrical power. Photos courtesy of James Pikul.

This fish isn’t going to win any sprints, but it’s got impressive endurance, with a maximum theoretical operating time of over 36 hours while swimming at 1.5 body lengths per, uh, minute. The key to this is in the fish’s blood, which (in addition to providing hydraulic power to soft actuators) serves as one half of a redox flow battery. The blood is a liquid triiodide cathode, which circulates past zinc cells submerged in an electrolyte.
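For reference, standard zinc-iodide chemistry can be written as two half-reactions: zinc is oxidized at the anode and triiodide is reduced in the circulating catholyte. This is the textbook reaction pair for this kind of cell, not a detail taken from the paper.

```latex
\begin{aligned}
\text{Anode:}\quad   & \mathrm{Zn} \rightarrow \mathrm{Zn^{2+}} + 2e^- \\
\text{Cathode:}\quad & \mathrm{I_3^-} + 2e^- \rightarrow 3\,\mathrm{I^-} \\
\text{Overall:}\quad & \mathrm{Zn} + \mathrm{I_3^-} \rightarrow \mathrm{Zn^{2+}} + 3\,\mathrm{I^-}
\end{aligned}
```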

As the zinc oxidizes, it releases electrons which power the fish’s microcontroller and pumps. The theoretical energy density of this power system is 322 watt-hours per liter, or about half of the 676 watt-hours per liter that you’ll find in the kind of lithium-ion batteries that power a Tesla.
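The "about half" comparison checks out, and the same figures translate into the volume each chemistry needs per watt-hour. This is plain arithmetic on the two densities quoted above. The 322 Wh/L value is theoretical, so a real system would need more volume.

```python
FISH_WH_PER_L = 322.0    # theoretical density quoted for the fish's blood battery
LI_ION_WH_PER_L = 676.0  # figure quoted for Tesla-style lithium-ion cells

ratio = FISH_WH_PER_L / LI_ION_WH_PER_L
ml_per_wh_fish = 1000.0 / FISH_WH_PER_L   # milliliters needed to hold 1 Wh
ml_per_wh_li = 1000.0 / LI_ION_WH_PER_L
print(f"ratio {ratio:.2f}; {ml_per_wh_fish:.1f} mL/Wh vs {ml_per_wh_li:.1f} mL/Wh")
# prints: ratio 0.48; 3.1 mL/Wh vs 1.5 mL/Wh
```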

Conventional batteries may be more energy dense, but that Tesla also has to lug around motors and stuff if it wants to go anywhere. By using its blood to drive hydraulic actuators as well, this fish is far more efficient.

Inside the fish are two separate pumps; each one is able to pump blood from a reservoir of sorts into or out of an actuator. Pumping blood from the dorsal spines into the pectoral fins pushes the fins outward from the body, and pumping blood from one side of the tail to the other and back again results in a swimming motion.

The innards of the robot fish include two pumps, a molded silicone shell with fin actuators, a microcontroller, and a synthetic vascular system containing flexible electrodes and a cation-exchange membrane encased in a soft silicone skin.

In total, the fish contains about 0.2 liters of blood, distributed throughout an artificial vascular system that was designed at a very basic level to resemble the structure of a real heart. The rest of the fish is made of structural elements that are somewhat like muscle and cartilage.

It’s probably best to not draw too many parallels between this robot and an actual fish, but the point is that combining actuation, force transmission, and energy storage has significant advantages for this particular robot. The researchers say that plenty of optimization is possible as well, which would lead to benefits in both performance and efficiency.

Solar-Powered X-Wing

Researchers from Harvard’s Microrobotics Lab (led by Professor Robert J. Wood) have come up with a four-winged version of their RoboBee platform. This version is called RoboBee X-Wing, and it’s capable of untethered flight thanks to solar cells and a light source.

RoboBee X-Wing is five centimeters long and weighs 259 milligrams. At the top are solar cells, and at the bottom are all the drive electronics needed to boost the trickle of voltage coming out of the solar panels up to the 200 volts required to drive the actuators that flap the wings at 200 Hz.

The reason the robot’s bits and pieces are arranged this way is to keep the solar panels out of the airflow of the wings, while simultaneously keeping the overall center of mass of the robot where the wings are. The robot doesn’t have any autonomous control, but it’s stable enough for very short open loop flights lasting less than a second. The Harvard researchers say that the flight of their robot is “sustained” rather than just a “liftoff.”

The solar cells are there because the robot can’t lift the kind of battery it would need to power its wings, so off-board power is necessary. If you don’t want a tether (and seriously, who wants a tether!), that means some kind of wireless power. X-Wing gets its power from the sun. Well, three suns, actually.

Since one sun isn’t enough, the researchers emulate three of them with powerful lamps. This means that X-Wing isn’t yet practical for outdoor operation, although they say that a 25 percent larger version (which they’re working on next) should reduce the number of suns required to just 1.5.
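“One sun” is conventionally taken as 1,000 W/m², the standard test irradiance for solar cells. Using that convention (an assumption, since the lamps' actual output isn't stated), the current and projected requirements convert as follows.

```python
ONE_SUN_W_PER_M2 = 1000.0  # standard test-condition irradiance

def suns_to_irradiance(suns: float) -> float:
    """Convert a light level expressed in 'suns' to W/m^2."""
    return suns * ONE_SUN_W_PER_M2

for label, suns in (("current X-Wing", 3.0), ("planned 25% larger version", 1.5)):
    print(f"{label}: {suns_to_irradiance(suns):,.0f} W/m^2")
# prints:
# current X-Wing: 3,000 W/m^2
# planned 25% larger version: 1,500 W/m^2
```

Clear-sky sunlight at the ground tops out near one sun, which is why anything above that still means indoor lamps.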

In its current version, RoboBee X-Wing does have some mass budget left over for things like sensors, but it sounds like the researchers are primarily focused on getting that power requirement down to one sun or below. It’s going to take some design optimization and additional integration work before RoboBee X-Wing gets to the point where it’s flying truly autonomously, but so far, there’s a substantial amount of progress towards that goal.

The new RoboBee X-Wing features solar cells, an extra pair of wings, and improved actuators. It can fly untethered for brief periods of time. Photo courtesy of Harvard Microrobotics Lab.

Beloved Professor Passes

Patrick Winston, a beloved professor and computer scientist at MIT, died on July 19 at Massachusetts General Hospital in Boston. He was 76.

A professor at MIT for almost 50 years, Winston was director of MIT’s Artificial Intelligence Laboratory from 1972 to 1997 before it merged with the Laboratory for Computer Science to become MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

A devoted teacher and cherished colleague, Winston led CSAIL’s Genesis Group, which focused on developing AI systems that have human-like intelligence, including the ability to tell, perceive, and comprehend stories. He believed that such work could help illuminate aspects of human intelligence that scientists don’t yet understand.

He is survived by his wife, Karen Prendergast, and his daughter, Sarah.

A devoted teacher and cherished colleague, Patrick Winston led CSAIL’s Genesis Group, which focused on developing AI systems that have human-like intelligence, including the ability to tell, perceive, and comprehend stories. Photo courtesy of Jason Dorfman/MIT CSAIL.

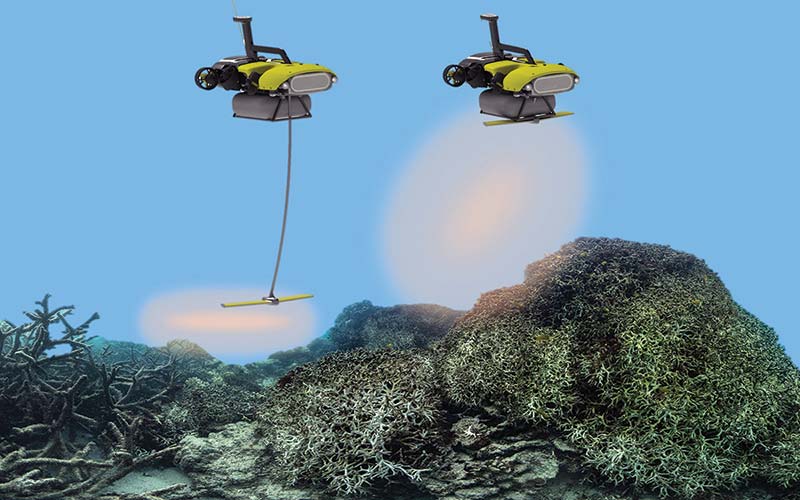

RangerBot to the Rescue

This past spring, a yellow robot glided through a coral reef in the Philippines, spending hours gently sprinkling microscopic baby coral onto the damaged reef below. It was an early test of technology that some researchers think could help speed up efforts to rebuild struggling reefs around the world.

“The world’s reefs are losing corals faster than they can be naturally replaced,” says Peter Harrison, an ecologist at Southern Cross University in Australia, who partnered with Matthew Dunbabin, an engineer from Queensland University of Technology, to build and test RangerBot. “In just about every reef system on the planet, we are suffering from declining corals. So, what we’re focused on is trying to restore coral populations to get the corals growing back on these degraded reef systems as quickly as possible.”

The new process accelerates a technique that Harrison — known for pioneering “coral IVF” — has already tested by hand. Corals typically reproduce en masse at night. Once a year, when the moon, tides, and temperature are right, a blizzard of billions of eggs and sperm floats from corals to the surface of the water for fertilization. In a week or less, new coral larvae begin to restock the reef.

Unfortunately, as coral reefs collapse from heatwaves in the ocean, overfishing, pollution, and other problems, some reefs don’t have enough coral left to successfully spawn and rebuild.

Harrison and his team collect coral spawn from corals that have survived recent mass bleaching events, and are most likely to also survive in the future as ocean temperatures continue to rise. “What we’re trying to do is to capture the remaining genetic diversity of those surviving corals,” he says.

The team raises the coral inside enclosed areas on a reef for five to seven days, then delivers the tiny new corals to the reefs that need them most. This approach, called “larval restoration,” is typically done by hand. However, RangerBot can cover much larger areas: approximately 1,500 square meters an hour.
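At the quoted rate, the time needed to seed a given area is simple division. The sketch assumes RangerBot sustains 1,500 m² per hour with no time lost to turns, recharging, or reloading, so real missions would take longer.

```python
def hours_to_cover(area_m2: float, rate_m2_per_h: float = 1500.0) -> float:
    """Idealized time to cover an area at a constant coverage rate."""
    return area_m2 / rate_m2_per_h

print(f"{hours_to_cover(10_000):.1f} h per hectare")
# prints: 6.7 h per hectare
```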

The current RangerBot is a variation of a device originally designed to hunt and kill an invasive starfish that is another threat to coral reefs. The base of the robot can take different attachments, including the injection system for the starfish or the payload of baby coral (when it plants coral, it’s renamed the LarvalBot).

It navigates through the reef using computer vision and is controlled by a tablet; eventually, it will operate autonomously. It could also be used for everything from monitoring water quality to counting turtles. “We want to let the marine scientists do what they do best, which is assessing conditions,” says Dunbabin. “We just want to provide tools for them to really upscale the activities that they’re doing.”

Robot Ump Doesn’t Strike Out

Professional baseball took a big step forward in July as the home plate umpire sported a “robotic” earpiece in his right ear and a computer in his back pocket to assist with judging pitches.

At a minor league all-star game, a computer officially called balls and strikes for the first time in the history of professional baseball in the United States. In February, Major League Baseball signed a three-year agreement with the independent, eight-team Atlantic League to test experimental rules in line with Commissioner Rob Manfred’s vision for a faster, more action-packed game.

Among the first changes discussed was an automated ball-and-strike system, run via a panel above home plate made by sports data firm TrackMan. After a half-season of testing, the system was ready for the league All-Star Game, debuting with great fanfare and an unambiguous strike.
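Stripped of the tracking hardware, calling a pitch is a geometric test. The sketch below is a simplified, hypothetical version, not TrackMan's or the Atlantic League's implementation. It treats the zone as a rectangle as wide as the 17-inch home plate, with per-batter bottom and top heights supplied by the caller. It calls a strike if any part of the ball (assumed here to be 2.9 inches across) overlaps that rectangle where it crosses the plate.

```python
from dataclasses import dataclass

PLATE_WIDTH_IN = 17.0   # width of home plate
BALL_DIAMETER_IN = 2.9  # approximate; official balls are about 2.86-2.94 in

@dataclass
class StrikeZone:
    bottom_in: float  # height of the zone's lower edge for this batter
    top_in: float     # height of the zone's upper edge for this batter

def is_strike(x_in: float, z_in: float, zone: StrikeZone) -> bool:
    """Call a pitch from where the ball's center crosses the plate.

    x_in: horizontal offset from the middle of the plate (inches).
    z_in: height above the ground (inches).
    Expands the zone by the ball's radius on every side, which treats the
    ball's footprint as a square, so corner calls are slightly generous.
    """
    r = BALL_DIAMETER_IN / 2.0
    half_width = PLATE_WIDTH_IN / 2.0
    inside_horizontally = abs(x_in) <= half_width + r
    inside_vertically = zone.bottom_in - r <= z_in <= zone.top_in + r
    return inside_horizontally and inside_vertically

zone = StrikeZone(bottom_in=18.0, top_in=42.0)
print(is_strike(0.0, 30.0, zone), is_strike(12.0, 30.0, zone))
```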

Umpire Brian deBrauwere huddles behind York Revolution catcher James Skelton while wearing an earpiece during the first inning of the Atlantic League All-Star Game held in York, PA. Photo courtesy of Julio Cortez/Associated Press.

Heavy Petal

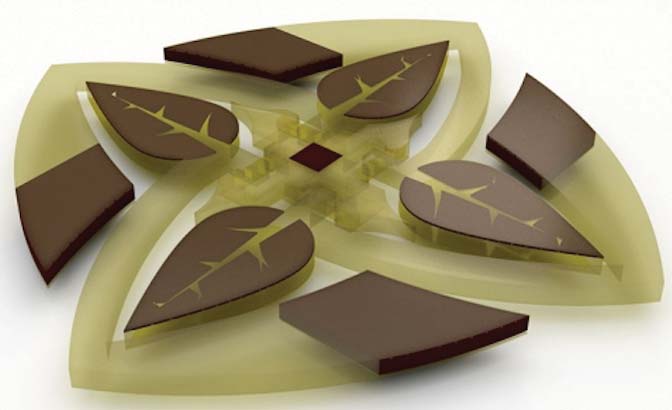

An automated system developed by MIT researchers designs and 3D prints complex robotic parts (actuators) that are optimized according to an enormous number of specifications. In short, the system does automatically what is virtually impossible for humans to do by hand.

In a paper recently published in Science Advances, the researchers demonstrated the system by 3D printing floating water lilies with petals equipped with arrays of actuators and hinges that fold up in response to magnetic fields run through conductive fluids.

The actuators are made from a patchwork of three different materials, each with a light or dark color and a property (such as flexibility or magnetization) that controls the actuator’s angle in response to a control signal.

Software first breaks down the actuator design into millions of three-dimensional pixels, or “voxels,” that can each be filled with any of the materials. Then, it runs millions of simulations, filling different voxels with different materials. Eventually, it lands on the optimal placement of each material in each voxel to generate two different images at two different angles. A custom 3D printer then fabricates the actuator by dropping the right material into the right voxel, layer by layer.
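That design loop is an optimization over discrete material assignments. As a toy stand-in, here is a simple random local search on a tiny 2D grid; it is not the MIT team's algorithm, and the materials, target patterns, and cost are all hypothetical. It assigns one of three materials to each voxel and keeps mutations that don't worsen the mismatch between two "views" of the block and two target patterns. Because each view averages darkness along rows or columns, every voxel affects both images at once.

```python
import random

# Hypothetical materials: (name, darkness). Darkness 0 = light, 1 = dark.
MATERIALS = [("flexible-light", 0.0), ("flexible-dark", 1.0), ("magnetic-dark", 1.0)]

def views(grid):
    """Two 'images' of a 2D voxel grid: mean darkness down each column and along each row."""
    rows, cols = len(grid), len(grid[0])
    dark = [[MATERIALS[m][1] for m in row] for row in grid]
    col_view = [sum(dark[r][c] for r in range(rows)) / rows for c in range(cols)]
    row_view = [sum(dark[r]) / cols for r in range(rows)]
    return col_view, row_view

def cost(grid, target_cols, target_rows):
    """Sum of squared errors between both views and their targets."""
    col_view, row_view = views(grid)
    return (sum((a - b) ** 2 for a, b in zip(col_view, target_cols))
            + sum((a - b) ** 2 for a, b in zip(row_view, target_rows)))

def optimize(rows, cols, target_cols, target_rows, iters=5000, seed=0):
    """Random local search: mutate one voxel at a time, keep non-worsening moves."""
    rng = random.Random(seed)
    grid = [[rng.randrange(len(MATERIALS)) for _ in range(cols)] for _ in range(rows)]
    best = cost(grid, target_cols, target_rows)
    for _ in range(iters):
        r, c = rng.randrange(rows), rng.randrange(cols)
        old = grid[r][c]
        grid[r][c] = rng.randrange(len(MATERIALS))
        new = cost(grid, target_cols, target_rows)
        if new <= best:
            best = new
        else:
            grid[r][c] = old  # revert the mutation
    return grid, best

target = [0.25, 0.5, 0.75, 1.0]
grid, err = optimize(4, 4, target, target)
print(err)
```

This toy cost only looks at color, so the two dark materials are interchangeable here. A real objective would also score each voxel's mechanical response, which is where the millions of simulations come in.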

A new MIT-invented system automatically designs and 3D prints complex robotic actuators optimized according to a very large number of specifications such as appearance and flexibility. To demonstrate the system, researchers fabricated floating water lilies with petals equipped with arrays of actuators and hinges that fold up in response to magnetic fields run through conductive fluids. Photo courtesy of Subramanian Sundaram.
