Servo Magazine ( 2022 Issue-2 )

Bots in Brief (02.2022)

First Steps to LEGO-Size Bipedals

At the recent International Conference on Robotics and Automation (ICRA), roboticists from Carnegie Mellon University (CMU) asked an interesting question: What happens if you try to scale down a bipedal robot? Like, way down? Their paper states the goal plainly: to make miniature walking robots as small as a LEGO Minifigure (with a one-centimeter leg) or smaller.

Their current robot (while small, its legs are 15 cm long) is obviously bigger than a LEGO Minifigure. However, that’s okay, because it’s not supposed to be quite as tiny as the group’s ultimate ambition would be. At least not yet.

It’s a platform that the CMU researchers are using to figure out how to proceed. They’re still assessing what it’s going to take to shrink bipedal walking robots to the point where they could ride in Matchbox cars.

At very small scales, robots run into all kinds of issues, including space and actuation efficiency. These crop up mainly because it’s simply not possible to cram the same number of batteries and motors that go into bigger bots into something that tiny. So, in order to make a tiny robot that can usefully walk, designers have to get creative.

Bipedal walking is already a somewhat creative form of locomotion. Despite how complex bipedal robots tend to be, if the only criterion for a bipedal robot is that it walks, then it’s kind of crazy how simple roboticists can make them.

For small humanoids, the CMU researchers are trying to figure out how to leverage the principle of dynamic walking to make robots that can move efficiently and controllably while needing the absolute minimum of hardware and in a way that can be scaled. With a small battery and just one actuator per leg, CMU’s robot is fully controllable, with the ability to turn and to start and stop on its own.

“Building at a larger scale allows us to explore the parameter space of construction and control, so that we know how to scale down from there,” says Justin Yim, one of the authors of the ICRA paper. “If you want to get robots into small spaces for things like inspection or maintenance or exploration, walking could be a good option, and being able to build robots at that size scale is a first step.”

“Obviously [at that scale], we will not have a ton of space,” adds Aaron Johnson, who runs CMU’s Robomechanics Lab. “Minimally actuated designs that leverage passive dynamics will be key. We aren’t there yet on the LEGO scale, but with this paper, we wanted to understand the way this particular morphology walks before dealing with the smaller actuators and constraints.”

Scalable Minimally Actuated Leg Extension Bipedal Walker Based on 3D Passive Dynamics, by Sharfin Islam, Kamal Carter, Justin Yim, James Kyle, Sarah Bergbreiter, and Aaron M. Johnson from CMU was presented at ICRA 2022.

Seed-y Inspiration

The relatively simple and popular quadrotor design for drones emphasizes performance and manufacturability. However, there are some trade-offs; namely, endurance. Four motors with rapidly spinning tiny blades suck up battery power, and while consumer drones have mitigated this somewhat by hauling around ever-larger batteries, the fundamental problem is one of efficiency in flight.

In a paper published recently in Science Robotics, researchers from the City University of Hong Kong have come up with a drone inspired by maple seeds that weighs less than 50 grams but can hold a stable hover for over 24 minutes.

Maple seed pods (also called samaras) are those things you see whirling down from maple trees in the fall, helicopter style. The seed pods are optimized for maximum air time through efficient rotating flight thanks to an evolutionary design process that rewards the distance traveled from the parent tree, resulting in a relatively large wing with a high ratio of wing to payload.

Samara drones (or monocopters, more generally) have been around for a while. They make excellent spinny gliders when dropped in midair, and they can also achieve powered flight with the addition of a propulsion system on the tip of the wing. This particular design is symmetrical using two sizable wings, each with a tip propeller. The electronics, battery, and payload are in the center, and flight consists of the entire vehicle spinning at about 200 RPM.

A bicopter is inherently stable, with the wings acting as aerodynamic dampers that result in passive-attitude stabilization — something that even humans tend to struggle with. With a small battery, this drone weighs just 35 grams with a wingspan of about 60 centimeters. The key to the efficiency is that unlike most propellerized drones, the propellers aren’t being used for lift. They’re being used to spin the wings, and that’s where the lift comes from.

Full 3D control is achieved by carefully pulsing the propellers at specific points in the rotation of the vehicle to translate in any direction. With a 650 milliampere-hour battery (contributing to a total vehicle mass of 42.5 g), the drone is able to hover in place for 24.5 minutes. The ratio of mass to power consumption that this represents is about twice as good as other small multirotor drones.
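For a rough sense of what that endurance means electrically, the battery and hover figures above can be turned into an average current draw. The sketch below assumes the full 650 mAh is consumed over the 24.5 minute hover, which the researchers may not have done in practice; the function name is ours and purely illustrative.

```python
# Figures quoted in the text above.
BATTERY_CAPACITY_MAH = 650.0  # battery capacity, milliampere-hours
HOVER_TIME_MIN = 24.5         # reported hover endurance, minutes
VEHICLE_MASS_G = 42.5         # total vehicle mass, grams


def average_current_a(capacity_mah: float, minutes: float) -> float:
    """Average current in amperes, ASSUMING the whole capacity is used."""
    hours = minutes / 60.0
    return (capacity_mah / 1000.0) / hours


current_a = average_current_a(BATTERY_CAPACITY_MAH, HOVER_TIME_MIN)
current_per_gram_ma = 1000.0 * current_a / VEHICLE_MASS_G

print(f"Average hover current: {current_a:.2f} A")
print(f"Current per gram of vehicle: {current_per_gram_ma:.1f} mA/g")
```

Under that assumption the drone draws roughly 1.6 A on average while hovering. A real battery is rarely drained completely, so the true figure is probably somewhat lower.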

You may be wondering just how useful a platform like this is if it’s constantly spinning. Some sensors simply don’t care about spinning, while other sensors have to spin themselves if they’re not already spinning. (You can see how this spinning effect could actually be a benefit for, say, LiDAR.) Cameras are a bit more complicated, but by syncing the camera frame rate to the spin rate of the drone, the researchers were able to use a 22 g camera payload to capture four 3.5 fps videos simultaneously, recording video of every direction at once.

Despite the advantages of these samara-inspired designs, we haven’t seen them make much progress outside of research contexts. The added complication seems to be enough that at least for now, it’s just easier to build traditional quadrotors. Near-term applications might be situations in which you need lightweight, relatively long-duration functional aerial mapping or surveillance systems.

Long Ma, the Dragon

For centuries, people in China have maintained a posture of awe and reverence for dragons. In traditional Chinese culture, the dragon — which symbolizes power, nobility, honor, luck, and success in business — even has a place in the calendar. Every 12th year is a dragon year.

Flying, fire-breathing horses covered in lizard scales have been part of legend, lore, and literature since those things first existed. Now, in the age of advanced technology, an engineer has created his own mechatronic version of the mythical beast.

François Delarozière, founder and artistic director of French street-performance company La Machine, is shown riding his brainchild, called Long Ma. The 72 tonne steel and wood automaton can carry 50 people on a covered terrace built into its back and still walk at speeds of up to 4 kilometers per hour.

It flaps its leather and canvas-covered wings and shoots fire, smoke, or steam from its mouth, nose, eyelids, and more than two dozen other vents located along its 25 meter long body.

Long Ma spends most of its time in China, but the mechanical beast will be transported to France so it can participate in fairs there this summer. It has already been featured at the Toulouse International Fair, where it thrilled onlookers from April 9th to 18th.

Nos.e Knows Its Whiskey

The Nos.e analyzed and identified different whiskey samples at CEBIT 2019. Photo courtesy of University of Technology Sydney.

A whiskey connoisseur can take a whiff of a dram and know exactly the brand, region, and style of whiskey in hand. So, how do our human noses compare to electronic noses in distinguishing the qualities of a whiskey?

A study published earlier this year in IEEE Sensors Journal describes a new e-nose that is surprisingly accurate at analyzing whiskies. It can identify the brand of whiskey with more than 95 percent accuracy after just one “whiff.”

“This lucrative industry has the potential to be a target of fraudulent activities such as mislabeling and adulteration,” explains Steven Su, an associate professor at the Faculty of Engineering and IT, University of Technology Sydney. “Trained experts and experienced aficionados can easily tell the difference between whiskies from their scents. But it is quite difficult for most consumers, especially amateurs.”

Therefore, Su and his colleagues sought to adapt one of their e-noses so that it could analyze some key qualities of whiskey. Their original e-nose was designed to detect illegal animal parts sold on the black market such as rhino horns, and they have since also adapted their e-nose for breath analysis and assessing food quality.

Their newest whiskey-sniffing e-nose — called Nos.e — contains a little vial where the whiskey sample is added. The scent of the whiskey is injected into a gas sensor chamber which detects the various odors and sends the data to a computer for analysis. The most important scent features are then extracted and analyzed by machine learning algorithms designed to recognize the brand, region, and style of whiskey.

In their study, the researchers put Nos.e to the test by using it to analyze a panel of half a dozen different whiskies, ranging from Johnnie Walker Red and Black label brands to Macallan’s 12-year-old single malt Scotch whisky. For comparison, they also analyzed the whiskey samples using state-of-the-art two-dimensional gas chromatography time-of-flight mass spectrometry (GC×GC-ToFMS). Whereas an e-nose simply assesses the overall aroma profile of a substance, the GC×GC-ToFMS enables the identification of individual compounds in the complex scent mixtures. Although the GC×GC-ToFMS is highly accurate, this instrument approach is also more expensive and complex to use than an e-nose.

Su’s team was taken aback by the results, which showed that Nos.e was on par with the GC device in terms of identifying whiskey brands.

Getting Plastered

Roboticists have seized an opportunity to match the strengths of humans and robots while “plastering” over their respective weaknesses.

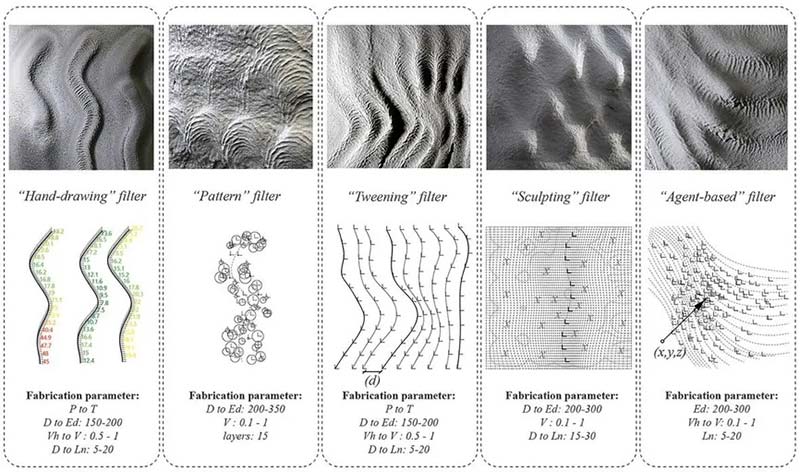

At CHI 2022, researchers from ETH Zurich presented an interactive robotic plastering system that lets artistic humans use augmented reality to create three-dimensional designs meant to be sprayed in plaster on bare walls by robotic arms.

This robotic system offers an advanced kind of interface that enables a new kind of art that wouldn’t be possible otherwise, and that doesn’t require a specific kind of expertise. It’s not better or worse; it’s just a different approach to design and construction.

I Smell a Rat

There’s a lot of interest in designing robots that are agile enough to navigate through tight spaces. This ability could be useful in assessing disaster zones or pipelines, for example. However, choosing the right design is crucial to success in such applications.

“Though legged robots are very promising for use in real world applications, it is still challenging for them to operate in narrow spaces,” explains Qing Shi, a Professor at the Beijing Institute of Technology. “Large quadruped robots cannot enter narrow spaces, while micro quadruped robots can enter the narrow spaces but face difficulty in performing tasks owing to their limited ability to carry heavy loads.”

So, instead of designing a large four-legged robot or microrobots, Shi and his colleagues decided to create a robot inspired by an animal highly adept at squeezing through tight spaces and turning on a dime: the rat.

In a study recently published in IEEE Transactions on Robotics, the team demonstrated how their new rat-inspired robot, SQuRo (small-sized Quadruped Robotic Rat), can walk, crawl, climb over objects, and turn sharply with unprecedented agility. What’s more, SQuRo can recover from falls like its organic inspiration.

Shi and his colleagues first used X-rays of real rats to better understand the animal’s anatomy, especially its joints. They then designed SQuRo to have a structure, movement patterns, and degrees of freedom (DOF) similar to those of the rodents they studied. This includes two DOFs in each limb, the waist, and the head; the setup allows the robot to replicate a real rat’s flexible spine movement.

SQuRo was then put to the test through a series of experiments, first exploring its ability to perform four key motions: crouching-to-standing, walking, turning, and crawling. The turning results were especially impressive, with SQuRo demonstrating it can turn on a very tight radius of less than half its own body length. “Notably, the turning radius is much smaller than other robots, which guarantees the agile movement in narrow space,” Shi explained.

Next, the researchers tested SQuRo in more challenging scenarios. In one situation they devised, the robotic rodent had to make its way through a narrow irregular passage that mimicked a cave environment. SQuRo successfully navigated the passageway. In another scenario, SQuRo successfully toted a 200 gram weight (representing 91 percent of its own weight) across a field that included inclines of up to 20 degrees.

Any robot that is navigating disaster zones, pipelines, or other challenging environments will need to be able to climb over any obstacles it encounters. With that in mind, the researchers also designed SQuRo so that it can lean back on its haunches and put its forelimbs in position to climb over an object — similar to what real rats do.

In an experiment, they showed that SQuRo can overcome obstacles 30 millimeters high (which is 33 percent of its own height) with a success rate of 70 percent. In a final experiment, SQuRo was able to right itself after falling on its side.
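The payload and obstacle figures above are given both in absolute terms and as percentages of the robot itself, so they can be inverted to estimate SQuRo's own mass and height. This is only a back-of-the-envelope sketch: the percentages in the text are rounded, and the helper name is ours.

```python
def whole_from_part(part: float, percent_of_whole: float) -> float:
    """Recover the whole when `part` is `percent_of_whole` percent of it."""
    return part / (percent_of_whole / 100.0)


# 200 g payload is quoted as 91 percent of the robot's own weight.
robot_mass_g = whole_from_part(200.0, 91.0)

# 30 mm obstacle is quoted as 33 percent of the robot's own height.
robot_height_mm = whole_from_part(30.0, 33.0)

print(f"Implied robot mass:   {robot_mass_g:.0f} g")
print(f"Implied robot height: {robot_height_mm:.0f} mm")
```

The implied figures come out at roughly 220 grams and 90 millimeters, which gives a feel for how small the robot is.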

“To the best of our knowledge, SQuRo is the first small-sized quadruped robot of this scale that is capable of performing five motion modes, which includes crouching-to-standing, walking, crawling, turning, and fall recovery,” boasted Shi.

He says the team is interested in commercializing the robot and plans to improve its agility via closed-loop control and in-depth dynamic analysis. “Moreover, we will install more sensors on the robot to conduct field tests in narrow unstructured pipelines,” says Shi. “We are confident that SQuRo has the potential to be used in pipeline [fault] detection after being equipped with cameras and other detection sensors.”

TurtleBot Time

Clearpath Robotics is now offering their newest, fanciest TurtleBot: the TurtleBot 4. Built on top of iRobot’s Create 3 in close partnership with Open Robotics, the TurtleBot 4 is a relatively affordable way to get started with ROS 2 even as a robotics beginner. For folks looking for something more advanced, TurtleBot 4 also has the potential to help you extend your experience into graduate-level research and beyond.

TurtleBot 4’s big differentiator is that it’s designed to showcase ROS 2, the powerful open source Robot Operating System that is working hard to successfully transition from robotics research into an all-purpose framework that can safely and reliably power commercial robots as well. This is the first version of the TurtleBot to run ROS 2 from the ground up (including the Create 3 base), and it offers an opportunity for anyone from middle schoolers on up to learn ROS 2 in a safe and well-supported way on real hardware.

There will be two versions of the TurtleBot 4 available from Clearpath. Both versions use the iRobot Create 3 development platform as a mobility base with the same power and charging system, including a base station. Both also include a 2D RPLIDAR-A1 sensor with a 0.15 m to 12 m range. Compute comes in the form of a Raspberry Pi 4B running Ubuntu 20.04 with ROS 2 already installed.

From there, the TurtleBot 4 Standard splits off from the TurtleBot 4 Lite. The Lite version misses out on some additional options for user accessible power, as well as useful interfaces including extra LEDs, some physical buttons, and a small OLED display that by default shows the robot’s IP address (or whatever else you want). This is especially helpful because it makes it easy to fire the robot up and launch a demo behavior without requiring an external computer.

The other big difference is in the sensor. The Lite includes an OAK-D-Lite camera and stereo depth sensor, while the TurtleBot 4 Standard comes with a more capable OAK-D-Pro.

The cost of the TurtleBot 4 Lite is $1,195, while the TurtleBot 4 Standard is $1,850. This is certainly a premium over what you’d pay for all of the parts individually, and you can build yourself a TurtleBot 4 mostly from scratch if you want to.

Using the Create 3 as a base gives the TurtleBot 4 both the ruggedness of a Roomba and a bunch of useful integrated sensors — the same ones that Roombas use to reliably navigate your house and not fall down your stairs. The Create 3’s battery gives the TurtleBot 4 an impressive minimum battery life of 2.5 hours, and all the parts are easy to fix or replace since you’ve got access to iRobot’s supply chain. Top speed is nearly half a meter per second, or slightly slower if you don’t disable the cliff sensors.

If any of this doesn’t satisfy your needs, part of the point of the TurtleBot platform is that it’s super easy to expand, as long as you know what you’re doing (or are willing to learn). Power and communications ports are easy to access and the TurtleBot 4 has lots of easy ways to mount up to 9 kilograms of hardware.

TurtleBot 4 will ship fully assembled with all necessary software pre-installed and configured, and you’ll have detailed user documentation plus demo code and a bunch of tutorials. There’s also an Ignition Gazebo simulation model to play with, which you can access without even buying a TurtleBot 4 since it’s completely free. This should be especially useful for classrooms, where multiple students could work in simulation before trying things out on the real robot.

DARPA Does Off-Road

Earlier this year, DARPA announced the first phase of a new program called RACER, which stands for Robotic Autonomy in Complex Environments with Resiliency. The RACER program is all about high speed driving in unstructured environments, which is a problem that has not been addressed by the commercial-vehicle-autonomy industry since we have roads.

Where DARPA is going this time, there are no roads, and the agency wants autonomous vehicles to be able to explore on their own, as well as keep up with vehicles driven by humans.

Three teams that will each get funding and vehicles from DARPA are Carnegie Mellon University, NASA JPL, and the University of Washington. If everything goes well, we’ll be seeing some absolutely bonkers off-road autonomous racing over the next three years.

The techniques developed through the RACER program aren’t military-specific. For example, you can easily imagine how they could be applied to situations where you’re driving on a road that isn’t part of a data set. Or, there are lots of roads out there that are just terrible, and it would be nice if an autonomous vehicle could handle those as well.

The US Army’s Autonomous Platform Demonstrator (APD).

Each team will be provided with vehicles by DARPA, each of which will have the same sensors, the same computer, and the same ROS-based software infrastructure, because DARPA wants to emphasize the development of autonomy software and algorithms rather than seeing which team can staple the most expensive sensors to their vehicle.

During the first phase, the vehicles will be off-road buggy-type things, similar to the Polaris MRZR-X. If the first phase is a success and the program enters Phase 2, the vehicles get upgraded, and each team will get to play around with one of the US Army’s Autonomous Platform Demonstrators (APD).

It’s a six-wheeled hybrid electric vehicle that weighs nearly 10 tonnes, and was designed from the ground up to be unmanned. It can reach speeds of 50 miles per hour, climb a one meter step, manage a slope of 60 percent, and turn in place.

DARPA anticipates running a series of field experiments in each phase of the RACER program. Each experiment will be 10 days long, and they’ll take place at six-month intervals, with the first having kicked off in March at the National Training Center in Ft. Irwin, CA.

The courses during Phase 1 experiments will likely be about five kilometers long. DARPA describes them as “generally trail-less off-road natural terrain with vegetation, slope, discrete obstacles, and ground surface changes.” The vehicles should also be able to handle common environmental conditions, including “dusk/dawn, moderate dust, moderate rain/snow, light fog, natural shadows, lighting changes, and possibly exposure to night conditions.”

All that teams will get is a list of GPS coordinates reflecting course boundaries, route waypoints, and the end goal. Teams can use GPS to try to localize if they want to, but a GPS signal may not be available at all times. When it is, it’ll only be accurate to ±10 meters.

They can also use a topographic map, but only at a scale of 1:50,000. Otherwise, no external localization or preexisting information can be used, and maps from prior runs aren’t allowed either. To keep things achievable, DARPA will make sure that “multiple routes between waypoints will exist that can achieve RACER speed metrics when driven by a human driver.”

DARPA’s hope is that in Phase 1, teams will be able to demonstrate average autonomous speeds of 18 kilometers per hour with interventions required no more frequently than one every 2 km. Phase 2 (using the APD) would be significantly more aggressive, with course lengths of 15 to 30 km or more, an average autonomous speed goal of 29 km/h, and interventions only once every 10 km.
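To make those targets concrete, the sketch below converts each phase's speed and intervention goals into a run time and an allowed number of interventions for a given course length. It assumes the vehicle holds the average speed over the whole course and uses the course lengths quoted above; the class and method names are ours, not DARPA's.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseGoal:
    """Speed and intervention targets for one RACER phase (from the text)."""
    name: str
    avg_speed_kmh: float        # target average autonomous speed
    km_per_intervention: float  # at most one intervention per this distance

    def run_time_min(self, course_km: float) -> float:
        """Minutes to finish a course at exactly the target average speed."""
        return 60.0 * course_km / self.avg_speed_kmh

    def intervention_budget(self, course_km: float) -> float:
        """Interventions allowed over the course at the target rate."""
        return course_km / self.km_per_intervention


PHASE_1 = PhaseGoal("Phase 1", avg_speed_kmh=18.0, km_per_intervention=2.0)
PHASE_2 = PhaseGoal("Phase 2", avg_speed_kmh=29.0, km_per_intervention=10.0)

for goal, course_km in [(PHASE_1, 5.0), (PHASE_2, 15.0), (PHASE_2, 30.0)]:
    print(
        f"{goal.name}, {course_km:.0f} km course: "
        f"{goal.run_time_min(course_km):.1f} min, "
        f"up to {goal.intervention_budget(course_km):.1f} interventions"
    )
```

On these assumptions, a five-kilometer Phase 1 course takes under 17 minutes with a budget of about two interventions, while a 30 km Phase 2 course takes a little over an hour with a budget of three.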

If you’re wondering where these metrics come from, here’s what DARPA is looking for the autonomous vehicles to be able to do: “Maintain maneuver with manned combat vehicles at their OPTEMPO [that is, “operations tempo”] speeds, specifically the M1 Abrams main battle tank.”

Special Delivery

Autonomous delivery specialist Nuro now has a third version of its self-driving pod.

The newer electric vehicle comes with more space for customer orders, customizable compartments for different types of goods, and an updated suite of navigation cameras, radar, LiDAR, and thermal cameras to equip the pod with a detailed view of its immediate environment.

For the first time, it also features an external airbag to protect any distracted walkers and wayward cyclists who inadvertently stray into the pod’s path. A photo (shown here) released by the California-based company shows the airbag fully inflated, covering the entire front of the driverless pod. This is also the first Nuro pod that can be commercialized at scale, the company said.

Unlike self-driving cars being tested by Waymo and others, Nuro’s custom-built vehicles are designed exclusively to carry goods, with no space for passengers or even a safety driver. In fact, they don’t even have steering wheels.

Such a design gives Nuro’s vehicle a footprint that’s 20 percent smaller than the average passenger car, enabling it to take up the absolute minimum amount of road space.

Nuro has been testing earlier iterations of its vehicle on public roads in three states and has inked partnerships with the likes of Kroger, FedEx, Domino’s, and CVS Pharmacy.

Select customers can place an order with a local business such as a grocery store or pizza restaurant using Nuro’s smartphone app. When the autonomous vehicle carrying the order reaches its destination address, the customer receives an alert on their phone. They then pop outside and simply tap in a code on the vehicle’s touchscreen to unlock the compartment containing their order.

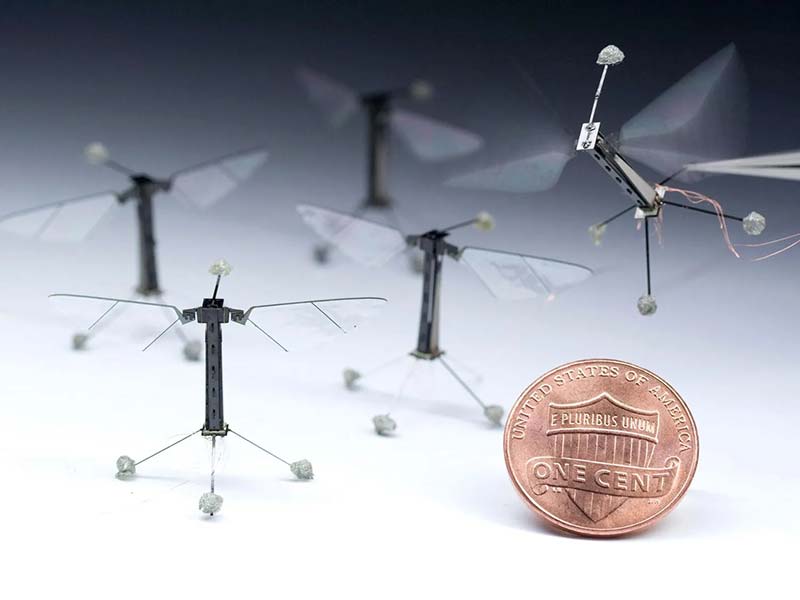

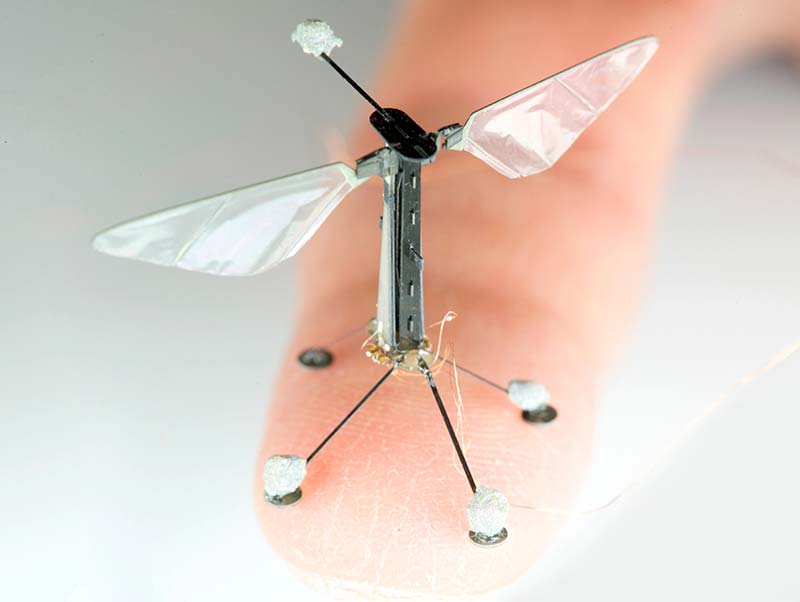

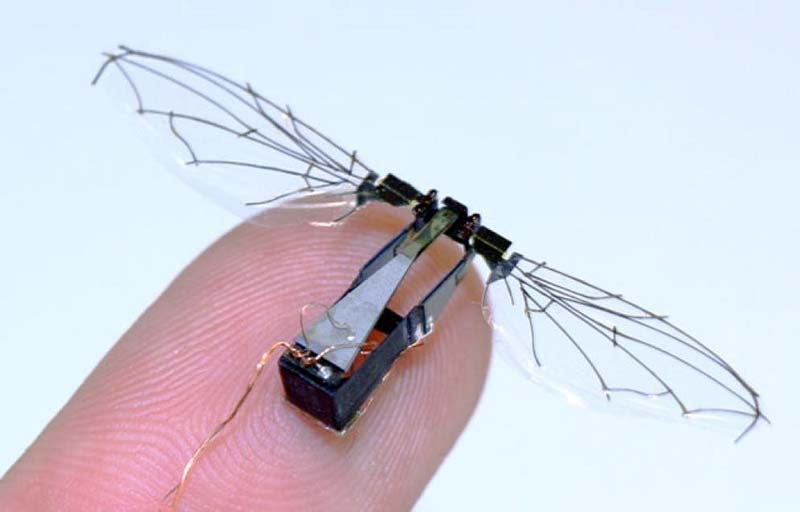

RoboBee Buzzes Better

Since becoming the first insect-inspired robot to take flight, Harvard University’s famous little robotic bee — dubbed the RoboBee — has achieved novel perching, swimming, and sensing capabilities, among others.

More recently, RoboBee has hit another milestone: precision control over its heading and lateral movement, making it much more adept at maneuvering. As a result, RoboBee can hover and pivot much better in midair like its biological inspiration, the bumblebee. The advancement is described in a study published recently in IEEE Robotics and Automation Letters.

The higher level of control over flight will be beneficial in a wide range of scenarios where precision flight is needed. For instance, consider needing to explore sensitive or dangerous areas — a task to which RoboBee could be well suited — or if a large group of flying robots must navigate together in swarms.

“One particularly exciting area is in assisted agriculture, as we look ahead towards applying these vehicles in tasks such as pollination, attempting to achieve the feats of biological insects and birds,” explains Rebecca McGill, a Ph.D. candidate in Materials Science and Mechanical Engineering at Harvard, who helped codesign the new RoboBee flight model.

However, achieving precision control with a flapping-winged robot has proven challenging, and for good reason. Helicopters, drones, and fixed-wing vehicles can tilt their wings and blades and incorporate rudders and tail rotors to change their heading and lateral movement. Flapping robots, on the other hand, must move their wings up and down at different speeds in order to rotate while upright in midair. The torque that drives this rotation is called yaw torque.

So, flapping-wing micro-aerial vehicles (FWMAVs) such as RoboBee have to precisely balance the upstroke and downstroke speeds within a single fast-flapping cycle to generate the desired yaw torque to turn the body. “This makes yaw torque difficult to achieve in FWMAV,” explains McGill.

To address this issue, McGill and her team developed a new model that analytically maps out how the different flapping signals associated with flight affect forces and torques, determining the best combination for yaw torque (along with thrust, roll torque, and pitch torque) in real time.

We Might As Well Jump

Over the last decade or so, there’s been an enormous variety of jumping robots. With a few exceptions, these robots look to biology to inspire their design and functionality.

A few exceptions to the bio-inspired approach have included robots that leverage things like compressed gas and even explosives to jump in ways that animals cannot. The performance of these robots is very impressive, at least partially because their jumping techniques don’t get all wrapped up in biological models that can be influenced by nonjumping things, like versatility.

For a group of roboticists from the University of California, Santa Barbara and Disney Research, this led to a simple question: If you were to build a robot that focused exclusively on jumping as high as possible, how high could it jump?

In a paper published in Nature, they answer that question with a robot that can jump 33 meters high, which reaches right about eyeball level on the Statue of Liberty.

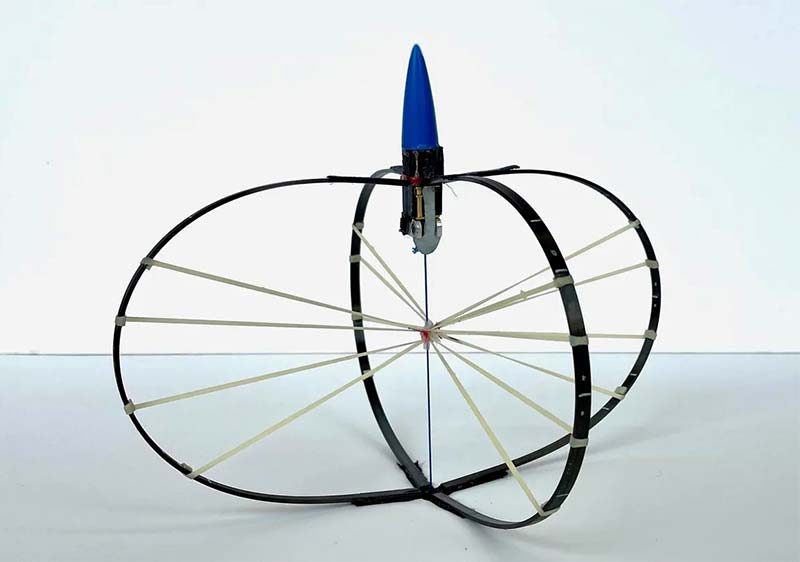

The jumper is 30 centimeters tall and weighs 30 grams, which is relatively heavy for a robot like this. It’s made almost entirely of carbon fiber bows that act as springs, along with rubber bands that store energy in tension. The center bit of the robot includes a motor, some batteries, and a latching mechanism attached to a string that connects the top of the robot to the bottom.

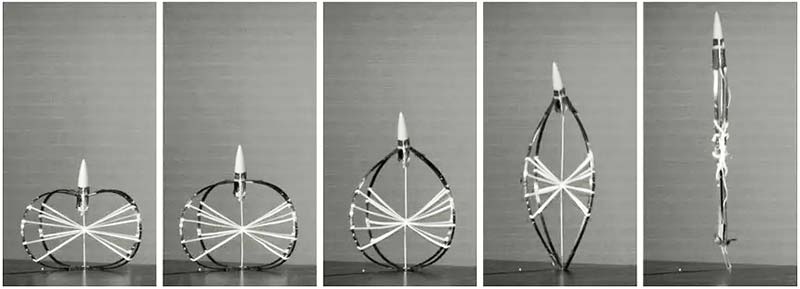

To prepare for a jump, the robot starts spinning its motor which (over the course of two minutes) winds up the string, squishing the robot down, and gradually storing up a kind of ridiculous amount of energy. Once the string is almost completely wound up, one additional tug from the motor trips the latching mechanism, which lets go of the string and releases all of the energy in approximately nine milliseconds, over which time the robot accelerates from zero to 28 meters per second.
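Those launch figures can be sanity-checked with basic kinematics. The sketch below assumes constant acceleration during the nine-millisecond release, ignores air drag after takeoff, and takes g as 9.81 m/s²; none of these assumptions comes from the paper, and the variable names are ours.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed value)

# Figures quoted in the text above.
LAUNCH_SPEED_MS = 28.0    # takeoff speed, meters per second
RELEASE_TIME_S = 0.009    # energy release time, seconds
REPORTED_HEIGHT_M = 33.0  # reported jump height, meters

# Mean acceleration during release, assuming it is constant.
mean_accel_ms2 = LAUNCH_SPEED_MS / RELEASE_TIME_S
mean_accel_g = mean_accel_ms2 / G

# Peak height of a drag-free ballistic launch: h = v^2 / (2 g).
drag_free_height_m = LAUNCH_SPEED_MS ** 2 / (2.0 * G)

# Fraction of the ideal height that the reported jump achieves.
height_fraction = REPORTED_HEIGHT_M / drag_free_height_m

print(f"Mean launch acceleration: {mean_accel_ms2:.0f} m/s^2 ({mean_accel_g:.0f} g)")
print(f"Drag-free peak height:    {drag_free_height_m:.1f} m")
print(f"Reported / drag-free:     {height_fraction:.0%}")
```

The drag-free estimate lands near 40 meters, a bit above the reported 33 meters, which is what you would expect once air resistance is taken into account.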

The robot has a specific energy of over 1,000 joules per kilogram, which is enough to propel it about an order of magnitude higher than even the best biological jumpers, and easily triples the height of any other jumping robot in existence.

A series of high speed images showing the robot releasing the tension in its springs and jumping.

The reason that this robot can jump as high as it does is that it relies on a clever bit of engineering that you won’t find anywhere (well, almost anywhere) in biology: a rotary motor. With a rotary motor and some gears attached to a spring, you can use a relatively low amount of power over a relatively long period of time to store lots of energy as the motor spins.

Animals don’t have access to rotary motors, so while they do have access to springs (tendons), the amount that those springs can be charged up for jumping is limited by how much you can do with the single power stroke that you get from a muscle. The upshot here is that the best biological jumpers (like the galago) simply have the biggest jumping muscles relative to their body mass. This is fine, but it’s a pretty significant limitation to how high animals can possibly jump.
